What is Reinforcement Learning from Human Feedback (RLHF)?

Reinforcement Learning from Human Feedback (RLHF) is a training method that uses human judgments, rather than only a hand-written reward function, as the learning signal for a reinforcement learning agent. Human evaluators rate or compare the model's outputs, and that feedback steers the model toward behavior that matches human expectations.

How Does RLHF Operate?

RLHF adds human feedback to the reinforcement learning loop in four steps:

- Feedback Collection: Humans evaluate the agent’s actions, indicating preferences or ranking outcomes.

- Reward Model Training: The collected feedback is used to train a reward model that predicts how humans would rate a given action. Its predictions stand in for human judgment while the agent learns.

- Policy Optimization: The agent’s policy is refined to maximize rewards based on the trained reward model.

- Iterative Improvement: New feedback is collected on the updated agent, and the reward model and policy are retrained, so the system keeps adapting as tasks and preferences change.

This process results in models that are more aligned with user needs and expectations.
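The reward model training step can be made concrete with a small sketch. It assumes feedback arrives as pairs of (preferred, rejected) outputs and fits a linear reward with the Bradley-Terry pairwise model, which is one common choice rather than the only one. The two features (helpfulness, rudeness), the data, and the function names are hypothetical and exist only for illustration.

```python
import math

def train_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Fit a linear reward r(x) = w . x from (preferred, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            diff = [p - r for p, r in zip(preferred, rejected)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            # Bradley-Terry probability that the evaluator prefers `preferred`.
            p_agree = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent on log p_agree; its gradient is (1 - p_agree) * diff.
            w = [wi + lr * (1.0 - p_agree) * di for wi, di in zip(w, diff)]
    return w

def predict_reward(w, features):
    """Score one output with the learned reward model."""
    return sum(wi * xi for wi, xi in zip(w, features))

# Hypothetical features per output: [helpfulness, rudeness].
# In each pair, the first item is the one the evaluator preferred.
preference_pairs = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.8, 0.1], [0.3, 0.6]),
    ([0.9, 0.2], [0.5, 0.9]),
]
weights = train_reward_model(preference_pairs, dim=2)
```

On this toy data the learned weights reward helpfulness and penalize rudeness, so `predict_reward` can score outputs that no evaluator has seen. A production reward model would typically be a neural network, but the pairwise objective has the same shape.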

Common Uses and Applications of RLHF

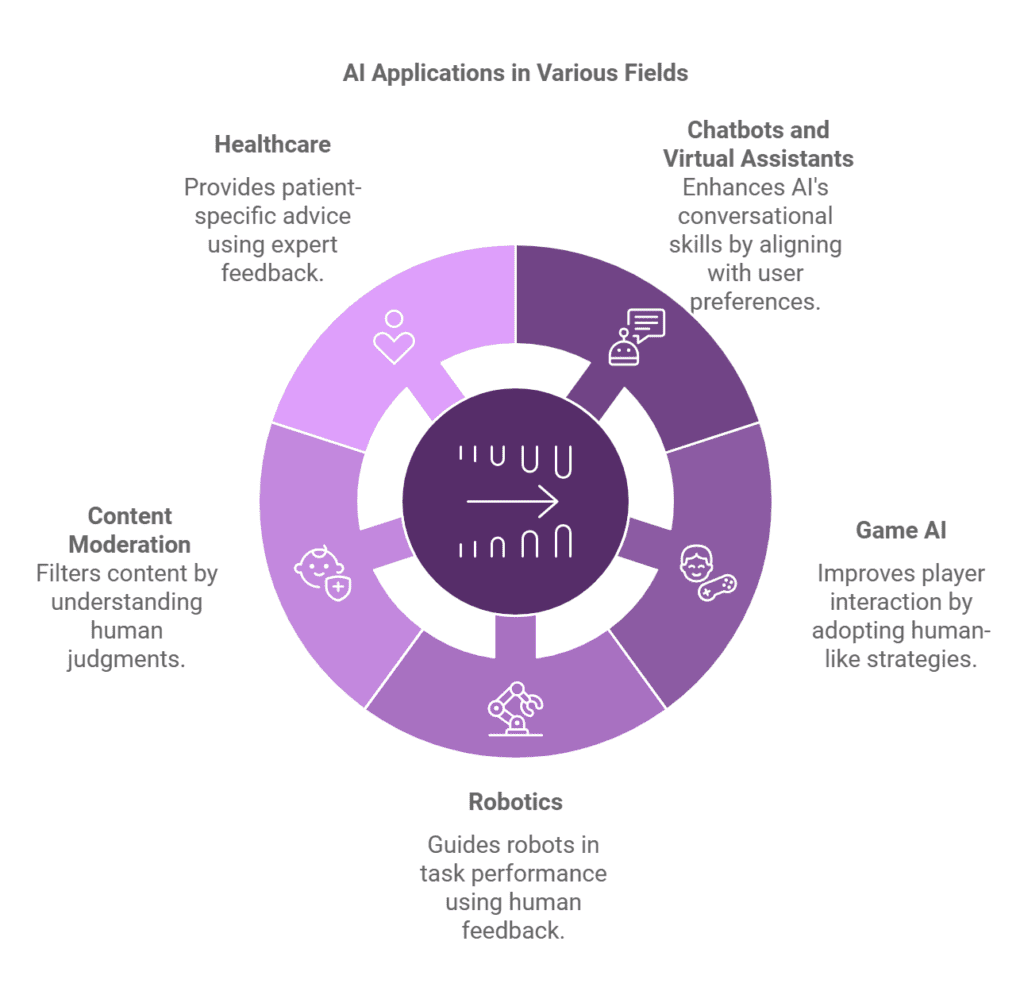

- Chatbots and Virtual Assistants: Enhances the conversational abilities of AI systems by aligning them with user preferences.

- Game AI: Trains gaming agents to adopt human-like strategies and improve player interactions.

- Robotics: Guides robots to perform tasks effectively based on human feedback.

- Content Moderation: Helps AI systems better understand human judgments for filtering content.

- Healthcare: Trains systems to provide patient-specific advice based on expert feedback.

Advantages of RLHF

- Improved Learning Efficiency: Human feedback can speed up training on tasks where a reward function is hard to specify, because evaluators point the agent directly at the desired behavior.

- Better Alignment with Human Preferences: Steers AI systems toward behavior that is consistent with human values.

- Enhanced Decision-Making: Results in models that make more informed and context-aware decisions.

- Adaptability: Allows models to evolve with dynamic environments and user needs.

- Ethical AI Development: Helps reduce unintended behaviors by letting evaluators penalize outputs that conflict with ethical considerations.

Drawbacks or Limitations of RLHF

- Dependency on Feedback Quality: Inaccurate or biased feedback can lead to suboptimal learning.

- Resource Intensity: Collecting and integrating human feedback can be time-consuming and costly.

- Scalability Challenges: Large-scale implementations may struggle to maintain consistent feedback quality.

Real-life Examples of RLHF

OpenAI used RLHF to fine-tune its language models, including InstructGPT and ChatGPT. Human labelers compared model outputs, and training on those comparisons produced responses that were more contextually relevant and coherent.

How Does RLHF Compare to Traditional RL?

Traditional RL relies on a predefined reward signal from the environment, while RLHF learns its reward signal from human judgments. This makes RLHF better suited to complex or subjective tasks, such as judging whether a response is helpful, where a reward function is hard to write by hand.
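One way to write this contrast down is shown below. The notation is assumed here for illustration: $\pi$ is the policy, $\gamma$ a discount factor, $r_{\text{env}}$ the environment's reward, $r_{\phi}$ a reward model with parameters $\phi$, $\sigma$ the logistic function, and $(y_w, y_l)$ a pair of outputs in which the evaluator preferred $y_w$. The pairwise loss is the Bradley-Terry form, a common choice but not the only one.

```latex
% Traditional RL: the environment supplies the reward.
J_{\text{RL}}(\pi) = \mathbb{E}_{\pi}\left[ \sum_{t} \gamma^{t}\, r_{\text{env}}(s_t, a_t) \right]

% RLHF, stage 1: fit a reward model to human comparisons.
\mathcal{L}(\phi) = -\,\mathbb{E}_{(y_w,\, y_l)}\left[ \log \sigma\big( r_{\phi}(y_w) - r_{\phi}(y_l) \big) \right]

% RLHF, stage 2: optimize the policy against the learned reward.
J_{\text{RLHF}}(\pi) = \mathbb{E}_{y \sim \pi}\left[ r_{\phi}(y) \right]
```

The only structural difference is where the reward comes from: $r_{\text{env}}$ is given, while $r_{\phi}$ has to be estimated from human comparisons before the policy can be optimized.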

Expected Future Trends for RLHF

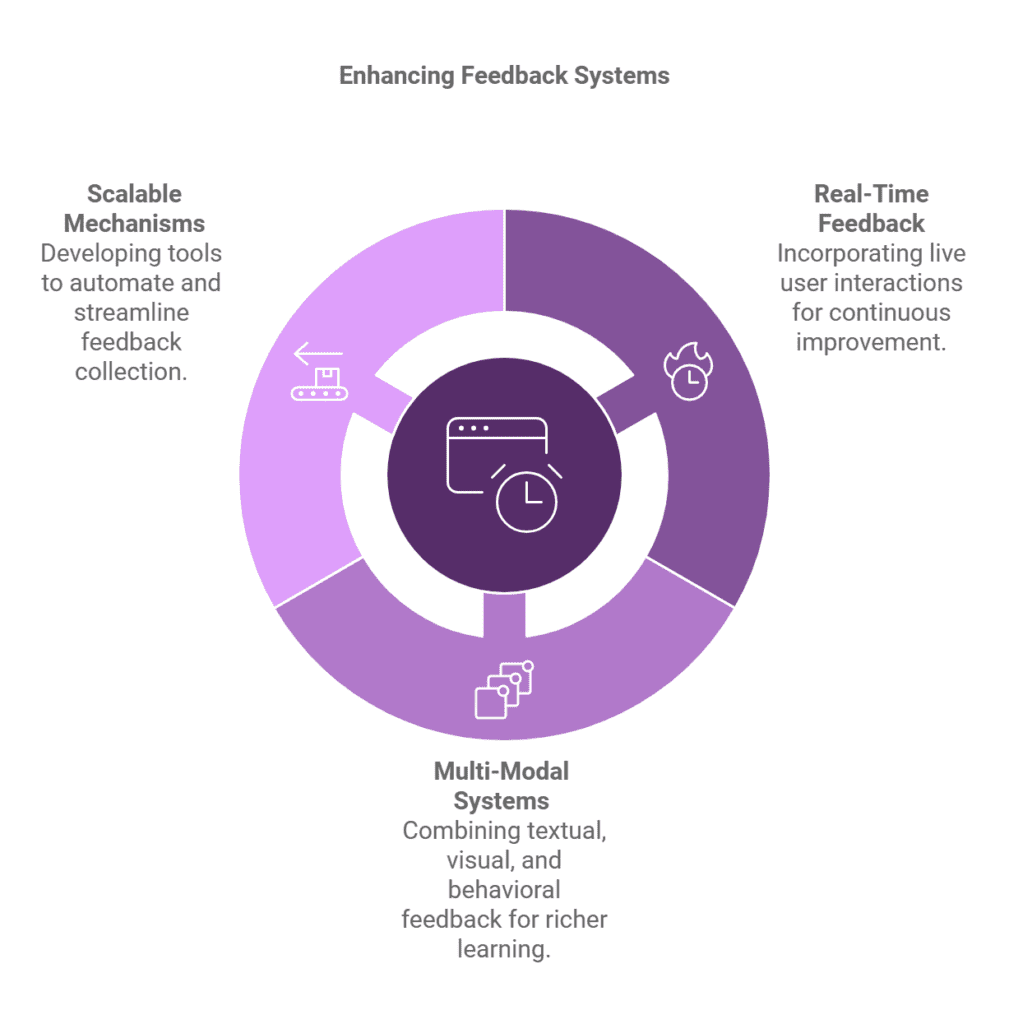

- Integration with Real-Time Feedback: Incorporating live user interactions for continuous improvement.

- Multi-modal Feedback Systems: Combining textual, visual, and behavioral feedback for richer learning.

- Scalable Feedback Mechanisms: Developing tools to automate and streamline feedback collection.

Best Practices for Implementing RLHF

- Quality Feedback: Train evaluators to provide clear and unbiased feedback.

- Iterative Refinement: Continuously collect and integrate feedback to improve system performance.

- Diversified Input: Use feedback from diverse sources to minimize biases.
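One simple way to check feedback quality is to measure how often two evaluators agree on the same comparisons. The sketch below computes Cohen's kappa, a standard agreement statistic that corrects for chance agreement. The label lists are made-up data, with "A" or "B" marking which of two outputs each evaluator preferred.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two evaluators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which the two evaluators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Agreement expected if each evaluator labeled at random with their own label rates.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both evaluators used one identical label throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical preferences from two evaluators over the same eight comparisons.
evaluator_1 = ["A", "A", "B", "B", "A", "B", "A", "A"]
evaluator_2 = ["A", "B", "B", "B", "A", "B", "A", "B"]
kappa = cohens_kappa(evaluator_1, evaluator_2)  # 9/17, roughly 0.53
```

A kappa near 1 means the evaluators agree far more than chance would predict; a value near 0 suggests the guidelines are ambiguous or the evaluators need more training before their feedback is used.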

Step-by-step Guide to Implementing RLHF

- Define the Task: Identify the learning objective and the role of human feedback.

- Collect Feedback: Use surveys, rankings, or interactive tools to gather feedback on the agent’s performance.

- Train the Reward Model: Use collected feedback to train a predictive reward model.

- Optimize Policy: Update the agent’s policy to maximize the predicted rewards.

- Iterate: Continuously refine the reward model and policy based on additional feedback.
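The steps above can be sketched end to end on a toy problem. Everything here is an illustrative assumption: the four canned responses, the `HIDDEN_UTILITY` table that stands in for human evaluators, a reward model with one score per response trained with the Bradley-Terry pairwise model, and exact policy-gradient updates, which are feasible only because the action set is tiny. For simplicity, every pair of responses is compared in each round; a real system would collect feedback on outputs sampled from the current policy.

```python
import math
from itertools import combinations

# Step 1: define the task. The agent must pick the response people prefer.
RESPONSES = ["terse answer", "clear, polite answer", "rambling answer", "rude answer"]
# Hypothetical stand-in for human evaluators: a hidden utility per response.
HIDDEN_UTILITY = [0.4, 0.9, 0.3, 0.1]

def collect_feedback():
    """Step 2: return (winner, loser) index pairs, as evaluators would."""
    feedback = []
    for i, j in combinations(range(len(RESPONSES)), 2):
        feedback.append((i, j) if HIDDEN_UTILITY[i] > HIDDEN_UTILITY[j] else (j, i))
    return feedback

def train_reward_model(scores, feedback, lr=0.1, epochs=50):
    """Step 3: batch gradient ascent on the Bradley-Terry log-likelihood."""
    scores = list(scores)
    for _ in range(epochs):
        grads = [0.0] * len(scores)
        for winner, loser in feedback:
            p_win = 1.0 / (1.0 + math.exp(scores[loser] - scores[winner]))
            grads[winner] += 1.0 - p_win
            grads[loser] -= 1.0 - p_win
        scores = [s + lr * g for s, g in zip(scores, grads)]
    return scores

def softmax(logits):
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def optimize_policy(logits, scores, lr=0.5, steps=50):
    """Step 4: exact policy-gradient ascent on the expected predicted reward."""
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * s for p, s in zip(probs, scores))
        # d(expected reward) / d(logit k) = p_k * (score_k - expected)
        logits = [l + lr * p * (s - expected) for l, p, s in zip(logits, probs, scores)]
    return logits

scores = [0.0] * len(RESPONSES)   # reward model: one learned score per response
logits = [0.0] * len(RESPONSES)   # policy: softmax over responses
feedback = []
for _ in range(5):                # Step 5: iterate
    feedback += collect_feedback()
    scores = train_reward_model(scores, feedback)
    logits = optimize_policy(logits, scores)

policy = softmax(logits)
best = policy.index(max(policy))
```

After the loop, the policy places most of its probability on the response the simulated evaluators rank highest, even though the policy itself never sees `HIDDEN_UTILITY`; it only sees the reward model's scores.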

Frequently Asked Questions

Q: What is the role of human feedback in RLHF?

A: Human feedback shapes the reward model, aligning the agent’s actions with human preferences.

Q: How does RLHF improve AI behavior?

A: By leveraging human insights, RLHF enables AI systems to make decisions that better align with human values and expectations.

Q: What industries benefit from RLHF?

A: RLHF is used in healthcare, gaming, robotics, and content moderation, as well as in products built on chatbots and virtual assistants.

Q: What challenges does RLHF address?

A: RLHF helps overcome limitations of traditional RL by handling subjective tasks and aligning learning with ethical considerations.

Related Terms

- Reinforcement Learning: The foundation of RLHF, focusing on reward-based decision-making.

- Human Feedback: Input from evaluators that guides AI behavior.

- Reward Models: Predictive models trained on human feedback to score how well an action matches desired outcomes.