The quality of your AI’s output depends heavily on the quality of your input.

Prompting is the art and science of crafting specific instructions or queries to guide an AI system toward generating desired outputs, responses, or behaviors. Think of it as giving directions to a highly capable but literal-minded foreign visitor in your city. The more precise, contextual, and well-structured your directions are, the more likely they’ll arrive exactly where you intended.

Mastering this skill is no longer optional. It’s the primary interface for controlling some of the most powerful technology on the planet. Getting it wrong means wasted time, inaccurate results, and unreliable AI behavior. Getting it right is a superpower.

What is prompting in AI?

Prompting is how we communicate our goals to a large language model (LLM) or another generative AI system. A prompt is the text, image, or combination of inputs we provide to steer the model. It can be a simple question like “What is the capital of France?” or a complex set of instructions with context, examples, and constraints.

How does prompting differ from traditional programming?

This is a crucial distinction: prompting and programming are fundamentally different ways of getting a machine to do something.

- Programming is explicit. You write precise, logical code using a formal language like Python or C++. The computer follows your instructions exactly. If there’s an error, it’s usually a bug in your logic.

- Prompting is indirect. You use natural language to guide a pre-trained model. You aren’t writing new code; you’re finding the right words to draw out a capability the model already acquired during training.

Prompting also differs from searching. A search query retrieves existing information, while a prompt asks the AI to generate new content.

What are the main types of prompting techniques?

While there are dozens of variations, they mostly fall into a few key categories.

- Zero-Shot Prompting: You ask the AI to do a task without giving it any prior examples. You rely on its existing knowledge.

- Few-Shot Prompting: You include a few examples of the input-output pattern you want in the prompt itself. This gives the model a clear template to follow.

- Chain-of-Thought (CoT) Prompting: You instruct the model to “think step-by-step.” This encourages it to break a complex problem into a sequence of intermediate steps, which often improves its accuracy on multi-step reasoning tasks.
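The three techniques differ mainly in how the prompt text is assembled. The Python sketch below builds each kind of prompt as a plain string; the function names, the sentiment task, and the example pairs are hypothetical, and the step-by-step cue is one common wording rather than a required phrase. Sending the strings to an actual model is left out.

```python
# Sketch: zero-shot, few-shot, and chain-of-thought prompts differ only in
# how the prompt string is assembled. The task and examples are hypothetical.

EXAMPLES = [
    ("The food was cold and bland.", "negative"),
    ("Friendly staff and great coffee!", "positive"),
]


def few_shot(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Instruction, then worked input/output pairs, then the new input."""
    shots = "".join(f"Input: {x}\nOutput: {y}\n\n" for x, y in examples)
    return f"{instruction}\n\n{shots}Input: {query}\nOutput:"


def zero_shot(instruction: str, query: str) -> str:
    """Same layout with no examples: the model relies on what it already knows."""
    return few_shot(instruction, [], query)


def chain_of_thought(question: str) -> str:
    """Ask for intermediate reasoning before the final answer."""
    return f"{question}\nLet's think step by step, then state the final answer on its own line."


if __name__ == "__main__":
    task = "Classify the sentiment of the input as positive or negative."
    print(zero_shot(task, "The service was slow."))
    print("---")
    print(few_shot(task, EXAMPLES, "The service was slow."))
    print("---")
    print(chain_of_thought("A cafe sells 3 coffees at $4 and 2 muffins at $3. What is the total?"))
```

Defining zero-shot as few-shot with an empty example list makes the point of the comparison explicit: the only difference is whether a template is shown.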

Why is prompt engineering important for AI systems?

Prompt engineering is the discipline of designing and refining prompts. It’s important because large language models are not mind readers. A poorly structured prompt leads to:

- Generic or vague answers.

- Factual errors (hallucinations).

- Responses that ignore your constraints.

- Unsafe or biased outputs.

Effective prompt engineering makes AI output more reliable, accurate, and aligned with the user’s intent. You can see it at work in tools like GitHub Copilot, which turns code comments into functional code. Marketing platforms like Jasper build their products around structured prompt templates that help users get high-quality, specific content.

What makes a prompt effective?

An effective prompt is a clear, complete briefing for your AI. It typically includes:

- Role: Assign a role, e.g., “You are an expert copywriter.”

- Task: Define the task clearly, e.g., “Write three headlines for a new brand of coffee.”

- Context: Provide the necessary background, e.g., “The brand is focused on sustainability and ethical sourcing.”

- Examples: Show what good and bad output look like, e.g., “Good headline: ‘Sip Sustainably.’ Bad headline: ‘Get Your Caffeine Fix.’”

- Constraints & Format: Define constraints for output, e.g., “Each headline must be under 10 words. Provide the output as a numbered list.”

Clarity, context, and constraints are your best friends.
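As a rough illustration, the five elements above can be assembled into a single prompt with a small helper. The `build_prompt` function and its section labels (“Task:”, “Context:”, and so on) are hypothetical; there is no single required layout, but keeping the elements in a fixed order makes prompts easier to review and reuse.

```python
# Hypothetical helper: assemble the five elements of an effective prompt
# (role, task, context, examples, constraints) into one string.

def build_prompt(role: str, task: str, context: str,
                 examples: list[str], constraints: list[str]) -> str:
    parts = [role, f"Task: {task}", f"Context: {context}"]
    if examples:  # optional: good/bad samples the model can imitate or avoid
        parts.append("Examples:\n" + "\n".join(f"- {e}" for e in examples))
    if constraints:  # optional: limits on length, format, tone, etc.
        parts.append("Constraints and format:\n" + "\n".join(f"- {c}" for c in constraints))
    return "\n\n".join(parts)


prompt = build_prompt(
    role="You are an expert copywriter.",
    task="Write three headlines for a new brand of coffee.",
    context="The brand is focused on sustainability and ethical sourcing.",
    examples=["Good headline: 'Sip Sustainably.'",
              "Bad headline: 'Get Your Caffeine Fix.'"],
    constraints=["Each headline must be under 10 words.",
                 "Provide the output as a numbered list."],
)
print(prompt)
```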

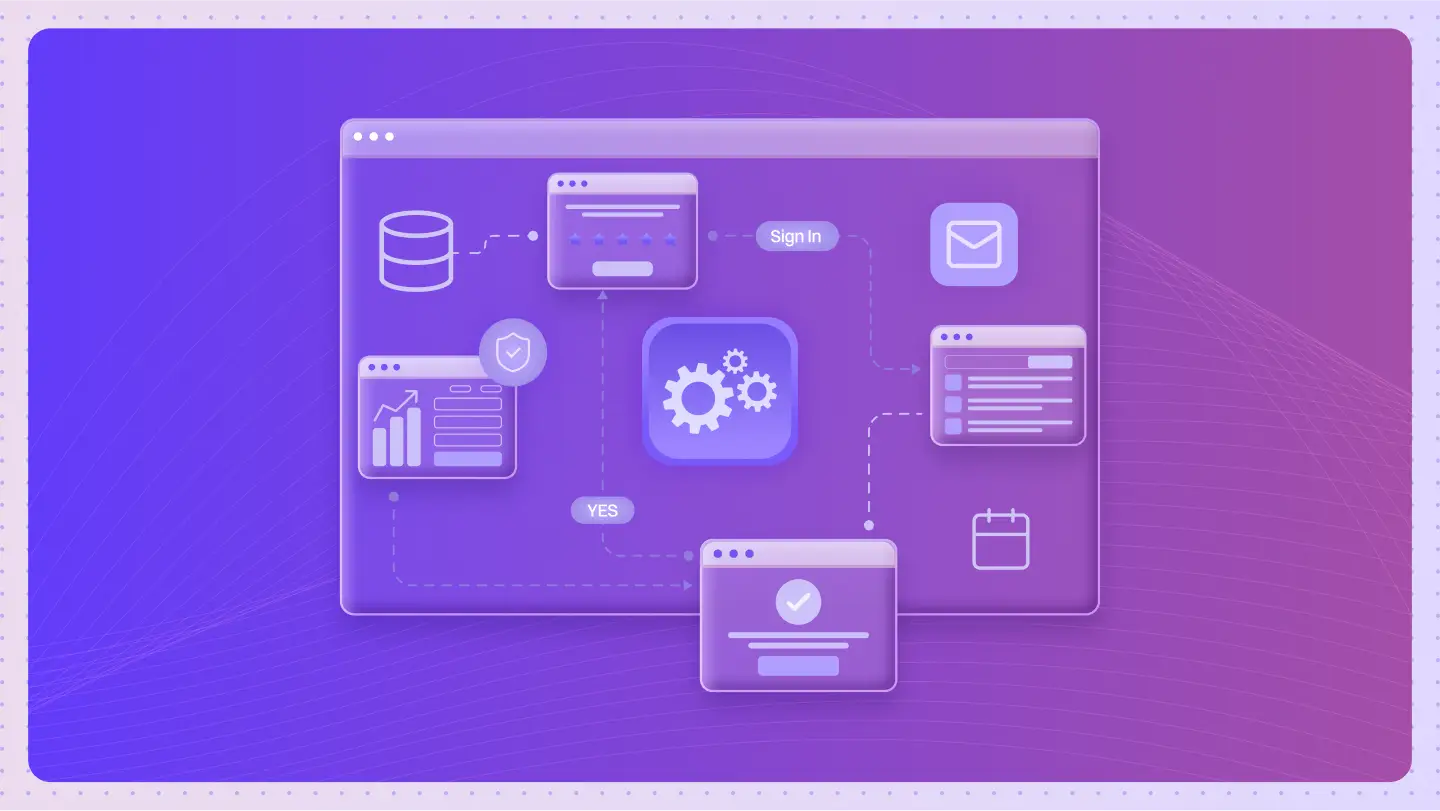

How is prompting used in AI agents specifically?

For an AI agent, a prompt isn’t just a one-off query. It’s the core of its “thought” process. An agent uses a master prompt or a series of prompts to:

- Decompose a Goal: Break a complex task into smaller, manageable sub-tasks.

- Select a Tool: Decide which tool to use next based on the sub-task.

- Reason and Reflect: Evaluate the results of its actions and decide if it’s closer to its goal or if it needs to adjust its plan.

The agent’s internal monologue is essentially a series of self-generated prompts guiding its actions.
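The loop described above can be sketched in a few lines. This is a toy under stated assumptions: `fake_llm` is a hypothetical keyword-matching stand-in for a real model call, `decompose` splits the goal on the word “then” instead of asking a model to plan, and the two tools are placeholders. What it does show is the structure: each step writes a fresh prompt containing the goal, the available tools, and earlier observations, and the “model” answers with the tool to use.

```python
from typing import Callable

# Hypothetical toy tools standing in for real ones (search, code execution, APIs).
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "uppercase": lambda text: text.upper(),
}


def decompose(goal: str) -> list[str]:
    # Hypothetical: a real agent would ask the LLM to split the goal into sub-tasks.
    return [step.strip() for step in goal.split(" then ")]


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: chooses a tool by keyword.
    # A real agent would send `prompt` to an LLM and parse its reply.
    subtask = prompt.rsplit("Sub-task:", 1)[-1].lower()
    return "word_count" if "count" in subtask else "uppercase"


def run_agent(goal: str) -> list[str]:
    observations: list[str] = []
    for subtask in decompose(goal):                       # 1. decompose the goal
        instruction, _, payload = subtask.partition(":")
        prompt = (                                         # self-generated prompt for this step
            f"Goal: {goal}\n"
            f"Tools: {', '.join(TOOLS)}\n"
            f"Observations so far: {observations}\n"
            f"Sub-task: {instruction}"
        )
        tool = fake_llm(prompt)                            # 2. select a tool
        if tool not in TOOLS:                              # 3. reflect (minimal): reject bad choices
            observations.append(f"{instruction}: unknown tool {tool!r}, skipped")
            continue
        result = TOOLS[tool](payload.strip())
        observations.append(f"{instruction} -> {tool} -> {result}")  # feed into the next prompt
    return observations


print(run_agent("count words: the quick brown fox then shout: done"))
```

A real agent would replace each hypothetical piece with a model call and would use the observations to revise its plan, not just record them.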

What are the limitations of prompting?

Prompting is powerful, but not perfect.

- Brittleness: A tiny change in wording can sometimes lead to a wildly different output.

- Lack of Deep Control: You’re steering a massive, pre-existing model, not programming it from scratch. There are limits to what you can force it to do.

- Prompt Injection: Malicious users can craft prompts that trick an AI into ignoring its original instructions or revealing sensitive information.

- Consistency: Getting the exact same output format every single time can be a challenge.
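One common way to cope with inconsistent output formats is to ask for JSON, validate the reply in code, and re-prompt when validation fails. The sketch below assumes a hypothetical `model` callable that takes a prompt string and returns text; the required keys and the retry wording are illustrative, and `make_fake_model` only simulates a model that gets the format wrong once.

```python
import json
from typing import Callable, Iterator, Optional

REQUIRED_KEYS = {"headline", "word_count"}  # hypothetical output schema


def parse_reply(reply: str) -> Optional[dict]:
    """Return the parsed reply if it is a JSON object with the required keys, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None
    return data


def ask_with_validation(model: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Call the model, validate the format, and re-prompt with a reminder on failure."""
    for _ in range(max_attempts):
        data = parse_reply(model(prompt))
        if data is not None:
            return data
        prompt += ("\n\nYour previous reply was not in the required format. "
                   "Reply with a JSON object only, with keys: headline, word_count.")
    raise ValueError(f"no valid reply after {max_attempts} attempts")


def make_fake_model() -> Callable[[str], str]:
    """Hypothetical stand-in for a real model: wrong format first, valid JSON second."""
    replies: Iterator[str] = iter([
        "Sure! Here is a headline: Sip Sustainably",
        '{"headline": "Sip Sustainably", "word_count": 2}',
    ])
    return lambda prompt: next(replies)


result = ask_with_validation(make_fake_model(), "Write one coffee headline as JSON.")
print(result)
```

Validation does not make the model consistent, but it turns a silent formatting failure into a retry or an explicit error.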

What technical frameworks enhance prompting?

Advanced prompting isn’t just about asking simple questions; it’s about using structured frameworks that help the AI reason more robustly and stay grounded in facts.

- Chain-of-Thought (CoT): Improves performance on reasoning tasks by asking the AI to think step-by-step.

- Few-Shot Prompting: Provides examples that help the AI recognize the pattern you want.

- Retrieval-Augmented Generation (RAG): Combines prompting with external knowledge retrieval, adding relevant documents to the prompt so that responses are grounded in trusted information sources.
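A minimal RAG pipeline retrieves relevant passages and places them in the prompt, along with an instruction to answer only from them. In this sketch the documents are hypothetical, and simple word overlap stands in for the embedding-based vector search that production RAG systems typically use.

```python
import re

# Hypothetical knowledge base; a real system would index many documents.
DOCS = [
    "Our coffee beans are Fairtrade certified and sourced from cooperatives in Peru.",
    "The store opens at 7 a.m. on weekdays and 8 a.m. on weekends.",
    "All packaging is compostable.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Insert the retrieved passages into the prompt and restrict the answer to them."""
    sources = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


print(build_rag_prompt("Where are the coffee beans sourced from?", DOCS))
```

The instruction to admit when the sources lack an answer is what ties retrieval to grounding: without it, the model may fall back on its own, possibly outdated, knowledge.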

The ability to ask the right questions in the right way is a fundamental skill for unlocking the true potential of AI systems.