What are Precision and Recall?

Precision and Recall are two fundamental metrics used to evaluate the performance of classification models, especially in machine learning tasks. These metrics focus on the accuracy and completeness of a model’s positive predictions:

- Precision: Measures how many of the predicted positives are truly positive. It evaluates the model’s ability to avoid false positives.

- Recall: Measures how many of the actual positives are correctly identified by the model. It assesses the model’s ability to avoid false negatives.

Balancing these metrics is critical for reliable model performance, particularly in applications where false positives and false negatives carry different costs.

How Do Precision and Recall Work?

Precision and Recall are computed from the results of a binary classification, where every prediction falls into one of four categories:

- True Positives (TP): Correctly predicted positive instances.

- False Positives (FP): Instances incorrectly predicted as positive.

- True Negatives (TN): Correctly predicted negative instances.

- False Negatives (FN): Instances incorrectly predicted as negative.

Formulas:

- Precision: $\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}$. Precision answers the question: “Of all the positive predictions, how many are actually positive?”

- Recall (Sensitivity): $\text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}}$. Recall answers the question: “Of all the actual positive instances, how many did the model correctly identify?”
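
The two formulas can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function names, the toy labels, and the convention of returning 0.0 when a denominator is zero are all assumptions made for this example.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def precision(tp, fp):
    # Assumed convention: 0.0 when the model predicted no positives at all.
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    # Assumed convention: 0.0 when the data contains no actual positives.
    return tp / (tp + fn) if (tp + fn) else 0.0


# Toy labels made up for this example.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)                     # 3 1 5 1
print(precision(tp, fp), recall(tp, fn))  # 0.75 0.75
```

Note that TN does not appear in either formula, so correctly rejected negatives have no effect on Precision or Recall.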

Balancing Precision and Recall:

- High Precision: Reduces false positives but may result in lower Recall, potentially missing some true positives.

- High Recall: Reduces false negatives but may increase false positives.
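
The trade-off is easiest to see by moving the decision threshold of a model that outputs scores. The sketch below assumes a hypothetical model whose scores are already available; the labels, scores, and the `precision_recall_at` helper are invented for illustration.

```python
def precision_recall_at(threshold, y_true, scores):
    """Precision and recall when every score >= threshold is predicted positive."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 0)
    prec = tp / (tp + fp) if (tp + fp) else 0.0
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    return prec, rec


# Toy data made up for this example: true labels and model scores.
y_true = [1, 1, 0, 1, 0, 1, 0, 0]
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.20, 0.10]

for threshold in (0.8, 0.5, 0.3):
    prec, rec = precision_recall_at(threshold, y_true, scores)
    print(f"threshold={threshold:.1f} precision={prec:.2f} recall={rec:.2f}")
# threshold=0.8 precision=1.00 recall=0.50
# threshold=0.5 precision=0.75 recall=0.75
# threshold=0.3 precision=0.67 recall=1.00
```

On this toy data, lowering the threshold recovers every positive but lets in more false positives, while raising it does the opposite.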

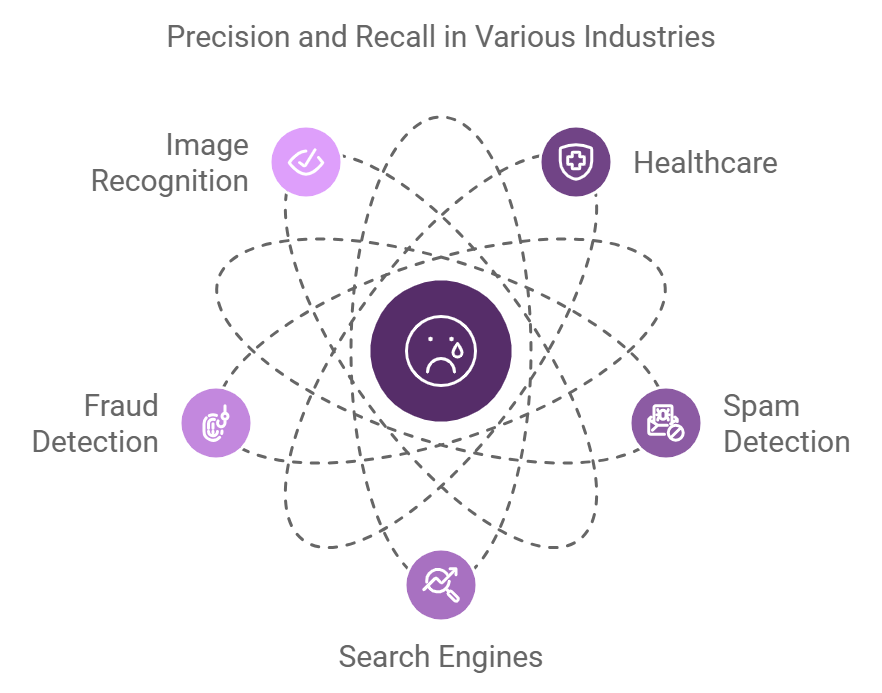

Applications of Precision and Recall

Precision and Recall are widely used across various industries to evaluate model training and performance in critical tasks:

- Healthcare:
  - Use Case: Diagnosing diseases or detecting medical anomalies.
  - Focus: High Recall to minimize missed diagnoses, as missing a true positive could be life-threatening.

- Spam Detection:
  - Use Case: Filtering spam emails.
  - Focus: High Precision to avoid misclassifying legitimate emails as spam.

- Search Engines:
  - Use Case: Returning relevant results for user queries.
  - Focus: Balancing Precision and Recall to ensure accurate and comprehensive search results.

- Fraud Detection:
  - Use Case: Identifying fraudulent transactions.
  - Focus: High Recall so that as few fraudulent transactions as possible go unflagged.

- Image Recognition:
  - Use Case: Detecting objects in images.
  - Focus: Balancing Precision and Recall to avoid missing objects while minimizing false detections.

Advantages of Using Precision and Recall

Precision and Recall offer several advantages for evaluating model performance:

- Granular Insights:
  - Provide a detailed understanding of the types of errors (false positives and false negatives) in the model.

- Applicability to Imbalanced Datasets:
  - Unlike accuracy, Precision and Recall remain effective metrics when dealing with datasets with unequal class distributions.

- Context-Aware Evaluation:
  - Enable model optimization based on the specific priorities of the application (e.g., prioritizing Recall in critical medical diagnoses).

- Foundation for Other Metrics:
  - Serve as the basis for metrics like the F1 Score, which combines Precision and Recall into a single measure.
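
The point about imbalanced datasets can be made concrete with a small sketch. The data below is hypothetical (1,000 samples with a 1% positive rate), and the "model" is a deliberately useless one that always predicts the negative class.

```python
# Hypothetical data: 1,000 samples, only 10 of them positive.
y_true = [1] * 10 + [0] * 990
# A degenerate "model" that always predicts the negative class.
y_pred = [0] * 1000

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

acc = (tp + tn) / len(y_true)
# Assumed convention: report 0.0 when a denominator is zero.
prec = tp / (tp + fp) if (tp + fp) else 0.0
rec = tp / (tp + fn) if (tp + fn) else 0.0

print(acc, prec, rec)  # 0.99 0.0 0.0
```

Accuracy looks excellent at 99%, yet Recall shows that the model finds none of the positive cases.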

Challenges and Limitations

Despite their utility, Precision and Recall come with some challenges:

- Trade-Offs:
  - Increasing Precision often reduces Recall, and vice versa. Finding the right balance depends on the problem context.

- Class Imbalance:
  - Precision depends on how common the positive class is, so values are not directly comparable across datasets with different class ratios. Neither metric accounts for true negatives.

- Threshold Dependence:
  - Precision and Recall vary based on the decision threshold, requiring careful tuning for optimal results.

- No Single Metric:
  - Neither metric alone gives a complete picture of model performance, so they are usually reported together and alongside complementary metrics like F1 Score or ROC-AUC.

Real-Life Example: Fraud Detection in Finance

A financial institution implemented a classification model to detect fraudulent transactions:

- Objective: Minimize false negatives (missed fraud cases) while controlling false positives (legitimate transactions flagged as fraud).

- Results:
  - By optimizing for high Recall, the institution reduced missed fraud cases by 40%.
  - Using Precision as a secondary focus, they maintained customer satisfaction by minimizing false alarms.

This example illustrates how balancing Precision and Recall can enhance the effectiveness of critical applications.

Precision and Recall vs. F1 Score

| Aspect | Precision & Recall | F1 Score |
|---|---|---|
| Focus | Provide separate insights into false positives and false negatives | Combines Precision and Recall into a single metric |
| Use Case | Useful for understanding trade-offs | Ideal for summarizing performance |
| Interpretation | Requires analysis of both metrics | Provides a quick overview |
| Threshold Sensitivity | Both change as the decision threshold moves | Computed at a single threshold, so it also changes with the threshold |

While Precision and Recall offer detailed insights, the F1 Score is helpful when a single performance metric is required.
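
The F1 Score is the harmonic mean of Precision and Recall, $F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$. A minimal sketch follows; the function name and the zero-denominator convention are assumptions made for this example.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 if both are zero (assumed convention)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(f1_score(0.75, 0.75))           # 0.75
print(round(f1_score(1.0, 0.5), 2))   # 0.67
print(round(f1_score(0.9, 0.1), 2))   # 0.18
```

Because it is a harmonic mean, the F1 Score stays low whenever either metric is low: Precision 0.9 with Recall 0.1 gives 0.18, while a simple average would give 0.5.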

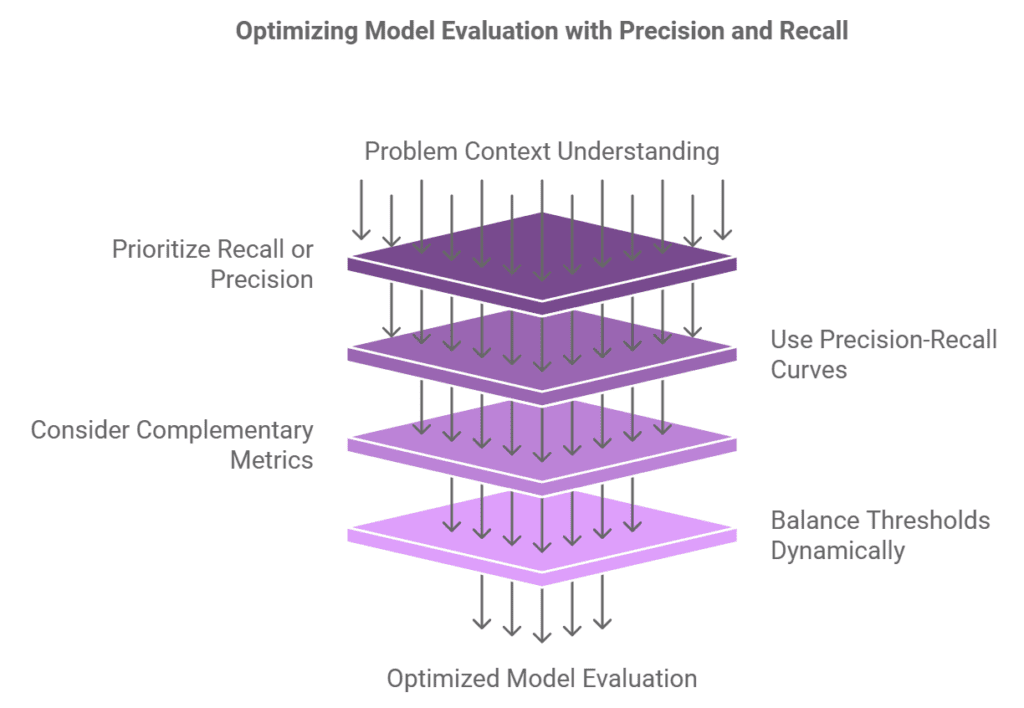

Best Practices for Using Precision and Recall

To effectively leverage Precision and Recall in model evaluation:

- Understand the Problem Context:
  - Prioritize Recall in safety-critical applications, and Precision in scenarios where false positives are costly.

- Use Precision-Recall Curves:
  - Visualize the trade-off between Precision and Recall across thresholds to determine the optimal balance.

- Consider Complementary Metrics:
  - Combine with metrics like the F1 Score or ROC-AUC for a holistic view of model performance.

- Balance Thresholds Dynamically:
  - Adjust decision thresholds to align with specific business or operational goals.
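
One way to turn an operational goal into a threshold is to require a minimum Recall and then pick the candidate threshold with the best Precision. The sketch below is one possible approach under that assumption, not a standard procedure; `pick_threshold`, the `min_recall` parameter, and the toy data are hypothetical.

```python
def pick_threshold(y_true, scores, min_recall):
    """Return (threshold, precision, recall) with the highest precision among
    candidate thresholds whose recall is at least min_recall, or None."""
    total_pos = sum(y_true)
    best = None
    # Every distinct score is a candidate; predict positive when score >= t.
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        prec = tp / (tp + fp)  # tp + fp >= 1: at least one score equals t
        rec = tp / total_pos if total_pos else 0.0
        if rec >= min_recall and (best is None or prec > best[1]):
            best = (t, prec, rec)
    return best


# Toy data made up for this example: true labels and model scores.
y_true = [1, 1, 0, 1, 0, 1, 0, 0]
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.20, 0.10]

print(pick_threshold(y_true, scores, min_recall=0.75))  # (0.6, 0.75, 0.75)
```

In practice the threshold would be chosen on a validation set rather than on the data used for the final evaluation.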

Future Trends in Precision and Recall

The role of Precision and Recall in AI and machine learning will continue to evolve, with emerging trends including:

- Advanced Evaluation Techniques:
  - Integration with contextual metrics to address class imbalance and varying error costs.

- Automated Threshold Optimization:
  - AI-driven tools that dynamically adjust thresholds based on real-time data.

- Domain-Specific Metrics:
  - Development of custom metrics tailored for specific industries or applications.

Conclusion: Mastering Precision and Recall for Better Models

Precision and Recall are indispensable tools for evaluating and fine-tuning classification models. By understanding and balancing these metrics, data scientists and machine learning engineers can build models that align with the unique needs of their applications.

Whether you’re developing a spam filter, fraud detection system, or medical diagnostic tool, a solid grasp of Precision and Recall helps you build reliable, accurate, and impactful AI solutions.