What is Model Deployment?

Model deployment is the process of integrating a machine learning model into a production environment, where it makes predictions on real-world data and informs decisions. This phase operationalizes the model: it must be reachable by the systems that consume it, behave correctly, and be monitored continuously for performance.

By employing effective model deployment strategies, organizations can seamlessly integrate machine learning into their workflows, leveraging predictive capabilities to improve efficiency and outcomes.

How Does Model Deployment Work?

Model deployment transforms a trained machine learning model into a usable asset by enabling it to interact with production systems and real-time data. Here’s how the process unfolds:

- Preparation:
  - Validate the model’s performance using test data to ensure accuracy and reliability.
  - Optimize the model for deployment by reducing latency and ensuring scalability.
- Environment Setup:
  - Select an appropriate deployment environment:
    - Cloud-Based Deployment: For scalability and flexibility.
    - On-Premises Deployment: For security and compliance.
    - Edge Deployment: For real-time processing on devices like IoT sensors.
- Deployment Strategy:
  - Choose strategies like A/B testing, canary releases, or blue-green deployment to minimize risk during rollout.
- Integration:
  - Integrate the model with existing systems using APIs or custom middleware for seamless data flow and user interaction.
- Monitoring and Maintenance:
  - Implement monitoring tools to track performance metrics such as latency, accuracy, and throughput.
  - Detect model drift and retrain the model as needed to maintain performance.
- Scaling:
  - Use deployment tools for load balancing and autoscaling to handle varying traffic levels efficiently.

This systematic approach ensures that machine learning models not only function optimally but also deliver sustained value over time.
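To make the deployment-strategy step more concrete, here is a minimal sketch of how a canary release might split traffic. It assumes each request carries a stable identifier. The function name `route_request` and the labels `"canary"` and `"stable"` are illustrative, not taken from any particular tool.

```python
import hashlib


def route_request(request_id: str, canary_percent: float) -> str:
    """Return "canary" or "stable" for a request, deterministically.

    Hypothetical helper: hashing the ID keeps a given caller on the same
    model version for the whole rollout, which makes comparisons cleaner.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in [0, 9999]
    # canary_percent=5.0 sends buckets 0..499 (about 5%) to the canary.
    return "canary" if bucket < canary_percent * 100 else "stable"


# Usage: estimate the share of simulated users routed to the canary.
ids = [f"user-{i}" for i in range(10_000)]
share = sum(route_request(i, 5.0) == "canary" for i in ids) / len(ids)
print(f"canary share: {share:.3f}")
```

Raising `canary_percent` in steps while watching error and accuracy metrics is one common way to run such a rollout. Setting it back to 0 returns all traffic to the stable version.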

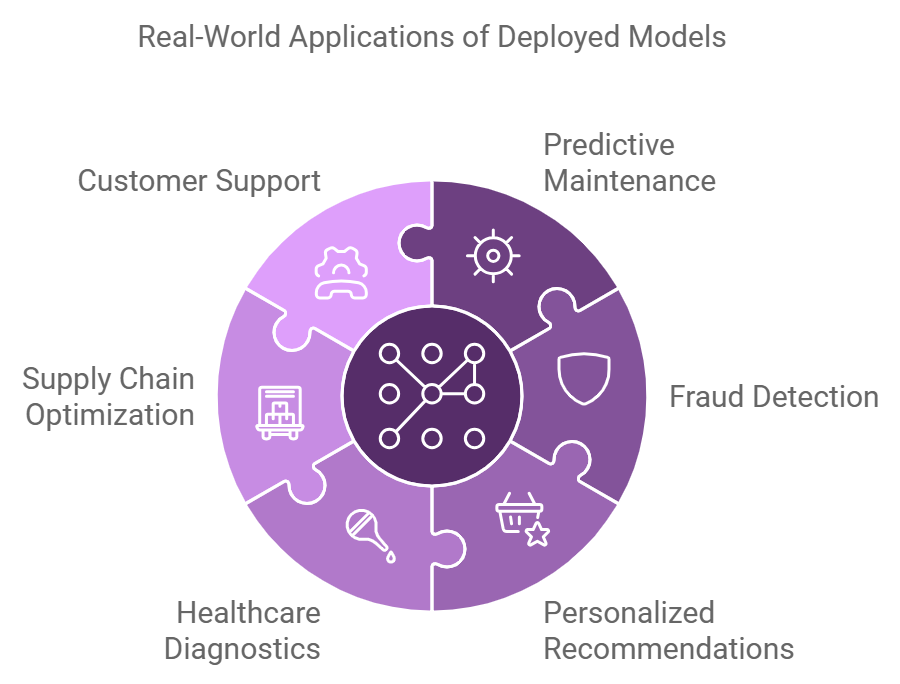

Applications of Model Deployment

Model deployment is foundational to operationalizing machine learning across industries. Here are some real-world applications:

- Predictive Maintenance:
  - In manufacturing, deployed models predict equipment failures, reducing downtime and maintenance costs.
- Fraud Detection:
  - Financial institutions deploy models to analyze transactions in real time, identifying suspicious activities.
- Personalized Recommendations:
  - E-commerce platforms use deployed models to suggest products or services based on user behavior.
- Healthcare Diagnostics:
  - Deployed models assist in diagnosing diseases by analyzing medical imaging and patient data.
- Supply Chain Optimization:
  - Retailers leverage deployed models for demand forecasting and inventory management.
- Customer Support:
  - AI-driven chatbots powered by deployed models provide instant responses to user queries.

Through effective deployment, machine learning models can deliver actionable insights and drive impactful outcomes across these domains.

Benefits of Model Deployment

The deployment of machine learning models provides organizations with a range of advantages, including:

- Seamless Integration:
  - Ensures smooth interaction between models and existing systems.
- Scalability:
  - Supports dynamic scaling to handle increased traffic and workloads.
- Faster Time to Market:
  - Reduces delays in transitioning from development to production, accelerating value realization.
- Operational Efficiency:
  - Automates model training, monitoring, and maintenance, freeing up resources for other tasks.
- Improved Collaboration:
  - Enhances coordination between data scientists, developers, and IT teams.
- Real-Time Insights:
  - Provides timely predictions and decisions, crucial for industries like finance and healthcare.

By employing robust deployment tools and strategies, organizations can maximize the performance and reliability of their machine learning models.
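As an illustration of the dynamic scaling mentioned above, the sketch below computes a target replica count from an observed load metric. It is modeled on the formula documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`. The function name, the replica bounds, and the example numbers are assumptions made for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Suggest how many model-server replicas to run (illustrative only)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Skip scaling when load is close to target, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(wanted, max_replicas))


# Usage: 4 replicas each seeing 150 requests/s against a target of 100.
print(desired_replicas(4, current_metric=150, target_metric=100))
```

The metric could be requests per second, CPU utilization, or queue depth. Which one tracks model-serving load best depends on the workload.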

Challenges of Model Deployment

Despite its importance, model deployment comes with challenges:

- Integration Complexity:
  - Models must be adapted to work with diverse systems and data pipelines.
- Model Drift:
  - Over time, models may lose accuracy as data distributions change, requiring retraining.
- Infrastructure Demands:
  - Ensuring the infrastructure meets the computational requirements of the model can be resource-intensive.
- Monitoring and Maintenance:
  - Continuous monitoring is essential to detect issues like latency, errors, or reduced accuracy.

Addressing these challenges involves proactive planning, effective communication between teams, and the use of automation wherever possible.
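One way to automate the drift check described above is to compare the distribution of a feature in production with its distribution at training time. The sketch below uses the Population Stability Index (PSI) as an example metric. The source does not prescribe a method, so the choice of PSI, the bin count, and the synthetic data are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a training-time sample and a production sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("expected sample has no spread to bin")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp outliers into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Usage with synthetic data: the "production" sample is shifted by 3 units.
training = [i / 100 for i in range(1000)]
production = [v + 3 for v in training]
print(f"PSI: {population_stability_index(training, production):.2f}")
```

A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be calibrated per feature before they are used to trigger retraining.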

Real-Life Example: Fraud Detection in Banking

A leading financial institution successfully deployed machine learning models for fraud detection:

- Challenge: Real-time monitoring of millions of transactions to identify fraudulent activity.
- Solution: Deploying a trained model integrated with transaction systems.
- Outcome:
  - Reduced false positives by 40%.
  - Improved detection rates, saving millions in fraud-related losses.

This demonstrates how effective model deployment can enhance security and operational efficiency.
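The institution's actual integration is not described, but a scoring endpoint of this kind often reduces to a small handler that validates a JSON payload, calls the model, and returns a decision. The following is a hypothetical sketch: the field names, the `stub_model` scoring rule, and the 0.8 threshold are invented for illustration and stand in for a real trained model.

```python
import json
from typing import Callable, Dict, Tuple

REQUIRED_FIELDS = ("amount", "merchant_risk")  # hypothetical feature names


def stub_model(features: Dict[str, float]) -> float:
    """Placeholder for a trained model; returns a made-up score in [0, 1]."""
    amount_part = 0.5 * min(features["amount"] / 10_000, 1.0)
    score = amount_part + 0.5 * features["merchant_risk"]
    return max(0.0, min(score, 1.0))


def handle_request(
    body: bytes,
    model: Callable[[Dict[str, float]], float] = stub_model,
    threshold: float = 0.8,
) -> Tuple[int, str]:
    """Return (HTTP-style status code, JSON response body)."""
    try:
        payload = json.loads(body)
        features = {name: float(payload[name]) for name in REQUIRED_FIELDS}
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing field, or a non-numeric value.
        message = "expected numeric fields: " + ", ".join(REQUIRED_FIELDS)
        return 400, json.dumps({"error": message})
    score = model(features)
    result = {"fraud_score": round(score, 4), "flagged": score >= threshold}
    return 200, json.dumps(result)


# Usage: score one transaction payload.
print(handle_request(b'{"amount": 9000, "merchant_risk": 0.9}'))
```

Keeping the handler independent of any web framework makes it easy to test and to mount behind whatever API layer the transaction system already uses.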

Model Deployment vs. Traditional Software Deployment

| Aspect | Model Deployment | Traditional Software Deployment |
|---|---|---|
| Focus | Operationalizing machine learning models | Delivering functional software applications |
| Key Components | Monitoring, retraining, scaling | Testing, version control, rollbacks |
| Performance Monitoring | Continuous evaluation of model accuracy and drift | Standard application performance metrics |
| Adaptability | Requires regular updates for evolving data | Relatively static unless new features are added |

Model deployment is uniquely centered on maintaining and improving decision-making capabilities over time.
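The "continuous evaluation of model accuracy" row is what most clearly separates the two columns: a service can be healthy by uptime and latency while its predictions quietly degrade. A minimal sketch of such a check, assuming ground-truth labels eventually arrive for served predictions, might look like this. The class name `RollingAccuracy`, the window size, and the alert threshold are hypothetical.

```python
from collections import deque
from typing import Optional


class RollingAccuracy:
    """Track accuracy over the most recent labelled predictions."""

    def __init__(self, window: int = 500, alert_below: float = 0.9) -> None:
        # Each entry is True where the prediction matched the label.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below

    def record(self, prediction, label) -> None:
        self.outcomes.append(prediction == label)

    @property
    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None  # nothing labelled yet
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        # Only alert once the window is full, to avoid noisy early alarms.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.alert_below


# Usage: four correct predictions followed by two misses, window of four.
monitor = RollingAccuracy(window=4, alert_below=0.75)
for pred, label in [(1, 1), (0, 0), (1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(pred, label)
print(monitor.accuracy, monitor.degraded())
```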

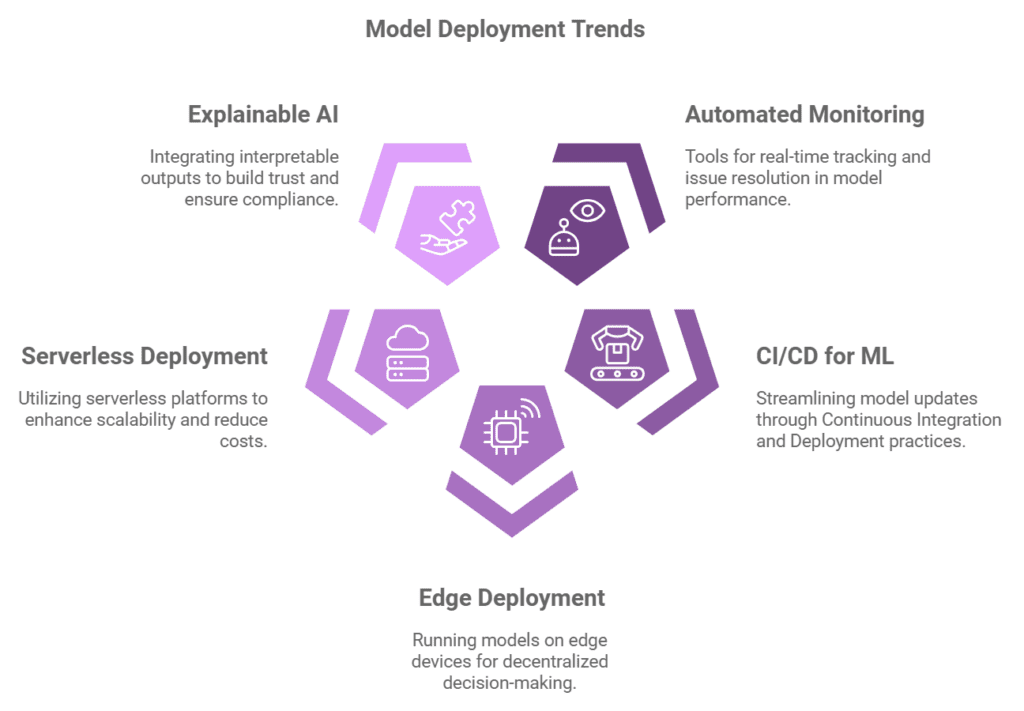

Future Trends in Model Deployment

The field of model deployment is rapidly evolving, with several emerging trends:

- Automated Monitoring Tools:
  - Advanced tools for real-time performance tracking and automated issue resolution.
- CI/CD for ML Models:
  - Incorporating Continuous Integration/Continuous Deployment practices to streamline updates.
- Edge Deployment:
  - Running models on edge devices for faster, decentralized decision-making.
- Serverless Deployment:
  - Leveraging serverless platforms to reduce costs and increase scalability.
- Explainable AI Integration:
  - Ensuring deployed models provide interpretable outputs to enhance trust and compliance.
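In a CI/CD pipeline for ML models, one typical step is an automated gate that decides whether a newly trained candidate may replace the production model. The sketch below shows one possible gate. The metric names (`accuracy`, `p95_latency_ms`) and the default thresholds are assumptions, and a real pipeline would usually check more than two metrics.

```python
from typing import Mapping


def should_promote(
    candidate: Mapping[str, float],
    production: Mapping[str, float],
    min_accuracy_gain: float = 0.0,
    max_latency_ms: float = 100.0,
) -> bool:
    """Return True if the candidate model passes the (illustrative) gate."""
    # The candidate must be at least as accurate as production, plus a margin.
    required = production["accuracy"] + min_accuracy_gain
    accuracy_ok = candidate["accuracy"] >= required
    # It must also stay within the latency budget.
    latency_ok = candidate["p95_latency_ms"] <= max_latency_ms
    return accuracy_ok and latency_ok


# Usage with made-up evaluation results.
prod = {"accuracy": 0.91, "p95_latency_ms": 40.0}
cand = {"accuracy": 0.93, "p95_latency_ms": 55.0}
print(should_promote(cand, prod))
```

Running a check like this on every retraining job turns "streamlined updates" into a repeatable decision rather than a manual review.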

Best Practices for Effective Model Deployment

To ensure successful deployment, follow these best practices:

- Use Version Control:
  - Track changes in model versions to ensure reproducibility and rollback capability.
- Implement Continuous Monitoring:
  - Monitor performance metrics like accuracy, latency, and resource utilization.
- Select the Right Deployment Tools:
  - Use tools like Docker, Kubernetes, or TensorFlow Serving for efficient model management.
- Collaborate Across Teams:
  - Foster communication between data scientists, DevOps engineers, and IT teams.
- Document Deployment Workflows:
  - Maintain clear documentation for processes, dependencies, and troubleshooting.

By adopting these practices, organizations can achieve seamless and efficient model deployment.
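To illustrate the version-control practice, here is a minimal in-memory sketch of a model registry that records which version is live and supports rollback. The class `ModelRegistry` and the artifact URIs are hypothetical. Production setups typically rely on a dedicated registry service rather than code like this.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Toy registry: maps versions to artifacts and tracks promotions."""

    artifacts: Dict[str, str] = field(default_factory=dict)  # version -> URI
    history: List[str] = field(default_factory=list)  # promotions, oldest first

    def register(self, version: str, artifact_uri: str) -> None:
        if version in self.artifacts:
            # Versions are immutable so a given version is reproducible.
            raise ValueError(f"version {version!r} is already registered")
        self.artifacts[version] = artifact_uri

    def promote(self, version: str) -> None:
        if version not in self.artifacts:
            raise KeyError(version)
        self.history.append(version)

    @property
    def live(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()  # drop the current live version
        return self.history[-1]


# Usage: promote two versions, then roll back to the first.
registry = ModelRegistry()
registry.register("v1", "models/fraud/v1.bin")  # made-up artifact paths
registry.register("v2", "models/fraud/v2.bin")
registry.promote("v1")
registry.promote("v2")
print(registry.rollback(), registry.live)
```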

Conclusion: Unlocking the Value of Machine Learning with Model Deployment

Model deployment is the bridge between machine learning development and real-world applications. With the right strategies and tools, organizations can operationalize their models and deliver timely, actionable insights.

For DevOps engineers, data scientists, and machine learning engineers, mastering model deployment is crucial for driving business success and maximizing the impact of AI solutions.