What is Learning Rate?

The learning rate is a hyperparameter of the optimizer that determines the step size at each iteration during model training. It controls how much the model’s weights are adjusted in response to the gradient of the loss function.

In simpler terms, the learning rate dictates how quickly a machine learning model “learns.” Setting it correctly is essential for good performance, because it balances fast convergence against training stability.

Why is Learning Rate Important in Model Training?

In the context of model training, the learning rate directly influences:

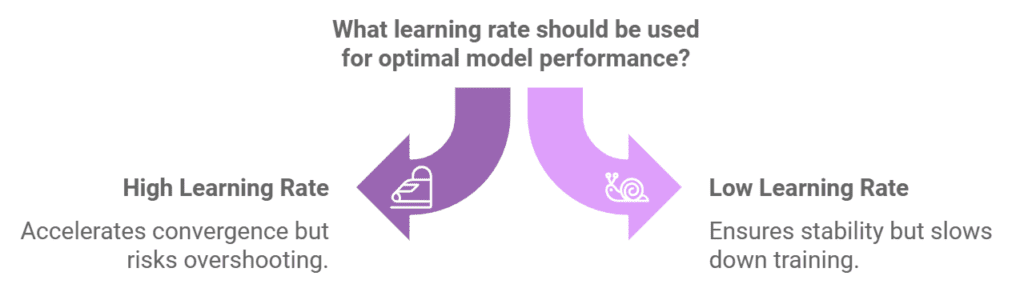

- Convergence Speed: A high learning rate might speed up convergence but risks overshooting the optimal solution. A low learning rate ensures stability but can make training sluggish.

- Optimization Efficiency: It determines how efficiently the model minimizes the loss function.

- Generalization: The learning rate impacts how well the model generalizes to unseen data, helping to avoid overfitting or underfitting.

By fine-tuning the learning rate, data scientists and engineers can optimize the performance of their models.

How Does Learning Rate Function in Training?

The learning rate operates as part of optimization algorithms, such as Stochastic Gradient Descent (SGD), Adam, and RMSprop. Here’s a step-by-step overview of its role:

- Gradient Computation: The algorithm calculates the gradient of the loss function with respect to the model’s weights.

- Weight Update: The learning rate scales the computed gradient, determining how much to adjust the weights.

- Iteration: This process repeats for each training step, guiding the model toward the optimal solution.
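The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production training loop: the toy loss `(w - 3)**2`, the starting weight, and the names `loss`, `grad`, and `gradient_descent` are all assumptions chosen for the example.

```python
def loss(w):
    """Toy quadratic loss (an assumption for illustration); minimum at w = 3."""
    return (w - 3.0) ** 2


def grad(w):
    """Analytic gradient of the toy loss with respect to the weight w."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0, learning_rate, steps):
    """Run plain gradient descent and return the final weight."""
    w = w0
    for _ in range(steps):             # 3. iteration: repeat for each training step
        g = grad(w)                    # 1. gradient computation
        w = w - learning_rate * g      # 2. weight update, scaled by the learning rate
    return w


w_final = gradient_descent(w0=0.0, learning_rate=0.1, steps=100)
print(w_final, loss(w_final))
```

With this learning rate the weight ends up very close to 3, the minimum of the toy loss. Real models apply the same update to millions of weights, with the gradient usually estimated from a mini-batch.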

Dynamic Learning Rate Adjustments

Modern techniques for rate adjustment include:

- Learning Rate Decay: Gradually decreases the learning rate during training to improve stability.

- Adaptive Learning Rates: Algorithms like Adam adjust the effective step size for each parameter using running averages of past gradients and their squares.
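To make the adaptive idea concrete, here is a rough from-scratch sketch of the Adam update rule in plain Python. The default values for `beta1`, `beta2`, and `eps` are the commonly quoted ones; the badly scaled toy gradient `toy_grad` and the function name `adam_minimize` are assumptions made for this example, and a real project would normally use a library optimizer instead.

```python
import math


def adam_minimize(grad_fn, w0, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a function with the Adam update rule, given its gradient."""
    w = list(w0)
    m = [0.0] * len(w)   # running average of gradients (first moment)
    v = [0.0] * len(w)   # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        for i in range(len(w)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)   # bias correction for early steps
            v_hat = v[i] / (1 - beta2 ** t)
            # The step for each parameter is divided by its own gradient scale.
            w[i] -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w


def toy_grad(w):
    """Gradient of 0.5 * (100 * w[0]**2 + w[1]**2): two very different scales."""
    return [100.0 * w[0], 1.0 * w[1]]


w_adam = adam_minimize(toy_grad, [1.0, 1.0])
print(w_adam)
```

Because each step is normalized by that parameter's own gradient history, the steep and the shallow coordinate make progress at a similar pace, which a single fixed step size would struggle to achieve on this toy problem.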

Common Applications of Learning Rate in Machine Learning

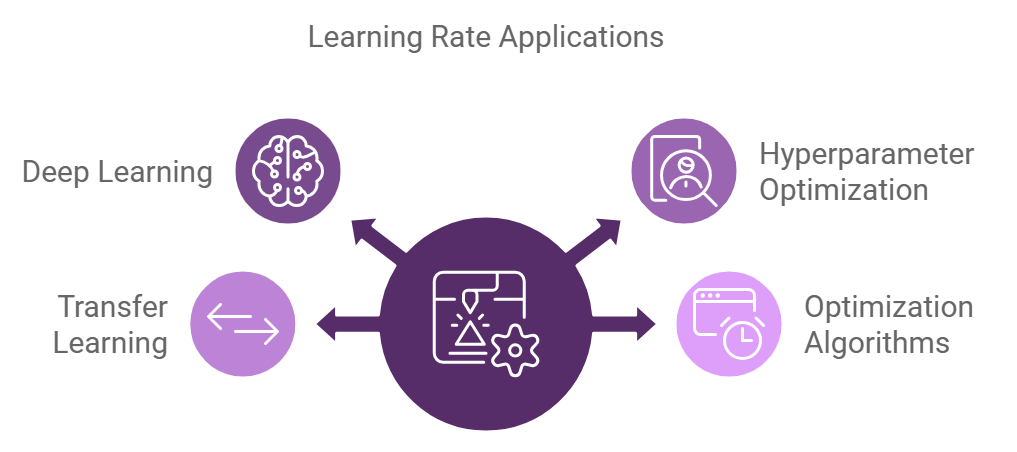

The learning rate is fundamental to various machine learning workflows, including:

- Deep Learning: Tuning the learning rate is crucial when training complex neural networks to ensure effective learning.

- Hyperparameter Optimization: Learning rate tuning is a common step in fine-tuning model performance.

- Transfer Learning: A lower learning rate is often used when fine-tuning pre-trained models on new datasets.

- Optimization Algorithms: Algorithms like SGD, Adam, and RMSprop rely on the learning rate to update model parameters.
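As a small illustration of the transfer learning case, the sketch below gives pre-trained weights a much lower learning rate than a freshly initialized output layer. The group names, the weight and gradient values, and the `sgd_step` helper are all hypothetical; deep learning frameworks offer their own mechanisms for per-group learning rates.

```python
def sgd_step(param_groups, grads):
    """Apply one SGD step, using each parameter group's own learning rate."""
    for name, group in param_groups.items():
        lr = group["lr"]
        group["weights"] = [
            w - lr * g for w, g in zip(group["weights"], grads[name])
        ]


# Hypothetical groups: pre-trained layers move slowly, the new head moves faster.
param_groups = {
    "pretrained_backbone": {"lr": 1e-4, "weights": [0.5, -0.2]},
    "new_head": {"lr": 1e-2, "weights": [0.0, 0.0]},
}
# Made-up gradients of equal size, to make the difference in step size visible.
grads = {"pretrained_backbone": [1.0, 1.0], "new_head": [1.0, 1.0]}

sgd_step(param_groups, grads)
print(param_groups)
```

With identical gradients, the new head moves 100 times further than the pre-trained weights in this step, which helps preserve what the pre-trained layers already encode.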

Benefits of Adjusting the Learning Rate

Choosing a suitable learning rate and adjusting it dynamically provides several advantages:

- Faster Convergence: Optimizing the learning rate can significantly reduce the time needed for training.

- Improved Model Accuracy: A well-tuned learning rate helps the model converge to a good solution, minimizing loss effectively.

- Efficient Resource Usage: Faster convergence reduces computational costs and training time.

- Reduced Overfitting: Dynamic adjustments can help limit overfitting by keeping weight updates controlled.

- Stability in Training: Proper learning rate schedules stabilize the training process, avoiding oscillations.

Challenges of Learning Rate Tuning

Despite its importance, setting the learning rate comes with challenges:

- High Learning Rate:

  - Risks overshooting the minimum of the loss function.

  - May cause training instability and failure to converge.

- Low Learning Rate:

  - Leads to excessively long training times.

  - Risks getting stuck in local minima.

- Complexity of Dynamic Adjustment: Techniques like learning rate decay or cyclical learning rates introduce extra settings of their own (decay factors, cycle lengths) that also need tuning, which adds experimentation cost.

- Data Dependency: The optimal learning rate often varies depending on the dataset and model architecture.
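The high and low learning rate failure modes are easy to reproduce on a toy problem. The sketch below minimizes `f(w) = w**2` with plain gradient descent for a range of rates; the function, the step count, and the specific rates are assumptions picked to make the behavior visible.

```python
def final_loss(learning_rate, steps=50, w0=1.0):
    """Minimize f(w) = w**2 with gradient descent and return the final loss."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2.0 * w   # the gradient of w**2 is 2w
    return w ** 2


# Sweep rates on a logarithmic scale, plus two values that are too high.
for lr in (1e-4, 1e-3, 1e-2, 1e-1, 1.0, 1.1):
    print(f"lr={lr:<7g} final loss={final_loss(lr):.3e}")
```

On this problem the smallest rates barely reduce the loss in 50 steps, a rate of 0.1 converges quickly, a rate of 1.0 bounces between `w = 1` and `w = -1` without improving, and a rate of 1.1 makes the loss grow at every step.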

Best Practices for Setting Learning Rates

To effectively set and adjust the learning rate, consider these best practices:

- Start with a Standard Value: Begin with commonly used rates like 0.001 or 0.01 and adjust based on performance.

- Use Learning Rate Schedules: Techniques like step decay, exponential decay, or cosine annealing can dynamically lower the rate during training.

- Experiment with Adaptive Methods: Employ optimizers like Adam or RMSprop that adapt the learning rate during training.

- Visualize Training Progress: Plot the loss curve to observe the impact of learning rate changes on convergence.

- Leverage Grid Search: Test multiple learning rate values, typically spaced on a logarithmic scale (for example 0.0001, 0.001, 0.01, 0.1), to identify the best one for your model.
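The three schedules named above can each be written as a small function of the epoch number. The versions below are one common formulation of each, sketched in plain Python; the parameter names and default values (`drop`, `epochs_per_drop`, `decay_rate`, `min_lr`) are assumptions for illustration, and library implementations may define them differently.

```python
import math


def step_decay(base_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return base_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(base_lr, epoch, decay_rate=0.05):
    """Shrink the rate smoothly by a constant factor per epoch."""
    return base_lr * math.exp(-decay_rate * epoch)


def cosine_annealing(base_lr, epoch, total_epochs, min_lr=0.0):
    """Follow half a cosine wave from base_lr down to min_lr."""
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return min_lr + (base_lr - min_lr) * cos_term


for epoch in (0, 10, 25, 50):
    print(
        epoch,
        step_decay(0.01, epoch),
        exponential_decay(0.01, epoch),
        cosine_annealing(0.01, epoch, total_epochs=50),
    )
```

All three start at the base rate and end lower; they differ in shape. Step decay changes abruptly, exponential decay falls fastest early on, and cosine annealing stays high for longer before dropping toward the minimum.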

Real-Life Example: Learning Rate in Action

A notable example comes from Google’s TensorFlow team, where dynamic learning rates were employed to train image recognition models. By using an adaptive learning rate schedule, they reportedly achieved:

- Faster Convergence: Training times were reduced by 30%.

- Higher Accuracy: Final model accuracy improved by 15%.

This demonstrates the critical role of learning rates in optimizing machine learning workflows.

Learning Rate vs. Related Concepts

The learning rate is distinct but interrelated with other hyperparameters in machine learning:

| Concept | Definition | Relation to Learning Rate |
|---|---|---|
| Batch Size | Number of samples processed before weight updates. | Smaller batch sizes may require lower learning rates. |
| Epoch | One complete pass through the training dataset. | The learning rate influences how many epochs are needed to converge. |
| Momentum | Adds velocity to weight updates for faster convergence. | Used alongside learning rate to improve optimization. |

Understanding these interactions is crucial for successful model training.
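The interaction between momentum and the learning rate can be seen in a short experiment. The sketch below uses the classical momentum formulation on a toy quadratic; the loss, the hyperparameter values, and the names `sgd_momentum` and `toy_grad` are assumptions for illustration.

```python
def sgd_momentum(grad_fn, w0, learning_rate, momentum, steps):
    """Gradient descent with classical momentum; momentum=0 gives plain descent."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        # The velocity accumulates past updates; the learning rate scales new ones.
        velocity = momentum * velocity - learning_rate * grad_fn(w)
        w += velocity
    return w


def toy_grad(w):
    """Gradient of the toy loss (w - 3)**2, which has its minimum at w = 3."""
    return 2.0 * (w - 3.0)


plain = sgd_momentum(toy_grad, 0.0, learning_rate=0.01, momentum=0.0, steps=100)
with_momentum = sgd_momentum(toy_grad, 0.0, learning_rate=0.01, momentum=0.9, steps=100)
print(abs(plain - 3.0), abs(with_momentum - 3.0))
```

With the same small learning rate and the same number of steps, the run with momentum finishes much closer to the minimum in this setup. The flip side is that momentum amplifies each update, so a learning rate that is safe without momentum can become too aggressive with it.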

Future Trends in Learning Rate Optimization

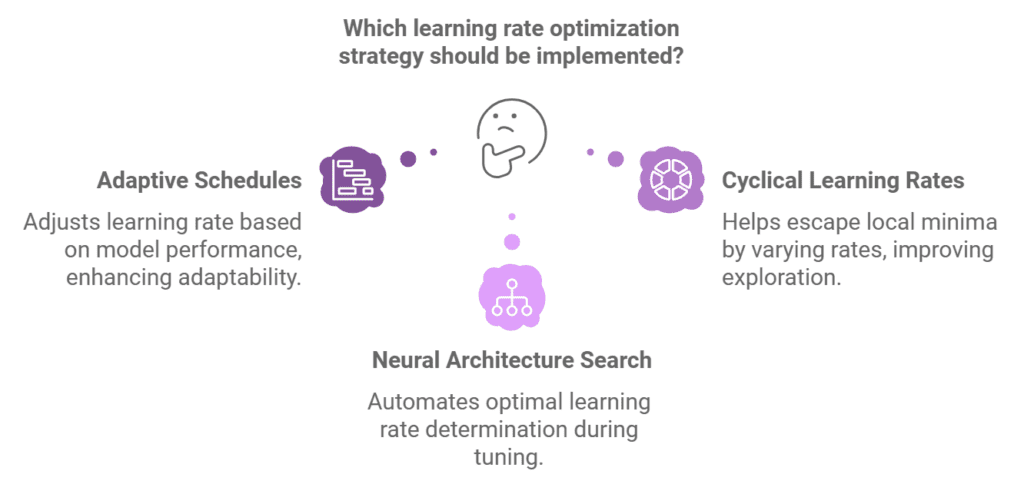

The future of learning rate optimization is shaped by advancements in machine learning. Emerging trends include:

- Adaptive Schedules: Algorithms that autonomously adjust the learning rate based on real-time model performance.

- Cyclical Learning Rates: Periodically increasing and decreasing rates to escape local minima and improve exploration.

- Neural Architecture Search and AutoML: Automated pipelines that search over learning rates alongside architectures and other hyperparameters.
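A cyclical schedule is simple to express in code. The sketch below implements one possible triangular variant, in which the rate rises linearly from a lower bound to an upper bound and back again; the function name `triangular_lr`, its parameters, and the bounds used in the loop are assumptions for illustration.

```python
def triangular_lr(step, base_lr, max_lr, half_cycle):
    """Triangular cyclical rate: base_lr -> max_lr -> base_lr every 2 * half_cycle steps."""
    cycle_pos = step % (2 * half_cycle)    # position inside the current cycle
    frac = cycle_pos / half_cycle          # runs from 0 to 2 over one cycle
    scale = 1.0 - abs(frac - 1.0)          # rises 0 -> 1, then falls 1 -> 0
    return base_lr + (max_lr - base_lr) * scale


for step in (0, 50, 100, 150, 200):
    print(step, triangular_lr(step, base_lr=0.001, max_lr=0.01, half_cycle=100))
```

In this example the rate starts at 0.001, peaks at 0.01 after 100 steps, and returns to 0.001 at step 200, after which the cycle repeats.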

Conclusion: Mastering the Learning Rate for Success

The learning rate is a cornerstone of model training and optimization. For machine learning engineers, data scientists, and AI developers, understanding and mastering this hyperparameter is essential for building efficient and accurate models.

By adopting best practices, leveraging advanced techniques, and staying updated with future trends, professionals can harness the full potential of the learning rate to achieve faster, more reliable, and resource-efficient training.