What is Feature Selection?

Feature selection is the process of identifying and selecting important variables to improve model performance. It helps in refining analysis by choosing relevant features, which can enhance accuracy, reduce overfitting, and decrease computational cost. Key methods include filter, wrapper, and embedded techniques.

How does Feature Selection Operate in Data Analysis?

Feature selection is a crucial process in machine learning and data analysis that involves selecting a subset of relevant features (variables) for model building. It helps improve model performance by identifying the most significant variables that contribute to the predictive power of the model. Here’s how it operates:

- Identify Important Variables: Feature selection techniques assess the importance of each feature based on statistical tests, correlation analysis, or model-based methods.

- Reduce Overfitting: By eliminating irrelevant or redundant features, feature selection helps in reducing overfitting, allowing the model to generalize better to unseen data.

- Improve Model Interpretability: A smaller set of features makes the model easier to understand and interpret, which is critical in fields like healthcare or finance.

- Enhance Computational Efficiency: Fewer features lead to shorter training times and lower computational costs, which is especially important with large datasets.

- Key Methods: Popular methods include filter methods (e.g., correlation coefficient), wrapper methods (e.g., forward selection), and embedded methods (e.g., LASSO regression).

In summary, feature selection not only boosts model accuracy but also simplifies the analysis process, making it a vital step in effective data science workflows.
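
The three method families named above can be sketched side by side. This is a minimal illustration, assuming scikit-learn is available; the synthetic dataset, the choice of three features, and the LASSO penalty `alpha=1.0` are arbitrary assumptions made for the example, not recommendations.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import (
    SelectFromModel,
    SelectKBest,
    SequentialFeatureSelector,
    f_regression,
)
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic data: 10 features, only 3 of which drive the target.
X, y = make_regression(
    n_samples=200, n_features=10, n_informative=3, noise=5.0, random_state=0
)

# Filter: score each feature on its own (univariate F-test), keep the top 3.
filter_sel = SelectKBest(score_func=f_regression, k=3).fit(X, y)

# Wrapper: forward selection, adding one feature at a time based on
# the cross-validated score of a linear model.
wrapper_sel = SequentialFeatureSelector(
    LinearRegression(), n_features_to_select=3, direction="forward", cv=5
).fit(X, y)

# Embedded: the L1 penalty in LASSO shrinks some coefficients to zero
# during training; features with non-zero coefficients are kept.
embedded_sel = SelectFromModel(Lasso(alpha=1.0)).fit(X, y)

for name, sel in [
    ("filter", filter_sel),
    ("wrapper", wrapper_sel),
    ("embedded", embedded_sel),
]:
    print(name, sel.get_support(indices=True))
```

The filter and wrapper selectors return exactly the requested number of features, while the number kept by the embedded selector depends on how strong the penalty is.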

Common Uses and Applications of Feature Selection

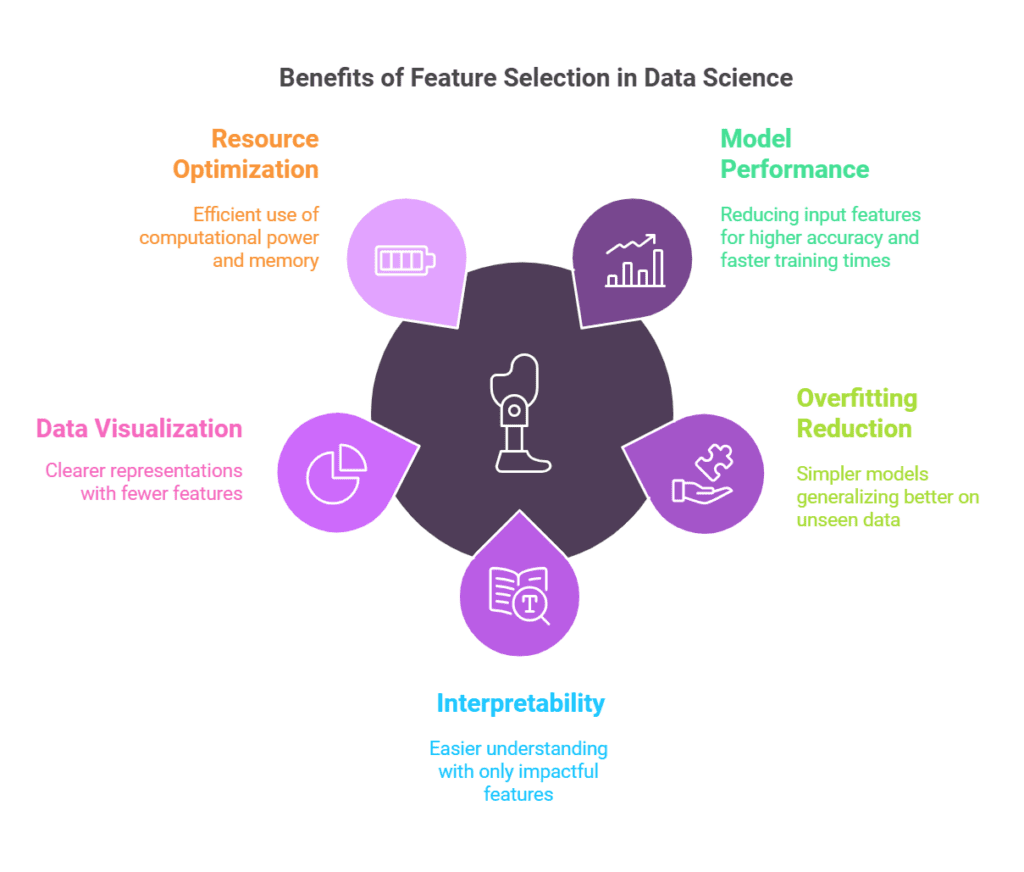

Analysts apply feature selection to keep only the variables that improve a model's accuracy and efficiency. Here are some key applications of feature selection:

- Improving Model Performance: By reducing the number of input features, models can achieve higher accuracy and faster training times.

- Reducing Overfitting: Fewer irrelevant features lead to simpler models that generalize better on unseen data.

- Enhancing Interpretability: Selecting only the most impactful features makes models easier to understand and interpret.

- Facilitating Data Visualization: A reduced set of features allows for clearer visual representations of data and results.

- Optimizing Resource Usage: Less computational power and memory are required, making the analysis more efficient.

What are the Advantages of Feature Selection?

Effective Feature Selection identifies the most relevant variables for a model and can yield several benefits:

- Improved Model Accuracy: Selecting the right features enhances predictive performance.

- Reduced Overfitting: By eliminating irrelevant features, models become simpler and more generalizable.

- Decreased Training Time: Fewer features lead to faster training and evaluation times.

- Enhanced Interpretability: Models with selected features are easier to understand and explain.

- Better Visualization: Fewer dimensions simplify data visualization and insights.

Key approaches to feature selection include filter, wrapper, and embedded methods, each of which narrows the feature set in a different way.

Are there any Drawbacks or Limitations Associated with Feature Selection?

While Feature Selection offers many benefits, it also has limitations such as:

- Underfitting: Selecting too few features might lead to a model that is too simple to capture the underlying patterns in the data.

- Information Loss: Important information may be discarded if relevant features are overlooked.

- Time Consumption: Some methods can be computationally intensive and time-consuming.

These challenges can impact the model’s performance and accuracy, leading to potential misinterpretations of results.
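
The information-loss risk can be illustrated with a small constructed case. The sketch below assumes scikit-learn and NumPy; the data is synthetic and built for the purpose. Two features, `x1` and `x2`, determine the label exactly when used together (the label is their XOR), but each one alone carries no signal, so a univariate filter scores them at zero and keeps a noisier third feature instead.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

rng = np.random.default_rng(0)

# x1 and x2 cover all four binary combinations equally often; y = x1 XOR x2.
x1 = np.tile([0, 0, 1, 1], 100)
x2 = np.tile([0, 1, 0, 1], 100)
y = x1 ^ x2

# x3 is a noisy copy of the label: useful on its own, but imperfect.
x3 = y + rng.normal(0.0, 1.0, size=y.size)

X = np.column_stack([x1, x2, x3])

# A univariate filter scores every column independently of the others.
selector = SelectKBest(score_func=f_classif, k=1).fit(X, y)

print("F-scores:", selector.scores_)
print("kept columns:", selector.get_support(indices=True))
```

The filter keeps only the third column, even though the first two jointly reproduce the label without error. Methods that evaluate features in combination, such as wrapper methods, are less exposed to this particular failure, at a higher computational cost.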

Can You Provide Real-life Examples of Feature Selection in Action?

For example, Feature Selection is used by healthcare analytics companies to improve patient outcome predictions. By selecting only the most relevant patient data, these companies can build models that accurately predict hospital readmissions. This demonstrates how Feature Selection can lead to better healthcare decisions and resource allocation.

How does Feature Selection Compare to Similar Concepts or Technologies?

Feature Selection is often contrasted with Dimensionality Reduction techniques based on feature extraction, such as principal component analysis (PCA). Feature Selection keeps a subset of the original features, while feature extraction transforms the features into a new, lower-dimensional space. Because the retained variables keep their original meaning, Feature Selection usually does more to preserve model interpretability.
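
The difference can be made concrete with a short sketch, assuming scikit-learn and its bundled iris dataset. PCA stands in here as one representative technique that transforms features; the choice of two output dimensions is arbitrary.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

data = load_iris()
X, y = data.data, data.target

# Feature selection: keep 2 of the 4 original columns, values unchanged.
selector = SelectKBest(score_func=f_classif, k=2).fit(X, y)
kept = selector.get_support(indices=True)
X_selected = selector.transform(X)
print("kept:", [data.feature_names[i] for i in kept])

# Transformation: 2 new axes, each a weighted mix of all 4 columns.
pca = PCA(n_components=2).fit(X)
X_projected = pca.transform(X)
print("PCA weights per component:")
print(pca.components_)
```

The selected columns are still named measurements that can be read directly, whereas each PCA component is a combination of every input and has to be interpreted through its weights.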

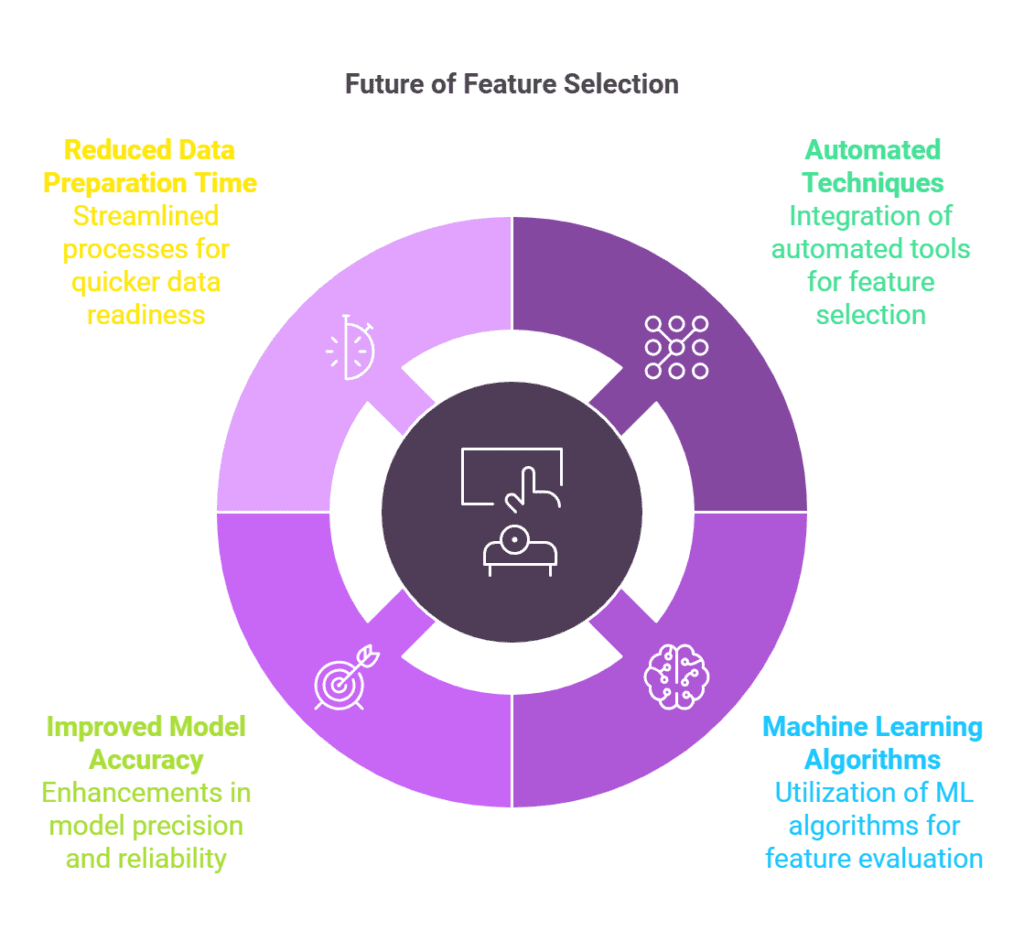

What are the Expected Future Trends for Feature Selection?

In the future, Feature Selection is expected to evolve by integrating automated techniques and machine learning algorithms. These changes could lead to more sophisticated feature evaluation methods, improving model accuracy and reducing the time required for data preparation.

What are the Best Practices for Using Feature Selection Effectively?

To use Feature Selection effectively, it is recommended to:

- Understand your data: Conduct exploratory data analysis to grasp the significance of features.

- Utilize multiple methods: Combine different feature selection techniques for robust results.

- Validate your choices: Use cross-validation to ensure selected features improve model performance.

Following these guidelines helps ensure that the selected features contribute positively to the model’s predictive capabilities.
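
The validation guideline can be sketched as follows, assuming scikit-learn. The selector is placed inside a pipeline so that it is refit on the training portion of every fold, which keeps held-out data from influencing which features are chosen. The dataset, the model, and `k=5` are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: 50 features, 5 of which are informative.
X, y = make_classification(
    n_samples=300, n_features=50, n_informative=5, n_redundant=0, random_state=0
)

# Same model with and without a selection step.
baseline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
with_selection = make_pipeline(
    StandardScaler(),
    SelectKBest(score_func=f_classif, k=5),
    LogisticRegression(max_iter=1000),
)

# 5-fold cross-validated accuracy for each pipeline.
baseline_scores = cross_val_score(baseline, X, y, cv=5)
selected_scores = cross_val_score(with_selection, X, y, cv=5)

print("all features:     ", baseline_scores.mean())
print("selected features:", selected_scores.mean())
```

Comparing the two averages shows whether selection helps on this data; if the selected-feature score is not better, the selection step is not earning its place.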

Are there Detailed Case Studies Demonstrating the Successful Implementation of Feature Selection?

One case study highlights a financial institution that implemented Feature Selection to improve its credit scoring model. By selecting relevant variables, the institution achieved a 20% increase in prediction accuracy on default risk. This implementation not only enhanced the model’s performance but also provided clearer insights into risk factors affecting borrowers.

What Related Terms are Important to Understand along with Feature Selection?

Related terms include Feature Engineering and Model Evaluation. Feature Engineering creates new features from existing data, and Model Evaluation assesses whether the selected features actually improve model performance.

What are the Step-by-step Instructions for Implementing Feature Selection?

To implement Feature Selection, follow these steps:

- Collect and preprocess your data to prepare it for analysis.

- Perform exploratory data analysis to identify potential features.

- Select appropriate feature selection methods based on your data type and model.

- Apply the selected methods and evaluate the results against model performance metrics.

- Iterate and refine your feature set based on validation results.

These steps ensure a systematic approach to identifying the most impactful features for your model.
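
The workflow above can be sketched end to end, assuming scikit-learn. Synthetic data stands in for a real, preprocessed dataset, the exploratory analysis step is omitted, and the candidate feature counts are arbitrary assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Collect data and hold out a test set that selection never sees.
X, y = make_classification(
    n_samples=400, n_features=30, n_informative=6, n_redundant=4, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Choose a selection method (a univariate filter here) and attach a model.
pipeline = Pipeline(
    [
        ("scale", StandardScaler()),
        ("select", SelectKBest(score_func=f_classif)),
        ("model", LogisticRegression(max_iter=1000)),
    ]
)

# Iterate over candidate feature counts, validating each with 5-fold CV.
search = GridSearchCV(pipeline, {"select__k": [3, 6, 10, 20, "all"]}, cv=5)
search.fit(X_train, y_train)

# Inspect the refined feature set and check it on the held-out data.
best_k = search.best_params_["select__k"]
kept = search.best_estimator_.named_steps["select"].get_support(indices=True)
test_accuracy = search.score(X_test, y_test)

print("best k:", best_k)
print("kept columns:", kept)
print("test accuracy:", test_accuracy)
```

Including `"all"` among the candidates lets the search conclude that no selection is needed, which is a legitimate outcome of the iteration step.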

Frequently Asked Questions

Q: What is feature selection?

A: Feature selection is the process of identifying and selecting a subset of relevant features for use in model construction.

- It helps in reducing the dimensionality of data.

- It improves model performance by focusing on important variables.

Q: Why is feature selection important for data analysis?

A: Feature selection is important because it can significantly impact the quality of predictive models.

- It reduces overfitting by eliminating irrelevant features.

- It improves model interpretability by highlighting key variables.

Q: What are the benefits of selecting relevant features?

A: Selecting relevant features offers several benefits.

- It minimizes computational costs by reducing the number of features.

- It enhances model accuracy by focusing on the most impactful data.

Q: What methods are commonly used for feature selection?

A: There are several methods for feature selection.

- Filter methods evaluate features based on statistical tests.

- Wrapper methods use a predictive model to assess feature subsets.

- Embedded methods select features as part of model training (e.g., LASSO regression).

Q: How does feature selection impact model performance?

A: Feature selection can greatly impact model performance.

- It can lead to faster training times.

- It often results in better generalization on unseen data.

Q: When should feature selection be performed?

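A: Feature selection should be performed during the data preprocessing stage, using only the training data so that information from the test set does not leak into the choice of features.

- It is typically done after data cleaning.

- It should be revisited when new features are added or when the model changes.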

Q: Can feature selection be automated?

A: Yes, feature selection can be automated using various algorithms.

- Algorithms like Recursive Feature Elimination (RFE) can streamline the process.

- Automated feature selection tools can save time and improve accuracy.
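
As a rough illustration of the RFE approach mentioned above, the sketch below uses scikit-learn's `RFE` class. The synthetic dataset, the logistic regression estimator, and the target of four features are assumptions made for the example.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

# Synthetic data: 20 features, 4 of which are informative.
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=4, n_redundant=0, random_state=0
)

# Repeatedly fit the model and drop the weakest feature (step=1)
# until only 4 features remain.
rfe = RFE(
    estimator=LogisticRegression(max_iter=1000),
    n_features_to_select=4,
    step=1,
).fit(X, y)

print("kept columns:", rfe.get_support(indices=True))
print("ranking (1 = kept):", rfe.ranking_)
```

When the right number of features is not known in advance, scikit-learn also provides `RFECV`, which chooses that number by cross-validation.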