What are Decision Trees?

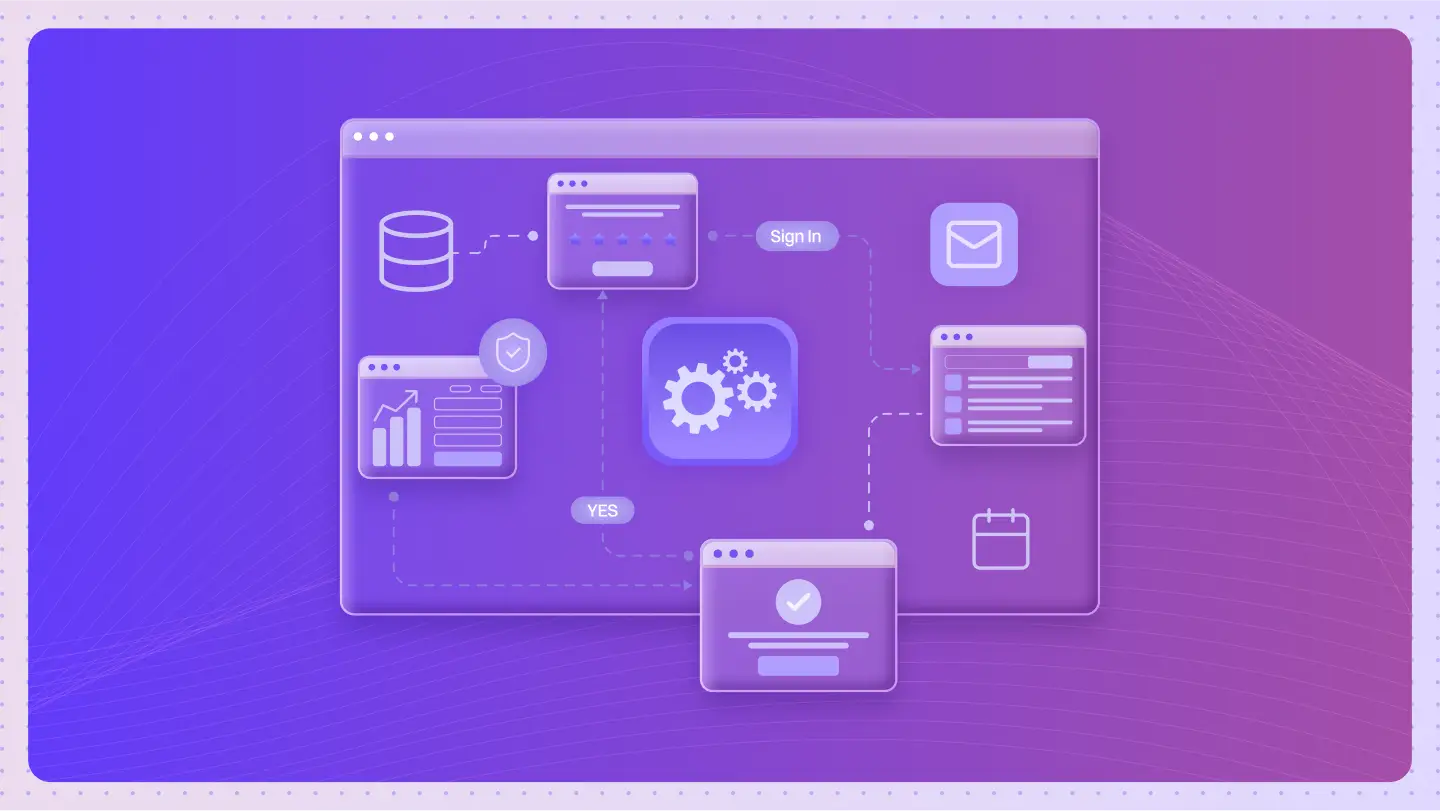

A decision tree is a flowchart-like model that reaches a prediction by asking a sequence of questions about the data. Decision trees support both classification and regression tasks by breaking a complex decision into simpler, manageable steps.

How do Decision Trees Operate in Data Analysis?

Decision Trees work by recursively splitting data into subsets based on the values of input features, which produces a tree-like model of decisions. Here’s how they operate:

- Node Creation: Each internal node tests a feature or attribute, and each branch leaving the node corresponds to one outcome of that test (a decision rule).

- Splitting Criteria: The algorithm selects the feature and split point that best separate the data, often using metrics like Gini impurity or entropy for classification and variance reduction for regression.

- Leaf Nodes: The process continues until a stopping condition is met, producing leaf nodes that represent final outcomes or predictions.

- Pruning: To avoid overfitting, branches that add little predictive value are removed, which improves how well the model generalizes.
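The splitting criteria named above can be made concrete with a small sketch. It assumes a single numeric feature and tries thresholds halfway between neighbouring values; the function names and the toy churn data are illustrative, not taken from any particular library.

```python
from collections import Counter
from math import log2

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over the classes present."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def best_threshold(values, labels, impurity=gini):
    """Return (threshold, weighted_impurity) for the best 'value <= t' split."""
    n = len(values)
    best = (None, impurity(labels))  # start from the unsplit node
    # Candidate thresholds: midpoints between consecutive distinct values.
    distinct = sorted(set(values))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * impurity(left) + len(right) * impurity(right)) / n
        if score < best[1]:
            best = (t, score)
    return best

# Toy data (hypothetical): weekly usage hours vs. whether the customer churned.
hours = [1, 2, 3, 8, 9, 10]
churned = ["yes", "yes", "yes", "no", "no", "no"]
threshold, score = best_threshold(hours, churned)
```

A weighted impurity of zero means both sides of the split contain a single class. Passing `impurity=entropy` swaps the criterion without changing the search.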

Benefits of using Decision Trees include their interpretability, allowing users to understand the model’s decision-making process easily. They handle both numerical and categorical data well and can be visualized for better insights. Key aspects of building effective Decision Trees involve selecting the right features, determining proper stopping criteria, and employing techniques like cross-validation to validate model performance.

Common Uses and Applications of Decision Trees

Decision Trees are widely utilized in various industries for their effectiveness in decision-making. They are particularly beneficial in classification and regression tasks, allowing data scientists and machine learning engineers to interpret complex datasets easily. Here are some main applications:

- Healthcare: Assists in diagnosing diseases by analyzing patient data, leading to better treatment plans.

- Finance: Used for credit scoring and risk assessment, enabling banks to make informed lending decisions.

- Marketing: Businesses leverage Decision Trees for customer segmentation and targeting, optimizing marketing strategies.

- Retail: Aids in inventory management by predicting sales trends based on historical data.

- Manufacturing: Optimizes production processes by analyzing factors that affect operational efficiency.

- Telecommunications: Identifies customer churn by analyzing usage patterns and predicting cancellations.

- Insurance: Used for underwriting processes and claims analysis, enhancing risk management strategies.

What are the Advantages of Using Decision Trees?

Decision Trees offer numerous benefits for classification and regression tasks. Their visual representation makes them easy to understand and interpret. Here are some key advantages of implementing Decision Trees:

- Simplicity and Interpretability: Provides a clear and intuitive model structure, making it straightforward for stakeholders to understand the decision-making process.

- Versatility: Applicable for both classification and regression tasks, making them a flexible choice for data analysis.

- Handling Non-linearity: Effectively models complex relationships in data, accommodating non-linear patterns without needing transformations.

- Feature Importance: Identifies significant features in the dataset, aiding in feature selection and enhancing model performance.

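- Minimal Data Preparation: Requires less data preprocessing than many other algorithms (for example, features do not need to be scaled or normalized), allowing for faster implementation.

- Robustness to Outliers: Less sensitive to outliers in the input features, because splits depend on the ordering of values rather than their magnitude. Extreme target values can still affect regression trees.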

Are there any Drawbacks or Limitations Associated with Decision Trees?

While Decision Trees offer many benefits, they also have limitations:

- Prone to overfitting, especially when the tree grows deep or the data is noisy.

- Sensitive to small changes in data, which can lead to different tree structures.

- Limited predictive power compared to ensemble methods.

These challenges can impact the model’s accuracy and generalizability, particularly when applied to unseen data.

Can You Provide Real-Life Examples of Decision Trees in Action?

For example, healthcare organizations use Decision Trees to predict patient outcomes based on medical history and treatment plans. This demonstrates how they can simplify complex decision-making processes and improve resource allocation.

How do Decision Trees Compare to Similar Concepts or Technologies?

A Random Forest combines many Decision Trees, each trained on a random sample of the data, and aggregates their predictions to improve accuracy. A single Decision Tree is simpler and easier to interpret, because its rules can be read and visualized directly.

What are the Expected Future Trends for Decision Trees?

In the future, Decision Trees are expected to evolve by integrating with AI and machine learning frameworks that enhance their predictive capabilities. These changes could lead to better model performance and user-friendly tools for data interpretation.

What are the Best Practices for Using Decision Trees Effectively?

To use Decision Trees effectively, it is recommended to:

- Prune the tree to avoid overfitting.

- Use cross-validation for better model evaluation.

- Ensure data is pre-processed for quality and relevance.

Following these guidelines ensures more reliable predictions and a clearer understanding of decision pathways.
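The cross-validation practice above can be sketched without any library. This is a minimal k-fold outline, assuming contiguous folds over rows that have already been shuffled; `fit_and_score` is a hypothetical callback that trains on one set of row indices and returns a score on the other.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

def cross_validate(n_samples, k, fit_and_score):
    """Average the score of fit_and_score(train_idx, test_idx) over k folds."""
    scores = [fit_and_score(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

# Ten rows split into three folds.
folds = list(k_fold_indices(10, 3))
```

Every row lands in exactly one test fold, so each observation is used for evaluation once and for training in the remaining folds.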

Are there Detailed Case Studies Demonstrating the Successful Implementation of Decision Trees?

One case study involves a retail company that implemented Decision Trees to optimize inventory management. By analyzing purchase patterns, the company achieved a 20% reduction in stockouts and improved customer satisfaction, illustrating the practical impact of Decision Trees in real-world applications.

What Related Terms are Important to Understand along with Decision Trees?

Related terms include ‘Random Forests’ and ‘Overfitting’, which are crucial for understanding Decision Trees. These provide context on model complexity and performance, helping users make informed decisions regarding algorithm selection.

What are the Step-by-step Instructions for Implementing Decision Trees?

To implement Decision Trees, follow these steps:

- Gather and preprocess your dataset for quality.

- Split the data into training and testing sets.

- Choose the appropriate features for the model.

- Train the Decision Tree using a suitable algorithm.

- Evaluate the model’s performance on the testing set with metrics like accuracy and precision.

These steps ensure a structured approach to building a robust Decision Tree model.
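As a rough illustration of these steps, the sketch below walks through them with the standard library only. It trains a decision stump (a tree with a single split) rather than a full tree, and the dataset, function names, and seed are all assumptions made for the example.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out the last part as the test set."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = max(1, int(len(rows) * test_fraction))
    return rows[:-n_test], rows[-n_test:]

def train_stump(rows):
    """Fit a one-split tree that predicts 1 when x > threshold, else 0.

    Chooses the threshold with the fewest training errors.
    """
    xs = sorted({x for x, _ in rows})
    best_t, best_errors = None, len(rows) + 1
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        errors = sum((x > t) != bool(y) for x, y in rows)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def predict(threshold, x):
    return 1 if x > threshold else 0

def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision(y_true, y_pred, positive=1):
    """True positives divided by all predicted positives (0.0 if none)."""
    predicted_pos = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not predicted_pos:
        return 0.0
    return sum(t == positive for t in predicted_pos) / len(predicted_pos)

# Steps 1 to 3: a tiny, already-clean dataset with one chosen feature
# (hypothetical values), split into training and testing rows.
data = [(x, 0) for x in range(1, 11)] + [(x, 1) for x in range(21, 31)]
train, test = train_test_split(data, test_fraction=0.25, seed=42)

# Step 4: train on the training rows only.
threshold = train_stump(train)

# Step 5: evaluate on the held-out rows only.
y_true = [y for _, y in test]
y_pred = [predict(threshold, x) for x, _ in test]
test_accuracy = accuracy(y_true, y_pred)
test_precision = precision(y_true, y_pred)
```

Keeping the test rows out of training is the point of the split: the reported metrics then estimate performance on unseen data rather than on data the model has already fit.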

Frequently Asked Questions

What are Decision Trees?

Decision Trees are flowchart-like structures used for decision-making. They help classify data into categories and can predict numerical outcomes.

How Do Decision Trees Aid in Classification?

Decision Trees split data based on feature values. Each branch represents a decision rule, and leaves represent the final classification.
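To show what following a branch means in practice, here is a small hand-written tree stored as nested dictionaries. The features, thresholds, and labels are hypothetical and chosen only to illustrate the traversal.

```python
# Internal nodes hold a decision rule; leaves hold the final class label.
tree = {
    "feature": "income", "threshold": 50000,
    "left": {"label": "decline"},              # income <= 50000
    "right": {                                 # income > 50000
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"label": "approve"},          # debt_ratio <= 0.4
        "right": {"label": "review"},          # debt_ratio > 0.4
    },
}

def classify(node, sample):
    """Follow one branch per decision rule until a leaf is reached."""
    while "label" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["label"]

applicant = {"income": 80000, "debt_ratio": 0.2}
decision = classify(tree, applicant)
```

The path taken for a sample doubles as an explanation of the prediction: here the applicant passes the income test and then the debt-ratio test.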

What is the Benefit of Using Decision Trees for Regression?

Regression trees predict continuous values, typically by returning the average target value of the training samples that fall in each leaf. They can capture non-linear relationships between variables and remain easy to visualize.
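A minimal regression sketch follows, assuming the common approach in which each leaf predicts the mean of its training targets and the split is chosen to minimize total squared error. The data and function names are illustrative, and the code expects at least two distinct feature values.

```python
from statistics import mean

def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def fit_regression_stump(xs, ys):
    """Find the 'x <= t' split that minimizes total squared error.

    Returns (threshold, left_prediction, right_prediction).
    """
    best = None
    distinct = sorted(set(xs))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            # Each side predicts the mean of its own targets.
            best = (cost, t, mean(left), mean(right))
    return best[1:]

# Toy data (hypothetical): floor area in square metres vs. price in thousands.
area = [30, 40, 50, 100, 110, 120]
price = [100, 110, 120, 300, 310, 320]
threshold, low_price, high_price = fit_regression_stump(area, price)
```

A full regression tree repeats this search inside each side of the split, so its prediction is a piecewise-constant function of the inputs.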

Why Are Decision Trees Useful for Interpretation?

They provide clear visual representations of decisions, allowing users to easily understand the logic behind predictions and highlighting important features impacting decisions.

What are Key Aspects of Building Effective Decision Trees?

Key aspects include selecting the right features and tree depth. Pruning helps reduce overfitting, and splitting criteria should be well-defined for accuracy.
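The effect of a depth limit can be seen in a small recursive builder. This sketch assumes a single numeric feature and Gini impurity, and it limits growth up front (sometimes called pre-pruning) rather than removing branches afterwards; the parameter names `max_depth` and `min_samples` are illustrative.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a non-empty list of labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def build_tree(rows, depth=0, max_depth=2, min_samples=2):
    """Build a tree from (x, label) rows with a single numeric feature x."""
    labels = [y for _, y in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping conditions: depth limit, too few rows, or a pure node.
    if depth >= max_depth or len(rows) < min_samples or len(set(labels)) == 1:
        return {"label": majority}
    best = None
    xs = sorted({x for x, _ in rows})
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [r for r in rows if r[0] <= t]
        right = [r for r in rows if r[0] > t]
        score = (len(left) * gini([y for _, y in left])
                 + len(right) * gini([y for _, y in right])) / len(rows)
        if best is None or score < best[0]:
            best = (score, t, left, right)
    if best is None:  # all x values identical, nothing to split on
        return {"label": majority}
    _, t, left, right = best
    return {
        "threshold": t,
        "left": build_tree(left, depth + 1, max_depth, min_samples),
        "right": build_tree(right, depth + 1, max_depth, min_samples),
    }

def depth_of(node):
    """Number of splits on the longest path from this node to a leaf."""
    if "label" in node:
        return 0
    return 1 + max(depth_of(node["left"]), depth_of(node["right"]))

# Noisy toy data (hypothetical): labels alternate, so a deep tree memorizes them.
rows = [(1, "a"), (2, "b"), (3, "a"), (4, "b"), (5, "a"), (6, "b")]
shallow = build_tree(rows, max_depth=1)
deep = build_tree(rows, max_depth=10)
```

On this data the unrestricted tree keeps splitting until every leaf is pure, which is the kind of memorization that a depth limit or pruning is meant to prevent.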

How Do Decision Trees Handle Missing Values?

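How Decision Trees handle missing values depends on the implementation. Some algorithms handle them natively (for example, CART can use surrogate splits), while others require rows with missing values to be dropped or the gaps to be filled with imputation techniques before training.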
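Imputation is the simplest of these options to illustrate. The sketch below fills gaps with the median of the observed values, assuming missing entries are represented as `None`; the column and helper name are hypothetical.

```python
from statistics import median

def impute_median(column):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in column if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in column]

ages = [25, None, 40, 31, None]
filled = impute_median(ages)
```

In a real workflow the fill value would be computed from the training rows only and then reused for the test rows, so that no information leaks from the evaluation data.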

Can Decision Trees Be Combined with Other Models?

Yes. Decision Trees are the base learners in popular ensemble methods such as random forests and boosting, which combine many trees to improve accuracy.
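The core idea behind a voting ensemble can be sketched in a few lines. This example assumes each tree is simply a callable that returns a label, and it uses three hypothetical one-rule trees. The bootstrap helper shows how bagging draws a training sample for each tree.

```python
import random
from collections import Counter

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement, as bagging does for each tree."""
    return [rng.choice(rows) for _ in rows]

def majority_vote(predictions):
    """Return the most common label among the individual predictions."""
    return Counter(predictions).most_common(1)[0][0]

def ensemble_predict(trees, sample):
    """Ask every tree for a label and return the majority answer."""
    return majority_vote([tree(sample) for tree in trees])

# Three hypothetical one-rule "trees" that disagree near the boundary.
trees = [
    lambda x: "high" if x > 4 else "low",
    lambda x: "high" if x > 5 else "low",
    lambda x: "high" if x > 6 else "low",
]
label = ensemble_predict(trees, 5.5)
```

Because each tree sees a slightly different sample, individual errors tend to differ, and the vote smooths them out. Boosting follows a different scheme, training trees in sequence so that each one focuses on the errors of the previous ones.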