What are Contextual Embeddings?

Contextual embeddings are vector representations of words that depend on the surrounding context, which improves semantic understanding in NLP models. Because the same word can receive different vectors in different sentences, these context-aware embeddings capture nuanced meanings and relationships that a single fixed vector per word cannot.

How do Contextual Embeddings Operate in NLP?

Contextual embeddings represent each word by taking its context into account. Unlike traditional word embeddings, which assign one fixed vector to each word, contextual embeddings are generated dynamically from the surrounding words. For example, "bank" receives different vectors in "the river bank" and "the bank approved the loan". This adaptability allows NLP models to capture nuanced meanings and improve semantic understanding.

Key functionalities include:

- Dynamic Representation: Each word’s vector changes depending on its context, allowing for a more accurate representation.

- Improved Semantic Understanding: By analyzing context, models can better distinguish the senses of homonyms and polysemous words.

- Context-Aware Models: Models such as ELMo and BERT produce these embeddings, which are then used for tasks like sentiment analysis and question answering.

- Enhanced Performance: Contextual embeddings typically improve accuracy on a wide range of language tasks compared with static word embeddings.

- Techniques for Generation: Key architectures include bidirectional LSTMs (used by ELMo) and transformers built on self-attention (used by BERT).
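The dynamic behavior described above can be illustrated with a minimal sketch of scaled dot-product self-attention, the core operation inside transformers such as BERT. It assumes hypothetical 3-dimensional input vectors and, as a simplification, uses the raw vectors directly as queries, keys, and values; real transformers apply learned projection matrices, multiple attention heads, and many stacked layers.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention using the inputs directly as Q, K and V.

    Returns (outputs, weights): outputs[i] is a weighted average of all input
    vectors, and weights[i] holds the attention weights used for position i.
    """
    d = len(vectors[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for q in vectors:
        # Similarity of this query to every key, scaled, then normalized to sum to 1
        w = softmax([dot(q, k) / scale for k in vectors])
        weights.append(w)
        # Mix the value vectors according to the attention weights
        outputs.append([sum(w[j] * vectors[j][i] for j in range(len(vectors)))
                        for i in range(d)])
    return outputs, weights


# Hypothetical static input vectors; dimensions loosely mean (finance, nature, function word)
STATIC = {
    "the": [0.0, 0.0, 1.0],
    "river": [0.0, 2.0, 0.0],
    "bank": [1.0, 1.0, 0.0],
    "loan": [2.0, 0.0, 0.0],
}


def contextualize(sentence):
    """Map each token to its context-dependent output vector (assumes no repeated tokens)."""
    tokens = sentence.split()
    outputs, _ = self_attention([STATIC[t] for t in tokens])
    return dict(zip(tokens, outputs))


river_bank = contextualize("the river bank")["bank"]
bank_loan = contextualize("the bank loan")["bank"]
print("bank | river:", [round(x, 3) for x in river_bank])
print("bank | loan: ", [round(x, 3) for x in bank_loan])
```

With these inputs, the output for "bank" leans toward the nature dimension next to "river" and toward the finance dimension next to "loan", even though its input vector is identical in both sentences.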

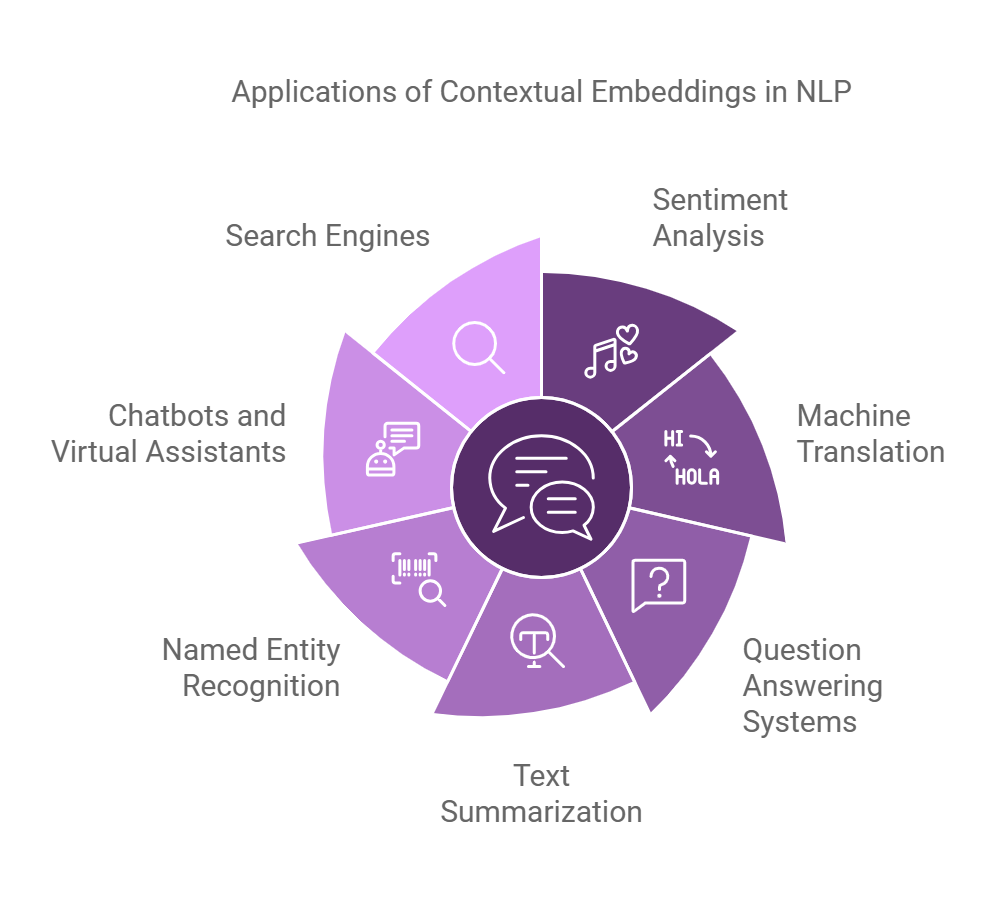

Common Uses and Applications of Contextual Embeddings

Because they capture how a word’s meaning depends on its context, contextual embeddings are widely used across natural language processing applications, including:

- Sentiment Analysis: Contextual embeddings help models detect sentiment shifts in text, such as negation ("not good"), improving accuracy in sentiment classification tasks.

- Machine Translation: By capturing context, these embeddings enhance translation quality by preserving meaning across languages.

- Question Answering Systems: They improve the relevance of answers by modeling the context of both the question and the passage that contains the answer.

- Text Summarization: Context-aware representations help identify the essential information when generating concise summaries.

- Named Entity Recognition: Contextual embeddings enhance the ability to identify entities in varied contexts, increasing recognition accuracy.

- Chatbots and Virtual Assistants: They allow for more natural interactions by understanding user intent based on context.

- Search Engines: Contextual embeddings improve search result relevance by understanding user queries more deeply.
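Search and retrieval systems often compare a query with documents by pooling token embeddings into one vector per text and ranking by cosine similarity. The sketch below assumes mean pooling and uses hypothetical 2-dimensional token vectors in place of real encoder output; production systems may instead use a dedicated sentence-embedding model or the vector of a special token.

```python
import math


def mean_pool(token_vectors):
    """Average token-level vectors into a single text vector."""
    n = len(token_vectors)
    return [sum(col) / n for col in zip(*token_vectors)]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_tokens, documents):
    """Return document ids sorted by cosine similarity to the query, best first."""
    q = mean_pool(query_tokens)
    scores = {doc_id: cosine(q, mean_pool(toks)) for doc_id, toks in documents.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical contextual token vectors, standing in for an encoder's output
query = [[0.9, 0.1], [0.8, 0.2]]
docs = {
    "mortgage-rates": [[1.0, 0.0], [0.7, 0.3]],
    "river-hiking": [[0.1, 0.9], [0.0, 1.0]],
}
print(rank(query, docs))
```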

What are the Advantages of Contextual Embeddings?

Contextual embeddings have become a core component of modern Natural Language Processing (NLP) systems because they improve semantic understanding. Key benefits of context-aware embeddings include:

- Improved Semantic Understanding: They capture the meaning of words based on their context, leading to more accurate interpretations.

- Dynamic Representations: Unlike static embeddings, contextual embeddings adapt to different contexts, providing more nuanced meanings.

- Enhanced Language Tasks: They significantly boost performance in various language tasks such as:

  - Sentiment analysis

  - Named entity recognition

  - Machine translation

- Reduced Ambiguity: By considering surrounding words, they minimize ambiguity in word meanings.

- State-of-the-Art Techniques: They leverage advanced techniques like transformers and attention mechanisms for effective representation generation.

- Better Generalization: Because they are pre-trained on large text corpora, contextual embeddings help models generalize across diverse datasets and tasks.

Are there any Drawbacks or Limitations Associated with Contextual Embeddings?

While Contextual Embeddings offer many benefits, they also have limitations: high computational cost (each occurrence of a word must be processed by a deep network rather than looked up in a table), greater memory requirements, and the need for large datasets for pre-training. These challenges can lengthen training and inference times and may require more powerful hardware.
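One source of this cost is that standard transformer self-attention compares every token with every other token, so the number of attention scores grows quadratically with sequence length. The rough estimate below counts only the attention score matrices, assuming 12 layers and 12 heads (as in BERT-base), 4-byte floats, and a single sequence; it ignores model weights, other activations, and optimizer state, so real memory use is considerably higher.

```python
def attention_score_entries(seq_len, num_heads, num_layers):
    """Count query-key scores in full self-attention for one sequence."""
    # Each head in each layer builds a seq_len x seq_len score matrix
    return seq_len * seq_len * num_heads * num_layers


def score_memory_mib(seq_len, num_heads, num_layers, bytes_per_value=4):
    """Memory in MiB to hold all score matrices at once (e.g., kept for backpropagation)."""
    return attention_score_entries(seq_len, num_heads, num_layers) * bytes_per_value / 2**20


# 12 layers x 12 heads matches BERT-base; doubling the length quadruples the count
for n in (128, 256, 512):
    entries = attention_score_entries(n, num_heads=12, num_layers=12)
    print(f"seq_len={n}: {entries:,} scores, {score_memory_mib(n, 12, 12):.1f} MiB")
```

For sequence lengths of 128, 256, and 512 tokens this gives 9, 36, and 144 MiB for the score matrices alone.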

Can You Provide Real-Life Examples of Contextual Embeddings in Action?

For example, Google has applied its BERT model, which produces Contextual Embeddings, to Search to better understand the context of words in queries. This demonstrates how context-aware embeddings can lead to more accurate interpretations of user intent.

How do Contextual Embeddings Compare to Similar Concepts or Technologies?

Compared with traditional word embeddings such as Word2Vec, Contextual Embeddings can represent a word’s meaning in its specific context. Word2Vec assigns each word a single static vector regardless of where it appears, whereas Contextual Embeddings change with the surrounding words, making them better suited to nuanced language tasks.
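The difference can be made concrete with a toy comparison. The sketch assumes a hypothetical static lookup table standing in for Word2Vec vectors and, as a deliberately crude stand-in for a real contextual model, averages each word with its immediate neighbors. ELMo and BERT use deep networks rather than a fixed window, but the property being illustrated is the same: the contextual vector depends on the sentence, while the static one does not.

```python
import math

# Hypothetical static vectors standing in for a Word2Vec lookup table
STATIC = {
    "the": [0.0, 0.0, 1.0],
    "river": [0.0, 2.0, 0.0],
    "bank": [1.0, 1.0, 0.0],
    "loan": [2.0, 0.0, 0.0],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


def static_embedding(tokens, i):
    """Static: the vector for tokens[i] ignores every other token."""
    return STATIC[tokens[i]]


def window_embedding(tokens, i, window=1):
    """Toy contextual vector: mean of tokens[i] and its neighbors within `window` positions."""
    lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
    vecs = [STATIC[t] for t in tokens[lo:hi]]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


s1 = "the river bank".split()  # "bank" is at index 2
s2 = "the bank loan".split()   # "bank" is at index 1
static_sim = cosine(static_embedding(s1, 2), static_embedding(s2, 1))
context_sim = cosine(window_embedding(s1, 2), window_embedding(s2, 1))
print(f"static: {static_sim:.3f}, contextual: {context_sim:.3f}")
```

The two static vectors for "bank" are identical (cosine similarity 1.0), while the toy contextual vectors differ because their neighbors differ.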

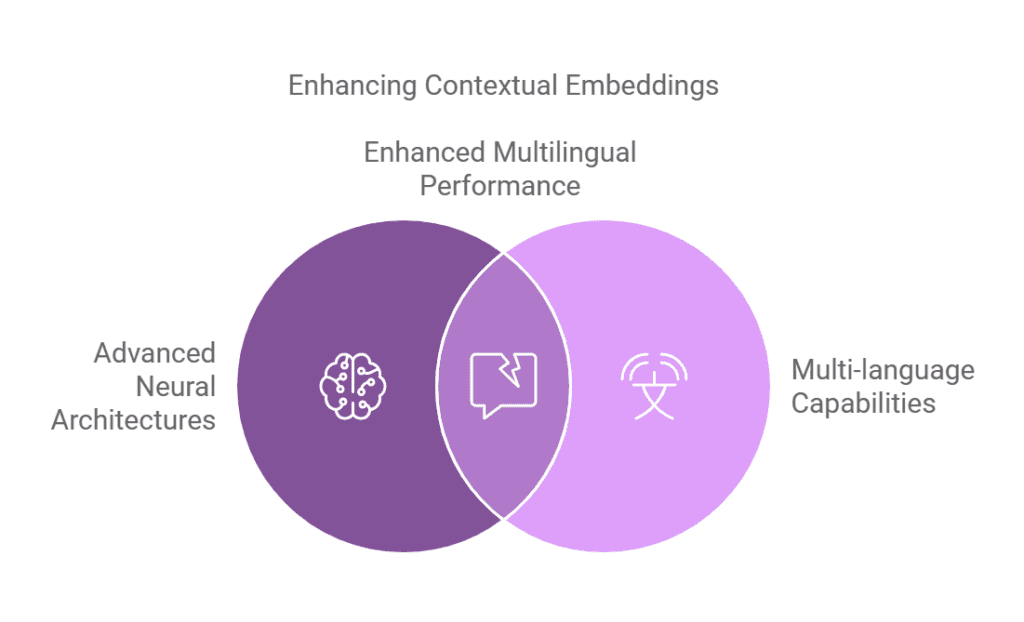

What are the Expected Future Trends for Contextual Embeddings?

In the future, Contextual Embeddings are expected to evolve by incorporating more advanced neural architectures and enhancing multi-language capabilities. These changes could lead to better performance in multilingual tasks and improved understanding of complex sentence structures.

What are the Best Practices for Using Contextual Embeddings Effectively?

To use Contextual Embeddings effectively, it is recommended to:

- Choose the right model based on your specific task.

- Fine-tune the embeddings on your dataset.

- Monitor performance metrics closely during training.

Following these guidelines generally improves model accuracy and relevance.
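Monitoring validation metrics during fine-tuning is commonly paired with early stopping, which halts training once the validation loss stops improving and helps limit overfitting. The sketch below replays a hypothetical list of per-epoch validation losses; the function and its `patience` and `min_delta` parameters are illustrative names, not a specific library's API.

```python
def early_stopping(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has failed to improve on the best value by more
    than `min_delta` for `patience` consecutive epochs. If that never happens,
    stop_epoch is the last epoch. best_epoch is the checkpoint worth restoring.
    """
    best_loss = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the counter
            best_loss, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses recorded after each fine-tuning epoch
losses = [0.62, 0.48, 0.41, 0.43, 0.45, 0.40]
print(early_stopping(losses, patience=2))
print(early_stopping(losses, patience=3))
```

With `patience=2` training stops at epoch 4 and restores epoch 2; a larger patience lets the late improvement at epoch 5 be found, at the cost of extra training.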

Are there Detailed Case Studies Demonstrating the Successful Implementation of Contextual Embeddings?

One notable case study involved a financial services company that implemented Contextual Embeddings for sentiment analysis. By using these embeddings, they achieved a 30% increase in the accuracy of sentiment detection, enabling better decision-making based on customer feedback.

What Related Terms are Important to Understand along with Contextual Embeddings?

Related terms include Word Embeddings and Transfer Learning. Word embeddings lay the foundation for representing words as vectors, and transfer learning describes how pre-trained models, and the contextual embeddings they produce, are adapted to new tasks and contexts.

What are the Step-by-step Instructions for Implementing Contextual Embeddings?

To implement Contextual Embeddings, follow these steps:

- Select a pre-trained model suitable for your application.

- Prepare your dataset for fine-tuning.

- Fine-tune the model on your specific data.

- Evaluate the model’s performance using relevant metrics.

- Deploy the model for real-world application.

These steps provide a structured approach to integrating Contextual Embeddings into your projects.
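For the evaluation step, classification tasks such as sentiment analysis are commonly scored with accuracy, precision, recall, and F1. The sketch below computes these for a binary task from hypothetical gold labels and model predictions; libraries such as scikit-learn provide equivalent, more complete functions.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for the `positive` class; 0.0 where undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical gold labels and predictions (1 = positive sentiment)
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 0, 0, 1]
print("accuracy:", accuracy(y_true, y_pred))
print("precision, recall, F1:", precision_recall_f1(y_true, y_pred))
```

Reporting precision and recall alongside accuracy matters when classes are imbalanced, since accuracy alone can hide a model that rarely predicts the minority class.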

Frequently Asked Questions

What Are Contextual Embeddings?

Contextual embeddings are representations of words that take into account the context in which they appear.

- They capture semantic meaning based on surrounding words.

- They adapt to different usages of the same word.

How Do Contextual Embeddings Improve Semantic Understanding?

They provide richer word representations that consider context.

- This leads to a better understanding of word meanings.

- They help in distinguishing between different senses of a word.

What Are the Benefits of Using Context-Aware Embeddings?

They offer more accurate representations for language tasks.

- Improved performance in tasks like sentiment analysis.

- Better handling of polysemy and homonymy.

What Techniques Are Used to Generate Contextual Embeddings?

Common techniques include models like BERT and ELMo.

- These models use deep learning to generate embeddings.

- BERT uses self-attention to capture context, while ELMo uses bidirectional LSTMs.

Can Contextual Embeddings Be Used in Transfer Learning?

Yes, they are highly useful in transfer learning scenarios.

- Pre-trained embeddings can be fine-tuned on specific tasks.

- This often yields strong performance with less labeled task data.
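A simple form of transfer learning keeps the pre-trained encoder frozen and trains only a small classifier on top of its embeddings; full fine-tuning additionally updates the encoder's weights. The sketch below fits a logistic-regression head by batch gradient descent on hypothetical 2-dimensional sentence embeddings with sentiment labels; the learning rate and epoch count are arbitrary illustrative choices.

```python
import math


def train_head(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression head on frozen embedding features with batch gradient descent."""
    dim = len(features[0])
    w = [0.0] * dim
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of label 1
            err = p - y                     # gradient of log loss w.r.t. z
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        # Average the gradient over the batch and take one step
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


# Hypothetical frozen sentence embeddings (e.g., pooled encoder outputs) and labels
X = [[0.9, 0.1], [0.8, 0.3], [0.2, 0.9], [0.1, 0.7]]
y = [1, 1, 0, 0]
w, b = train_head(X, y)
print([predict(w, b, x) for x in X])
```

Only the head's few parameters are learned here, which is why this setup can work with very little labeled data.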

What Types of NLP Tasks Benefit from Contextual Embeddings?

Many NLP tasks benefit from these embeddings.

- Tasks like named entity recognition and machine translation.

- They also improve performance in question answering systems.

Are There Any Limitations to Using Contextual Embeddings?

There are some challenges to consider.

- They require significant computational resources.

- Fine-tuning them on small datasets can lead to overfitting.