What is BERT?

BERT (Bidirectional Encoder Representations from Transformers) is a Transformer-based language model, introduced by Google in 2018, that builds the representation of each word from the context on both its left and its right. It is commonly fine-tuned for tasks such as question answering and sentiment analysis.

How does the BERT Model Operate in NLP Tasks?

BERT is built from a stack of Transformer encoder layers and is pre-trained on large amounts of unlabeled text. Because self-attention lets every token attend to every other token in the input, each word's representation draws on its left and right context at the same time, rather than only on the preceding words as in left-to-right language models.

Key functionalities of BERT include:

- Contextual Understanding: BERT uses self-attention to weight every other token in the input when building the representation of a word, so the same word receives different representations in different sentences.

- Masked Language Model (MLM): During pre-training, about 15% of the input tokens are selected at random and hidden, and BERT learns to predict them from the surrounding context on both sides, which teaches it semantic relationships between words.

- Next Sentence Prediction (NSP): BERT is also trained to predict whether the second of two sentences actually follows the first in the source text, which helps with sentence-pair tasks such as question answering and natural language inference.
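
The masked language model objective described above can be sketched in a few lines. The snippet below is a simplified illustration, not BERT's actual data pipeline: it assumes the commonly described recipe in which a selected token is replaced by `[MASK]` 80% of the time, by a random vocabulary token 10% of the time, and left unchanged 10% of the time. The function name `mask_tokens`, the toy vocabulary, and the per-token coin flip (instead of sampling a fixed number of positions) are assumptions made for this example.

```python
import random

MASK, CLS, SEP = "[MASK]", "[CLS]", "[SEP]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] holds the original token at positions the model must predict
    and None everywhere else. Hypothetical helper, for illustration only.
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Never corrupt the special tokens; skip unselected positions.
        if tok in (CLS, SEP) or rng.random() >= mask_prob:
            continue
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token in place
    return corrupted, labels


# Toy usage with an assumed six-word vocabulary.
toy_vocab = ["the", "cat", "sat", "on", "mat", "dog"]
sentence = [CLS, "the", "cat", "sat", "on", "the", "mat", SEP]
corrupted, labels = mask_tokens(sentence, toy_vocab, rng=random.Random(0))
print(corrupted)
print(labels)
```

During pre-training, the loss would be computed only at positions where `labels` is not `None`.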

Benefits of using BERT include:

- Bidirectional Representation: Captures context from both directions, making it more effective for understanding complex sentences.

- Fine-tuning Capability: A pre-trained BERT model can be adapted to a specific task by adding a small output layer and training on labeled data, which makes it versatile for applications like sentiment analysis, named entity recognition, and more.

- High Performance: When it was released, BERT set new state-of-the-art results on NLP benchmarks such as GLUE and SQuAD.

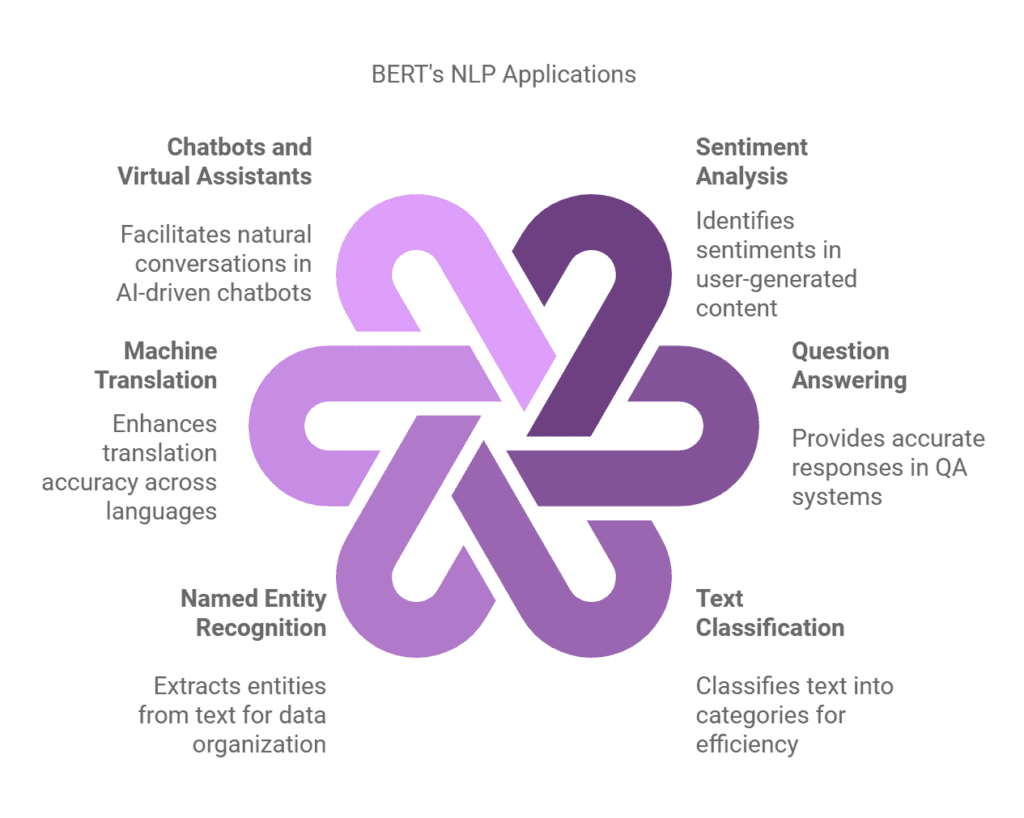

Common Uses and Applications of BERT in Real-world Scenarios

Because one pre-trained model can be fine-tuned for many tasks, BERT is used across a wide range of Natural Language Processing (NLP) applications. Its main applications include:

- Sentiment Analysis: BERT excels in identifying sentiments in user-generated content, enabling businesses to better understand customer opinions.

- Question Answering: BERT’s ability to comprehend context allows for more accurate responses in QA systems, enhancing user experience in various applications.

- Text Classification: Organizations leverage BERT for classifying text into categories, improving the efficiency of content management systems.

- Named Entity Recognition: BERT improves the extraction of entities from text, aiding in data organization and retrieval tasks.

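- Machine Translation: BERT is an encoder-only model and does not generate translations by itself, but its contextual representations can support translation systems, for example as part of the encoder.

- Chatbots and Virtual Assistants: BERT’s contextual understanding helps with the language-understanding side of conversational systems, such as recognizing user intent, which improves user interaction in AI-driven chatbots.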
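
Several of the applications above, question answering in particular, give BERT two texts at once (for example, a question and a passage). A minimal sketch of how such a pair is typically packed into one input sequence follows. The layout `[CLS] A [SEP] B [SEP]` with segment ids and a padding mask reflects BERT's usual input format; the function name `pack_pair` and the pre-tokenized toy inputs are assumptions for this example.

```python
def pack_pair(tokens_a, tokens_b, max_len):
    """Pack two token lists into one BERT-style input.

    Returns (tokens, segment_ids, attention_mask), each of length max_len.
    Hypothetical helper, for illustration only.
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    if len(tokens) > max_len:
        raise ValueError("pair is longer than max_len; truncate before packing")

    # Segment 0 covers [CLS], text A and the first [SEP]; segment 1 covers the rest.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    # 1 marks real tokens, 0 marks padding the model should ignore.
    attention_mask = [1] * len(tokens)

    pad = max_len - len(tokens)
    return (
        tokens + ["[PAD]"] * pad,
        segment_ids + [0] * pad,
        attention_mask + [0] * pad,
    )


question = ["who", "wrote", "it"]
passage = ["alice", "wrote", "it"]
tokens, segment_ids, attention_mask = pack_pair(question, passage, max_len=10)
print(tokens)
print(segment_ids)     # [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
print(attention_mask)  # [1, 1, 1, 1, 1, 1, 1, 1, 1, 0]
```

In a real pipeline the tokens would then be mapped to vocabulary ids; library tokenizers usually perform the packing and the id lookup in a single call.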

What are the Advantages of Using BERT Models?

The following benefits make BERT a useful tool for NLP specialists, AI researchers, and language model developers:

- Bidirectional Context Understanding: BERT processes words in relation to all the other words in a sentence, capturing context more effectively.

- Improved Accuracy: Fine-tuned BERT models typically improve accuracy over earlier approaches on tasks such as sentiment analysis, question answering, and natural language inference.

- Versatile Applications: BERT can be fine-tuned for a wide array of tasks, including text classification, named entity recognition, and language inference.

- Pre-trained Models: Pre-trained BERT checkpoints (for example, BERT-Base and BERT-Large) are publicly available, saving developers the time and cost of pre-training from scratch.

- State-of-the-Art Performance: It has set new benchmarks on various NLP challenges, showcasing its effectiveness.

- Enhanced Transfer Learning: BERT’s architecture promotes better transfer learning, allowing models to generalize across different tasks.

By leveraging BERT, organizations can achieve superior language understanding, making it a cornerstone technology in NLP advancements.

Are there any Drawbacks or Limitations Associated with BERT?

While BERT offers many benefits, it also has limitations such as:

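- High computational requirements for training and inference, since even the base model has about 110 million parameters.

- Long pre-training times that may not be feasible for all organizations, which is why most teams start from a published checkpoint.

- Sensitivity of fine-tuning to hyperparameters such as learning rate and number of epochs, which can require several runs per task.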

These challenges can impact deployment speed and operational costs for businesses.

Can You Provide Real-life Examples of BERT in Action?

For example, Google announced in 2019 that it uses BERT in Search to improve the relevance of results. Because BERT interprets each query word in the context of the words around it, it handles longer, more conversational queries better, which shows how pre-trained language models can refine search algorithms and make information easier to retrieve.

How does BERT Compare to Similar Concepts or Technologies?

Compared to traditional language models, BERT differs in its bidirectional approach. Traditional left-to-right models condition each word only on the words before it, whereas BERT conditions each word on the words on both sides. This makes BERT more effective for tasks that depend on understanding a whole sentence, although, unlike left-to-right models, it is not designed to generate text.
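
The difference between the two approaches can be illustrated with attention masks. The sketch below is only a conceptual illustration under simple assumptions: `attention_mask` is a hypothetical helper, and the example sentence is made up. It shows which tokens are visible when the representation of one word is computed.

```python
def attention_mask(seq_len, bidirectional):
    """Build a seq_len x seq_len mask; mask[i][j] is 1 when position i may attend to j."""
    return [
        [1 if bidirectional or j <= i else 0 for j in range(seq_len)]
        for i in range(seq_len)
    ]


tokens = ["the", "bank", "of", "the", "river"]
causal = attention_mask(len(tokens), bidirectional=False)  # left-to-right model
full = attention_mask(len(tokens), bidirectional=True)     # BERT-style encoder

# Which tokens can inform the representation of "bank" (index 1)?
visible_causal = [t for t, m in zip(tokens, causal[1]) if m]
visible_full = [t for t, m in zip(tokens, full[1]) if m]
print(visible_causal)  # ['the', 'bank']
print(visible_full)    # ['the', 'bank', 'of', 'the', 'river']
```

With the left-to-right mask, "bank" is encoded without seeing "river", so the model cannot yet tell a riverbank from a financial institution; with the bidirectional mask, the disambiguating word is available.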

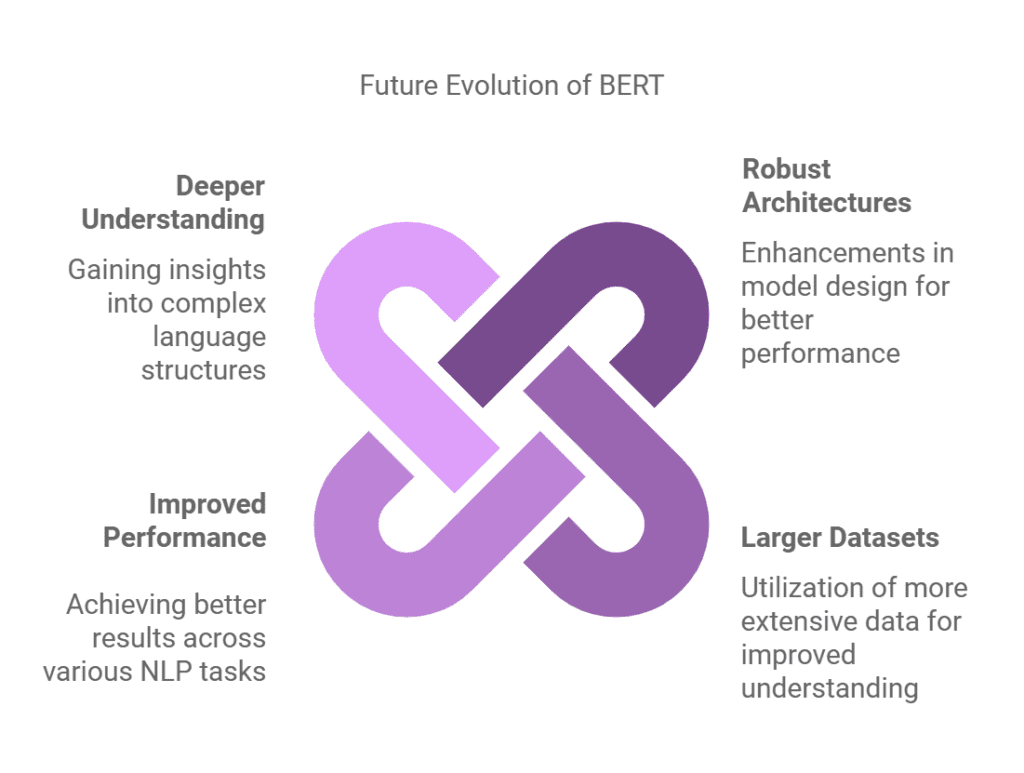

What are the Expected Future Trends for BERT?

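In the future, BERT-style models are expected to keep evolving through refined architectures, improved training recipes, and larger datasets. Follow-up models such as RoBERTa, ALBERT, and DistilBERT already vary the training procedure, parameter sharing, and model size. These changes could lead to improved performance on a wider range of NLP tasks and a deeper understanding of complex language structures.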

What are the Best Practices for Using BERT Effectively?

To use BERT effectively, it is recommended to:

- Fine-tune the model on task-specific datasets.

- Use the tokenizer and vocabulary that match the pre-trained checkpoint.

- Monitor performance metrics on a held-out validation set during training.

Following these guidelines ensures better model accuracy and relevance in applications.
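
On the tokenization point: BERT checkpoints are tied to a WordPiece vocabulary, so text has to be split the same way at fine-tuning time as it was during pre-training. The sketch below illustrates the greedy longest-match-first idea behind WordPiece on a single word. It is a simplified illustration: the function name `wordpiece` and the tiny vocabulary are assumptions, and real tokenizers also handle casing, punctuation, and length limits.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Split one word into sub-word pieces by greedy longest-match-first lookup.

    Pieces that continue a word carry the '##' prefix. If any part of the
    word cannot be matched, the whole word maps to the unknown token.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]
        pieces.append(piece)
        start = end
    return pieces


# Assumed toy vocabulary; real BERT vocabularies hold roughly 30,000 entries.
toy_vocab = {"play", "##ing", "##ed", "un", "##believ", "##able"}
print(wordpiece("playing", toy_vocab))       # ['play', '##ing']
print(wordpiece("unbelievable", toy_vocab))  # ['un', '##believ', '##able']
print(wordpiece("xyz", toy_vocab))           # ['[UNK]']
```

Using a different vocabulary or splitting rule than the checkpoint expects would map words to the wrong embeddings, which is why the matching tokenizer matters.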

Are there Detailed Case Studies Demonstrating the Successful Implementation of BERT?

One notable case study is from Google AI, where BERT was implemented in their search engine. The key outcomes included:

- Improved understanding of natural language queries.

- More relevant search results, particularly for longer, conversational queries.

This implementation showcased how BERT can effectively address real-world challenges in information retrieval.

What Related Terms are Important to Understand along with BERT?

Related terms include ‘Transformers’ and ‘Natural Language Processing (NLP)’, which are crucial for understanding BERT because they provide context on the underlying architecture and the broader field in which BERT operates.

What are the Step-by-step Instructions for Implementing BERT?

To implement BERT, follow these steps:

- Choose a pre-trained BERT model.

- Fine-tune the model on your specific dataset.

- Evaluate model performance using relevant metrics.

- Deploy the model for your application.

These steps ensure proper integration and utilization of BERT in your NLP projects.
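
For the evaluation step, a common choice for classification tasks is to report precision, recall, and F1 on a held-out set. The following is a minimal sketch under the assumption of a binary task with labels 0 and 1; the function name `precision_recall_f1` and the example labels are made up for illustration, and in practice an existing metrics library would normally be used instead.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return precision, recall, f1


# Hypothetical gold labels and predictions from a fine-tuned sentiment classifier.
gold = [1, 0, 1, 1, 0, 1]
predicted = [1, 0, 0, 1, 1, 1]
print(precision_recall_f1(gold, predicted))  # (0.75, 0.75, 0.75)
```

Tracking these numbers on a validation set after each epoch gives a simple signal for when to stop fine-tuning or adjust hyperparameters.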

Frequently Asked Questions

What is BERT?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a model designed for natural language processing, enabling better understanding of context in language.

How does BERT improve language understanding?

BERT uses a bidirectional approach to read text. It considers both left and right context, which helps in grasping the full meaning of words.

What are the main benefits of using BERT?

BERT provides better context and meaning in language tasks. It allows for more accurate predictions, improving performance in various NLP applications.

What types of NLP tasks can BERT be applied to?

BERT can be used for a variety of tasks. Examples include sentiment analysis, question answering, and named entity recognition.

What features make BERT unique?

BERT’s architecture is distinctive in that it uses only the encoder side of the Transformer, reads context in both directions, and is pre-trained on vast amounts of unlabeled text before being fine-tuned.

How does BERT handle different languages?

BERT can handle multiple languages. A multilingual version pre-trained on text in over 100 languages is available and can be fine-tuned for specific language tasks.

What is the significance of BERT in AI research?

BERT has influenced AI research significantly. It sets new benchmarks for various NLP tasks, encouraging further advancements in language models.