What is Attention Mechanism?

An Attention Mechanism is a technique in neural networks that lets a model weight different parts of its input by relevance, so the most useful information contributes most to each output. Because the weights are computed anew for every input, attention helps AI models use context and make better-informed predictions.

How does the Attention Mechanism Operate or Function?

The Attention Mechanism works by taking the element currently being processed (the query) and computing a relevance score against every input element (the keys), typically with a dot product or a small neural network. A softmax turns these scores into weights that are positive and sum to 1. The output is the weighted sum of the input elements' value vectors, so highly relevant elements dominate the result.
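The weighting described above is most commonly computed as scaled dot-product attention, the variant used in Transformers. Each key is scored by its dot product with the query, divided by the square root of the vector dimension. The scores pass through a softmax, and the output is the weighted sum of the value vectors. The sketch below shows this for a single query in plain Python. The vectors are made up for illustration; a real model would obtain queries, keys, and values from learned projections.

```python
import math


def softmax(scores):
    # Subtract the max score before exponentiating, for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # 1. Score each key by its dot product with the query, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # 2. Softmax turns the scores into positive weights that sum to 1.
    weights = softmax(scores)
    # 3. The output is the weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


# Toy 2-d vectors, chosen by hand for illustration.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

output, weights = attend(query, keys, values)
print("weights:", [round(w, 3) for w in weights])
print("output: ", [round(o, 3) for o in output])
```

The query `[1, 0]` has the same dot product with the first and third keys, so those two receive equal and larger weights, and the output leans toward their values.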

Here are the key functionalities of the Attention Mechanism:

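- Dynamic Focus: The attention weights are recomputed for every input and every position, so the same word can draw on different context in different sentences.

- Contextual Awareness: Each output mixes in information from related elements, so ambiguous items, such as a word with several meanings, can be resolved from their context.

- Improved Performance: Giving the model direct access to every input element, rather than a single fixed-length summary, helps it learn relationships that span the whole input.

- Scalability: Self-attention relates all positions in a single step rather than walking through the sequence, so it parallelizes well on modern hardware and makes training on large datasets practical, although its cost grows quadratically with input length.

- Enhanced Interpretability: The attention weights can be inspected to see which inputs the model drew on for a given output, although they are not a complete explanation of its decision.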
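The dynamic-focus and interpretability points above can be illustrated with a small sketch. The same three keys are scored against two different queries, and the resulting weights are read out to see which token each query focuses on. The token names and 2-d vectors are hypothetical, and treating the largest weight as the "focus" is a simplification.

```python
import math


def attention_weights(query, keys):
    """Scaled dot-product scores followed by a numerically stable softmax."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 2-d key vectors for three input tokens.
tokens = ["bank", "river", "money"]
keys = [[1.0, 1.0], [2.0, -1.0], [-1.0, 2.0]]

# Two different queries scored against the same keys.
queries = {"query A": [2.0, -1.0], "query B": [-1.0, 2.0]}
focus = {}
for name, query in queries.items():
    weights = attention_weights(query, keys)
    # The largest weight marks the token this query attends to most.
    focus[name] = tokens[weights.index(max(weights))]
    print(name, [round(w, 3) for w in weights], "->", focus[name])
```

Query A attends mostly to "river" and query B mostly to "money", even though the keys never change. Printing the weights like this is the basic way attention maps are inspected.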

Overall, the Attention Mechanism is pivotal in advancing the capabilities of AI applications, particularly in natural language processing and computer vision.

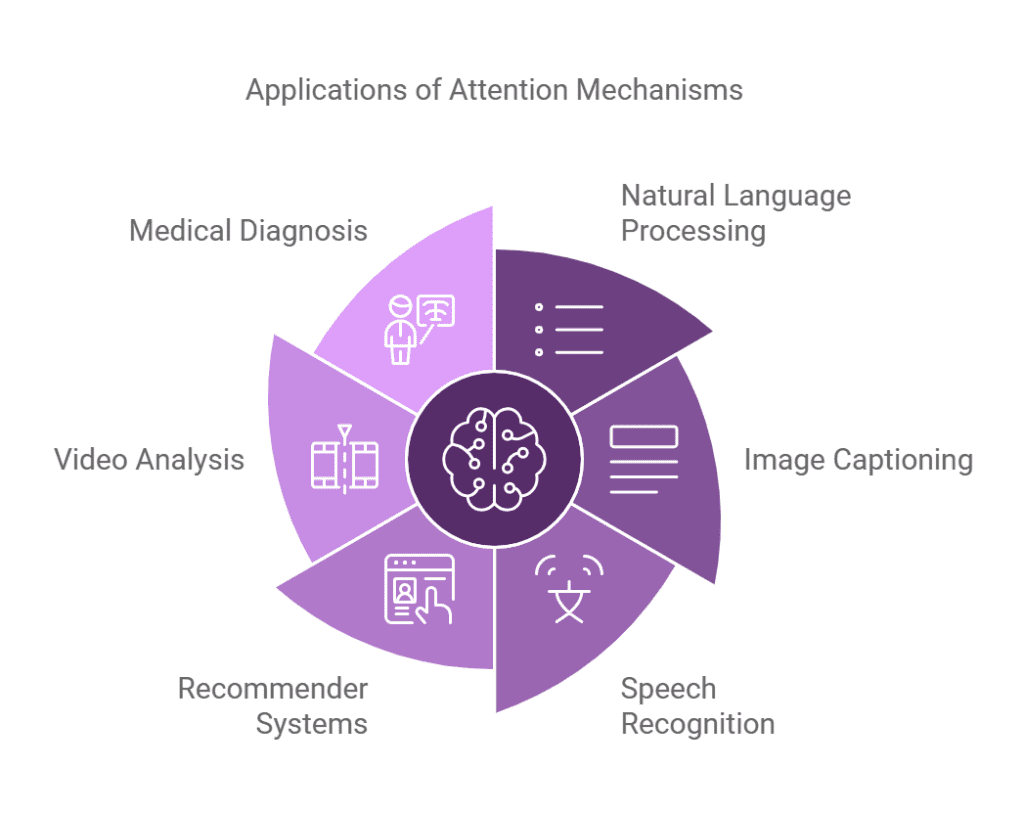

Common Uses and Applications of Attention Mechanism

Attention Mechanisms are pivotal in various AI and machine learning applications, enhancing model performance and enabling more sophisticated data processing. Here are some key applications:

- Natural Language Processing (NLP): Used in translation, summarization, and sentiment analysis, attention allows models to focus on relevant parts of text, improving accuracy and context understanding.

- Image Captioning: Attention mechanisms help neural networks focus on specific areas of an image, generating more accurate and contextually relevant captions.

- Speech Recognition: By aligning each output character or word with the relevant segment of audio, attention improves the accuracy of transcribing spoken language into text.

- Recommender Systems: Attention can be applied to understand user preferences better, thereby providing more personalized recommendations.

- Video Analysis: It allows models to focus on important frames or segments, improving tasks like action recognition and event detection.

- Medical Diagnosis: Attention mechanisms can highlight critical features in medical images, aiding in more accurate diagnoses and treatment plans.

What are the Advantages of Attention Mechanism?

Attention mechanisms are crucial in enhancing the performance of neural networks, especially in tasks involving sequential data. Here are the key benefits of implementing attention mechanisms in AI applications:

- Improved Performance: Attention layers allow models to focus on relevant parts of the input data, leading to better performance in tasks like natural language processing and computer vision.

- Contextual Understanding: They enhance the model’s ability to understand context, making it more effective in interpreting complex data relationships.

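- Efficiency: Self-attention processes all positions of a sequence in parallel instead of one step at a time as an RNN does, which shortens training on modern hardware. It does not reduce the total amount of computation: standard attention still compares every input element with every other (see the limitations below).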

- Interpretability: These mechanisms provide insights into model decisions, allowing researchers to understand which parts of the data influence outcomes.

- Versatility: Attention can be applied across various domains, including text generation, translation, and image captioning, making it a valuable tool for AI researchers and engineers.

- Dynamic Focus: Models adjust their focus to each new input, which makes them adaptable to varied data.

Are there any Drawbacks or Limitations Associated with Attention Mechanism?

While the Attention Mechanism offers many benefits, it also has limitations such as:

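- Increased computational complexity: self-attention compares every position with every other position, so time and memory grow with the square of the input length, which makes long sequences expensive to train on and to process.

- Potential overfitting on small datasets: attention-based models such as Transformers build in few assumptions about the structure of their input and often have many parameters, so they can overfit small datasets without regularization or pretraining.

- Difficulty in interpreting attention scores: a high attention weight does not necessarily mean that an input caused the prediction, so attention maps can give a misleading picture of the model's reasoning.

These challenges can affect cost, model performance, and interpretability.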
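The computational-complexity drawback comes largely from self-attention comparing every position in a sequence with every other position, which produces an n × n score matrix per attention head. The hypothetical helper below estimates the size of that matrix. It assumes 4-byte (float32) scores and ignores batch size, activations, and model weights.

```python
def score_matrix_bytes(seq_len, num_heads=1, bytes_per_score=4):
    """Memory for the attention score matrices of one self-attention layer.

    Each head compares every position with every other position, producing a
    seq_len x seq_len matrix. bytes_per_score=4 assumes float32 scores; batch
    size, activations and weights are ignored.
    """
    return num_heads * seq_len * seq_len * bytes_per_score


for n in (512, 1024, 2048, 4096):
    mib = score_matrix_bytes(n) / 2**20
    print(f"seq_len={n:5d}: {n * n:>12,d} scores, {mib:5.0f} MiB per head")
```

Each doubling of the sequence length quadruples the count, from 1 MiB per head at 512 positions to 64 MiB at 4,096. Memory-efficient attention kernels can avoid holding the whole matrix at once, but the number of score computations still grows quadratically.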

Can You Provide Real-life Examples of Attention Mechanism in Action?

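For example, Google's Neural Machine Translation system (GNMT), introduced in Google Translate in 2016, used attention to connect its encoder and decoder: as it generated each word of the translation, the decoder weighted the words of the source sentence by relevance. This lets the model focus on the source words that matter for each output word, resulting in more accurate translations.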

How does Attention Mechanism Compare to Similar Concepts or Technologies?

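Compared with RNNs (Recurrent Neural Networks), self-attention, the form of attention used in Transformers, processes all positions of a sequence in parallel rather than one step at a time. An RNN carries information forward through a hidden state, so a signal from an early input must pass through every intermediate step and tends to fade; self-attention connects any two positions directly, which makes it more effective at capturing long-range dependencies and contextual relationships. Attention is not exclusive to non-recurrent models, though: it was first widely used on top of RNN encoder-decoder models.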
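The difference in processing order can be sketched with scalar toy models, a simplification made to keep the example short. The RNN must compute its hidden states one after another, while self-attention computes every output directly from the whole sequence. The names `rnn` and `self_attention` are illustrative, the RNN weights are arbitrary, and the attention omits the learned query, key, and value projections a real layer would use.

```python
import math


def rnn(xs, w=0.5, u=1.0):
    """Toy scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t)."""
    h, states = 0.0, []
    for x in xs:
        # Each step needs the previous hidden state, so steps run in order.
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states


def self_attention(xs):
    """Toy scalar self-attention with query = key = value = x (no projections)."""
    def output_at(i):
        scores = [xs[i] * xj for xj in xs]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        return sum(e / total * xj for e, xj in zip(exps, xs))

    # Every position reads the whole sequence directly; the calls below do not
    # depend on one another, so they could run in parallel.
    return [output_at(i) for i in range(len(xs))]


seq = [0.2, -1.0, 0.7, 1.5]
print("RNN states:      ", [round(h, 3) for h in rnn(seq)])
print("attention output:", [round(o, 3) for o in self_attention(seq)])
```

In `rnn`, the first input reaches the last hidden state only through three further updates; in `self_attention`, it contributes to every output directly, which is why attention handles long-range dependencies more easily.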

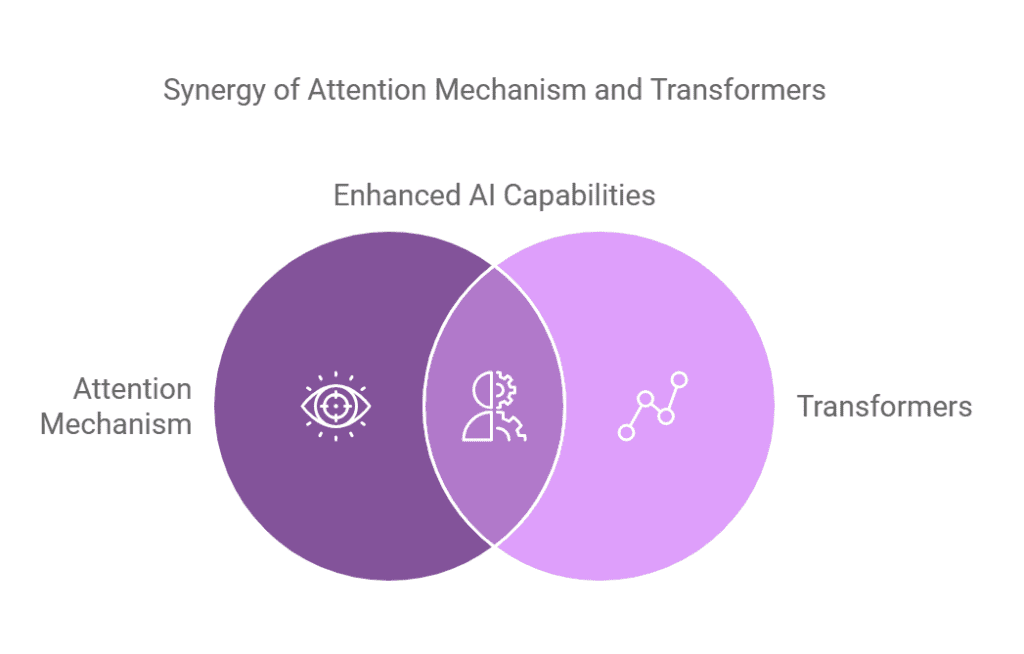

What are the Expected Future Trends for Attention Mechanism?

Attention is already the core of the Transformer architecture, which underlies most current large language models. Expected directions include more efficient attention variants that reduce the quadratic cost for long inputs, and wider use in multi-modal models that combine text, images, and audio, with the aim of better contextual understanding in AI applications.

What are the Best Practices for Using Attention Mechanism Effectively?

To use the Attention Mechanism effectively, it is recommended to:

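- Choose the architecture to suit the task, for example self-attention for modeling a single sequence and encoder-decoder (cross-) attention for sequence-to-sequence tasks such as translation.

- Regularize models, for example with dropout on the attention weights, to reduce overfitting.

- Experiment with different attention types, such as dot-product, additive, or multi-head attention, to find the best fit.

Following these guidelines tends to improve performance and the accuracy of model outputs.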
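When experimenting with attention types, it helps to see that they differ mainly in how a query is scored against each key. The sketch compares plain dot-product scoring, scaled dot-product scoring, and additive (Bahdanau-style) scoring, v^T tanh(W_q q + W_k k). The matrices `W_Q`, `W_K`, and `V` are arbitrary placeholders that a real model would learn.

```python
import math


def dot_score(q, k):
    return sum(a * b for a, b in zip(q, k))


def scaled_dot_score(q, k):
    # Dividing by sqrt(d) keeps scores from growing with the vector dimension.
    return dot_score(q, k) / math.sqrt(len(q))


def matvec(m, x):
    return [sum(a * b for a, b in zip(row, x)) for row in m]


# Additive (Bahdanau-style) scoring: v^T tanh(W_q q + W_k k).
# W_Q, W_K and V are arbitrary placeholders; a real model learns them.
W_Q = [[0.5, -0.2], [0.1, 0.4]]
W_K = [[0.3, 0.8], [-0.6, 0.2]]
V = [1.0, -1.0]


def additive_score(q, k):
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W_Q, q), matvec(W_K, k))]
    return dot_score(V, hidden)


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


query = [1.0, 0.5]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
for score_fn in (dot_score, scaled_dot_score, additive_score):
    weights = softmax([score_fn(query, k) for k in keys])
    print(f"{score_fn.__name__:17s}", [round(w, 3) for w in weights])
```

Scaling the dot products by 1/√d flattens the weight distribution; without it, large dot products in high dimensions can push the softmax toward a nearly one-hot output.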

Are there Detailed Case Studies Demonstrating the Successful Implementation of Attention Mechanism?

One notable case study is BERT, a language model that Google introduced in 2018, built from Transformer self-attention layers. Its published results showed:

- State-of-the-art accuracy, at the time, on natural language understanding benchmarks such as GLUE and SQuAD.

- Significant advances in sentiment analysis, which GLUE measures through its SST-2 task.

This highlights the benefits of attention-based models in real-world applications.

What Related Terms are Important to Understand along with Attention Mechanism?

Related terms include Neural Networks, which provide the framework in which attention layers operate, and Transformers, an architecture built primarily from attention layers. Both shape how attention affects model performance and versatility.

What are the Step-by-step Instructions for Implementing Attention Mechanism?

To implement the Attention Mechanism, follow these steps:

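- Define your model architecture, including the attention layers and their query, key, and value projections.

- Prepare your dataset so that each input keeps the context the model needs, for example full sentences rather than isolated words.

- Train your model, monitoring the loss and, optionally, the attention weights to check that they look sensible.

- Evaluate the model's performance on held-out test data.

These steps give a structured approach to implementation.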
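The steps above can be made concrete with a minimal, untrained single-head self-attention layer. It projects each input vector to a query, key, and value, computes softmax(QKᵀ/√d_k)V, and stores the attention weights so they can be monitored. The class name `SelfAttention` and its parameters are hypothetical, the weights are random rather than learned, and a practical implementation would use a tensor library and add multiple heads, masking, and a training loop.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise softmax, shifting by each row's max for numerical stability."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


class SelfAttention:
    """Single-head self-attention layer: softmax(Q K^T / sqrt(d_k)) V.

    Projection weights are randomly initialised for illustration only;
    training them is outside the scope of this sketch.
    """

    def __init__(self, d_model, d_k, seed=0):
        rng = random.Random(seed)

        def init():
            # d_model x d_k projection matrix with small random entries.
            return [[rng.gauss(0.0, d_model ** -0.5) for _ in range(d_k)]
                    for _ in range(d_model)]

        self.w_q, self.w_k, self.w_v = init(), init(), init()
        self.d_k = d_k
        self.attn = None  # last attention weights, kept for monitoring

    def __call__(self, x):
        # x: n token vectors, each of length d_model.
        q, k, v = matmul(x, self.w_q), matmul(x, self.w_k), matmul(x, self.w_v)
        raw = matmul(q, transpose(k))                     # n x n similarity scores
        scores = [[s / math.sqrt(self.d_k) for s in row] for row in raw]
        self.attn = softmax_rows(scores)                  # each row sums to 1
        return matmul(self.attn, v)                       # n x d_k outputs


layer = SelfAttention(d_model=4, d_k=3)
x = [[1.0, 0.0, 0.5, -0.5],
     [0.0, 1.0, -1.0, 0.2],
     [0.3, 0.3, 0.3, 0.3]]
y = layer(x)
for row in layer.attn:
    print([round(w, 3) for w in row])
```

Keeping the weights on the layer (`layer.attn`) is one simple way to support the monitoring step; each row shows how much one position attends to every position.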

Frequently Asked Questions

What is an attention mechanism in neural networks?

An attention mechanism is a technique that allows models to focus on specific parts of the input data.

- It helps in prioritizing relevant information.

- It helps the model relate distant or loosely connected parts of complex inputs.

How do attention layers improve model performance?

Attention layers help models concentrate on important features.

- They reduce the impact of irrelevant data.

- They enhance the model’s ability to learn from context.

What are the benefits of using contextual attention?

Contextual attention provides a deeper understanding of data relationships.

- It allows for better decision-making in AI applications.

- It improves accuracy in tasks like translation and image recognition.

Can attention mechanisms be used in all types of neural networks?

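Not every architecture, but many: attention mechanisms can be added to a wide range of neural network architectures.

- They are commonly combined with RNNs (for example in encoder-decoder translation models) and CNNs (for example channel or spatial attention in vision models).

- Transformers are built primarily from attention layers.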

How do attention mechanisms relate to sequence data?

Attention mechanisms excel in handling sequence data by focusing on relevant time steps.

- They let models look back directly at important earlier information instead of relying only on what a hidden state carries forward.

- They improve the processing of long sequences.
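For sequence data processed left to right, such as text generation (an assumption; the answer above does not name a setting), attention is commonly restricted to the current and earlier time steps with a causal mask. The sketch applies such a mask to a hypothetical 4 × 4 score matrix before the softmax, so future positions receive a weight of exactly zero. The function name `causal_attention_weights` is illustrative.

```python
import math


def causal_attention_weights(scores):
    """Apply a causal mask to an n x n score matrix, then softmax each row.

    Row t may only use columns 0..t, so position t attends to itself and
    earlier time steps, never to future ones.
    """
    weights = []
    for t, row in enumerate(scores):
        visible = row[: t + 1]  # mask out positions after t
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (len(row) - t - 1))
    return weights


# Hypothetical raw scores for a 4-step sequence (e.g. Q K^T / sqrt(d)).
scores = [
    [0.1, 2.0, 0.3, 1.0],
    [0.5, 0.2, 1.5, 0.0],
    [1.2, 0.4, 0.3, 2.2],
    [0.0, 0.0, 0.0, 0.0],
]
w = causal_attention_weights(scores)
for row in w:
    print([round(x, 3) for x in row])
```

The first position can only attend to itself, so its row is `[1.0, 0.0, 0.0, 0.0]`; the last row has equal scores and spreads its weight evenly over all four steps.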

What role does attention play in natural language processing?

In NLP, attention mechanisms are critical for understanding context.

- They help in tasks like text summarization and translation.

- They improve the model’s ability to capture semantic meaning.

Are there different types of attention mechanisms?

Yes, there are several types of attention mechanisms.

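- These include soft attention, which takes a weighted average over all inputs, and hard attention, which selects a single input.

- Soft attention is differentiable and trains with ordinary backpropagation, while hard attention makes a discrete choice and is usually trained with sampling-based methods. Other common variants include self-attention, cross-attention, and multi-head attention.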
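The difference between soft and hard attention can be sketched in a few lines. Soft attention returns a weighted average of all value vectors and is differentiable; hard attention returns one value vector, here sampled according to the weights. The function names and toy numbers are illustrative, and sampling is only one way to make the hard choice (taking the highest-weighted item is another).

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(weights, values):
    # Differentiable: a weighted average over *all* value vectors.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def hard_attention(weights, values, rng):
    # Picks exactly one value vector, sampled according to the weights.
    # This discrete choice is not differentiable, which is why hard attention
    # is usually trained with sampling-based estimators rather than plain backprop.
    index = rng.choices(range(len(values)), weights=weights, k=1)[0]
    return values[index]


scores = [2.0, 0.5, 1.0]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights = softmax(scores)
soft = soft_attention(weights, values)
hard = hard_attention(weights, values, random.Random(0))
print("soft:", [round(x, 3) for x in soft])
print("hard:", hard)
```

The soft result blends all three value vectors, while the hard result is always exactly one of them.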