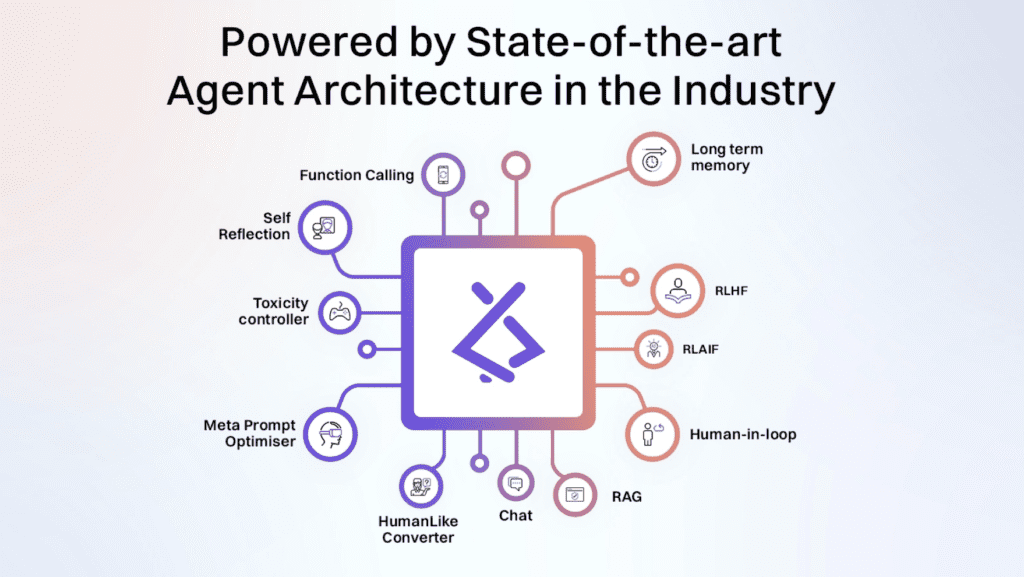

As the agent space continues to mature, there is a growing consensus within the community that large language models (LLMs) can be utilized beyond traditional chat interfaces and summarization tasks. Developers are now recognizing the potential of LLMs to build sophisticated agents capable of more complex functions, including inbuilt memory, reinforcement learning, retrieval-augmented generation (RAG) capabilities, and automated prompt optimization.

The journey of LAgMo began with our experimentation with GPT-4 at Lyzr. We developed one of the most advanced agent architectures, where agents are treated as first-class citizens, serving as the fundamental building blocks of an LLM application.

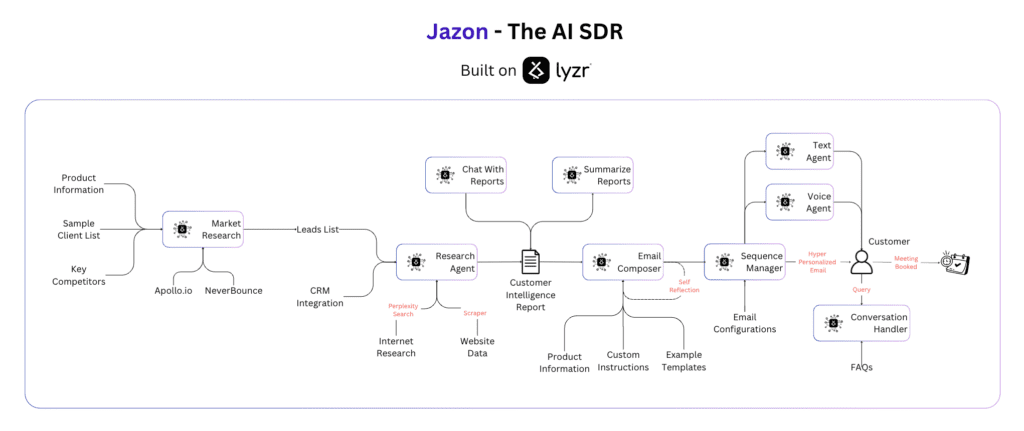

Multiple agents, each with a specific purpose, tools, and instructions, are assembled to work collaboratively on a task, improving recursively. The process resembles an assembly line, where tasks are performed sequentially, a common pattern in automated workflows and business processes. We launched Jazon – The AI SDR, an agentic automation template built using Lyzr Agents.

Our initial approach involved creating a master agent to orchestrate other agents, similar to what CrewAI is trying to do. However, we quickly realized that even with extensive instructions, examples, and a rigorous reinforcement learning cycle, this master agent could not reliably generate the agent flow: its error rates often exceeded 70%. Consequently, we shifted our focus from building an overarching administrative agent to fine-tuning a large language model capable of assembling and configuring these agents automatically. This new direction aimed to achieve up to 80% accuracy in agent flow orchestration.

Designing LAgMo required us to identify the critical components necessary for its success. The first step involved converting instructions into a series of tasks. For example, if tasked with automating tax calculation, the initial agent would need to break that instruction down into smaller, manageable tasks. A second agent then iterates on these tasks, determining whether they can be further subdivided or combined into cohesive units.
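The two passes described above can be sketched in a few lines of Python. This is only an illustration: `Task`, `decompose`, and `refine` are hypothetical names, and simple string rules stand in for the model calls that would do this work in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    description: str


def decompose(instruction: str) -> list[Task]:
    """First pass: split an instruction into candidate tasks.

    Hypothetical stand-in for a model call; it simply splits on ';'.
    """
    parts = (part.strip() for part in instruction.split(";"))
    return [Task(part) for part in parts if part]


def refine(tasks: list[Task], min_words: int = 3) -> list[Task]:
    """Second pass: subdivide compound tasks, then combine small fragments."""
    # Subdivide: "A and then B" becomes two separate tasks.
    subdivided: list[Task] = []
    for task in tasks:
        for part in task.description.split(" and then "):
            if part.strip():
                subdivided.append(Task(part.strip()))

    # Combine: a fragment shorter than min_words joins the previous task.
    combined: list[Task] = []
    for task in subdivided:
        if combined and len(task.description.split()) < min_words:
            previous = combined.pop()
            combined.append(Task(f"{previous.description}, {task.description}"))
        else:
            combined.append(task)
    return combined


instruction = (
    "collect income records; "
    "compute taxable income and then apply tax brackets; "
    "file return"
)
tasks = refine(decompose(instruction))
for task in tasks:
    print(task.description)
```

Here the compound middle task is subdivided, and the short "file return" fragment is combined with its neighbor, so three tasks come out of the second pass.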

In the third step, each final task was assigned to a dedicated agent. This involved creating an agent, defining its purpose, assigning the task, and providing the necessary tools and function-calling capabilities. Here, LAgMo plays a crucial role by creating prompts, personas, purposes, and instructions for each agent, ensuring they are well-equipped to perform their designated functions.
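As a rough sketch of what one such agent definition might contain, the Python below bundles a persona, purpose, instructions, and callable tools into a single record. `AgentSpec`, `build_agent`, and `lookup_bracket` are hypothetical names chosen for illustration, not the Lyzr API, and fixed templates stand in for the text a model like LAgMo would generate.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentSpec:
    name: str
    persona: str
    purpose: str
    instructions: str
    # Tool name -> callable the agent may invoke (function calling).
    tools: dict[str, Callable[..., object]] = field(default_factory=dict)

    def system_prompt(self) -> str:
        """Render the spec as a prompt for the underlying model."""
        tool_names = ", ".join(sorted(self.tools)) or "none"
        return (
            f"You are {self.persona}.\n"
            f"Purpose: {self.purpose}\n"
            f"Instructions: {self.instructions}\n"
            f"Tools: {tool_names}"
        )


def build_agent(
    task: str,
    tools: Optional[dict[str, Callable[..., object]]] = None,
) -> AgentSpec:
    """Create a dedicated agent for one task.

    Assumption: these fields are filled from fixed templates here; in the
    setup the post describes, a fine-tuned model would write them.
    """
    return AgentSpec(
        name="_".join(task.lower().split()) + "_agent",
        persona=f"a specialist responsible for one task: {task}",
        purpose=f"Complete the task '{task}' and return the result.",
        instructions="Use only the listed tools. Return structured output.",
        tools=dict(tools or {}),
    )


def lookup_bracket(income: float) -> float:
    """Hypothetical tool with made-up rates, for illustration only."""
    return 0.25 if income < 50_000 else 0.40


agent = build_agent("apply tax brackets", {"lookup_bracket": lookup_bracket})
print(agent.system_prompt())
```

Keeping the spec as plain data makes it straightforward to inspect, store, or regenerate an individual agent without touching the rest of the workflow.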

The fourth step involved assembling these agents into a cohesive workflow. We currently rely on a code block to execute this step. This step also included identifying and fixing errors recursively, particularly those related to input-output syntax. While agents excel at handling text, multi-modal tasks often present challenges, highlighting areas where further development is needed.
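One way to picture this assembly step is a sequential runner that checks each agent's inputs and outputs against a declared contract and retries a repair when they do not line up. The sketch below assumes agents exchange plain dictionaries. `Step`, `run_workflow`, `ContractError`, and the alias-based `rename_aliases` repair are all hypothetical, and the tax rate is made up.

```python
from dataclasses import dataclass
from typing import Callable


class ContractError(Exception):
    """Raised when a step's inputs or outputs do not match its contract."""


@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]
    requires: frozenset  # keys the step reads from the payload
    produces: frozenset  # keys the step must return


def _ensure_inputs(step, payload, repair, repairs_left):
    """Recursively repair the payload until the step's inputs are present."""
    missing = step.requires - set(payload)
    if not missing:
        return payload
    if repairs_left == 0:
        raise ContractError(f"{step.name} is missing {sorted(missing)}")
    return _ensure_inputs(step, repair(payload, missing), repair, repairs_left - 1)


def run_workflow(steps, payload, repair, max_repairs=2):
    """Run steps in order, passing the accumulated payload down the line."""
    payload = dict(payload)
    for step in steps:
        payload = _ensure_inputs(step, payload, repair, max_repairs)
        output = step.run(payload)
        missing_out = step.produces - set(output)
        if missing_out:
            raise ContractError(f"{step.name} did not produce {sorted(missing_out)}")
        payload.update(output)
    return payload


# Hypothetical repair: copy a value across when two steps disagree on a key name.
ALIASES = {"income": "gross_income"}


def rename_aliases(payload, missing):
    fixed = dict(payload)
    for key in missing:
        alias = ALIASES.get(key)
        if alias in fixed:
            fixed[key] = fixed[alias]
    return fixed


steps = [
    Step("parse", lambda p: {"gross_income": float(p["raw"])},
         frozenset({"raw"}), frozenset({"gross_income"})),
    Step("tax", lambda p: {"tax": p["income"] * 0.25},
         frozenset({"income"}), frozenset({"tax"})),
]
result = run_workflow(steps, {"raw": "1000"}, rename_aliases)
print(result["tax"])
```

The second step expects `income` while the first produces `gross_income`; the repair function resolves that mismatch before the step runs, and the runner gives up with a `ContractError` once the repair budget is spent.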

The final step was to evaluate the output to determine whether the agents successfully automated the workflow. This iterative process allows us to refine LAgMo continually, aiming for a high success rate in workflow execution.
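A minimal sketch of such an evaluation, assuming a workflow is any callable that maps an input dictionary to an output dictionary: run it over a set of test cases, count a crash as a failure, and compare the pass rate with the 80% target mentioned in this post. The `success_rate` helper, the toy `tax_workflow`, and the test cases are hypothetical.

```python
from typing import Callable, Iterable

TARGET_SUCCESS_RATE = 0.80  # the 80% goal stated in this post


def success_rate(
    workflow: Callable[[dict], dict],
    cases: Iterable[tuple],
    is_correct: Callable[[dict, object], bool],
) -> float:
    """Fraction of (inputs, expected) cases the workflow handles correctly."""
    cases = list(cases)
    if not cases:
        raise ValueError("at least one test case is required")
    passed = 0
    for inputs, expected in cases:
        try:
            output = workflow(inputs)
        except Exception:
            continue  # a crash counts as a failed run
        if is_correct(output, expected):
            passed += 1
    return passed / len(cases)


def tax_workflow(inputs: dict) -> dict:
    """Toy workflow with a made-up flat rate, for illustration only."""
    return {"tax": inputs["income"] * 0.25}


cases = [
    ({"income": 1000.0}, 250.0),
    ({"income": 2000.0}, 500.0),
    ({"income": 0.0}, 0.0),
    ({"income": 400.0}, 100.0),
    ({"salary": 400.0}, 100.0),  # wrong key: the workflow raises KeyError
]
rate = success_rate(tax_workflow, cases, lambda out, exp: out.get("tax") == exp)
print(f"success rate: {rate:.0%}, meets target: {rate >= TARGET_SUCCESS_RATE}")
```

Tracking this number across iterations gives a simple signal for whether changes to the agents or their assembly are actually improving workflow execution.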

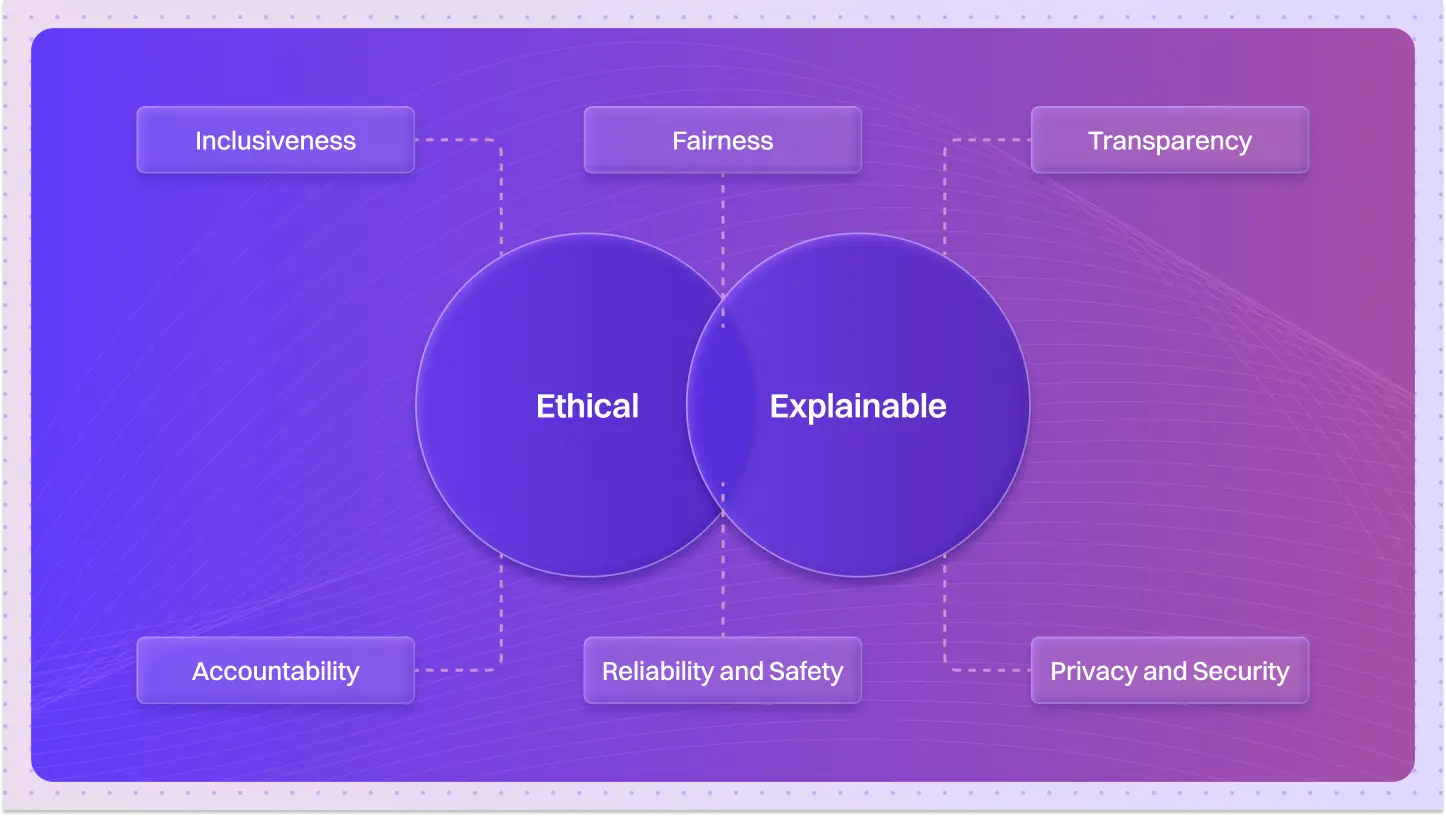

LAgMo’s core strength lies in its ability to convert tasks into a combination of purpose, instructions, and function-calling, all managed by specialized agents. This approach surpasses the capabilities of general-purpose LLMs, particularly in enabling features such as reinforcement learning from human feedback (RLHF), long-term memory, and self-reflection for specific tasks.

At Lyzr, we are committed to developing LAgMo as part of our broader agent studio. Our goal is to launch this powerful tool as both low-code (for developers) and no-code (for product and business users) once we achieve at least an 80% success rate in workflow execution. By leveraging LAgMo, we aim to revolutionize the way agents are built and deployed, providing a robust framework for automating complex tasks with unprecedented efficiency.

In summary, LAgMo represents a significant advancement in the field of artificial intelligence. By fine-tuning large language models to assemble and manage specialized agents, we can unlock new levels of automation and efficiency. This innovative approach addresses the limitations of traditional LLMs and paves the way for more sophisticated, capable agents. As we continue to refine and enhance LAgMo, we look forward to sharing this emerging concept with the developer community, driving the next wave of AI-powered solutions.

Book a demo to see Lyzr Agents in action.
