What is a ‘Loss Function’?

A loss function is a mathematical function that measures the difference between predicted outcomes and actual values in machine learning models. It quantifies the model’s error and guides the optimization process to improve performance during training.
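In symbols, a common way to write this uses notation assumed here for illustration rather than taken from a specific source. The training objective averages a per-example loss $\ell$ over $n$ input-target pairs $(x_i, y_i)$ for a model $f_\theta$ with parameters $\theta$. Training then searches for the parameters that make this average as small as possible. For MSE, the per-example loss is $\ell(\hat{y}, y) = (\hat{y} - y)^2$.

```latex
L(\theta) = \frac{1}{n} \sum_{i=1}^{n} \ell\big(f_\theta(x_i),\, y_i\big),
\qquad
\theta^{*} = \arg\min_{\theta} L(\theta)
```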

How does a loss function work in machine learning?

A loss function plays a central role in machine learning by:

- Quantifying Model Error: It calculates the difference between predicted and actual values.

- Guiding Optimization: The model adjusts its parameters using algorithms like gradient descent to minimize this error.

- Tailored Metrics: Different tasks call for different loss functions:

  - Regression: Mean Squared Error (MSE)

  - Classification: Cross-Entropy Loss

  - Maximum-margin classification (e.g., Support Vector Machines): Hinge Loss
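The three losses named above can be written in a few lines of plain Python. This is a minimal sketch, not a library implementation. It assumes 0/1 labels with predicted probabilities for cross-entropy, and -1/+1 labels with raw margin scores for hinge loss. The function names are illustrative.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Regression: average of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Classification: average negative log-likelihood for 0/1 labels."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log(0) is never evaluated
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

def hinge_loss(y_true, scores):
    """Maximum-margin classification: labels in {-1, +1}, raw scores."""
    return sum(max(0.0, 1 - t * s) for t, s in zip(y_true, scores)) / len(y_true)

print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))          # 0.25
print(round(binary_cross_entropy([1, 0], [0.9, 0.1]), 4))  # 0.1054
print(hinge_loss([1, -1], [2.0, 0.5]))                     # 0.75
```

Each function returns a single scalar. That scalar is the number an optimizer tries to drive down.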

Key Steps:

- Evaluate Loss: Compute a scalar value representing model error.

- Update Weights: Use optimization algorithms to reduce the loss.

- Improve Accuracy: Refine predictions by iterating the process.
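The three key steps can be sketched as a plain gradient-descent loop. The sketch fits a line $y = wx + b$ to hypothetical toy data using MSE. The learning rate and step count are assumptions chosen for this toy problem.

```python
# Hypothetical toy data generated exactly from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def mse(w, b):
    """Evaluate loss: one scalar summarizing the model's error."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(lr=0.05, steps=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(steps):
        history.append(mse(w, b))
        # Gradients of the MSE with respect to w and b
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Update weights: step against the gradient to reduce the loss
        w -= lr * grad_w
        b -= lr * grad_b
    # Iterating the loop drives the loss toward its minimum
    return w, b, history

w, b, history = train()
print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.1f}, last loss={history[-1]:.2e}")
```

On this data the loop should recover values close to w = 2 and b = 1. The recorded loss falls from its starting value of 33.0 toward zero.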

Benefits:

- Enables systematic improvement in predictions.

- Provides task-specific metrics for enhanced optimization.

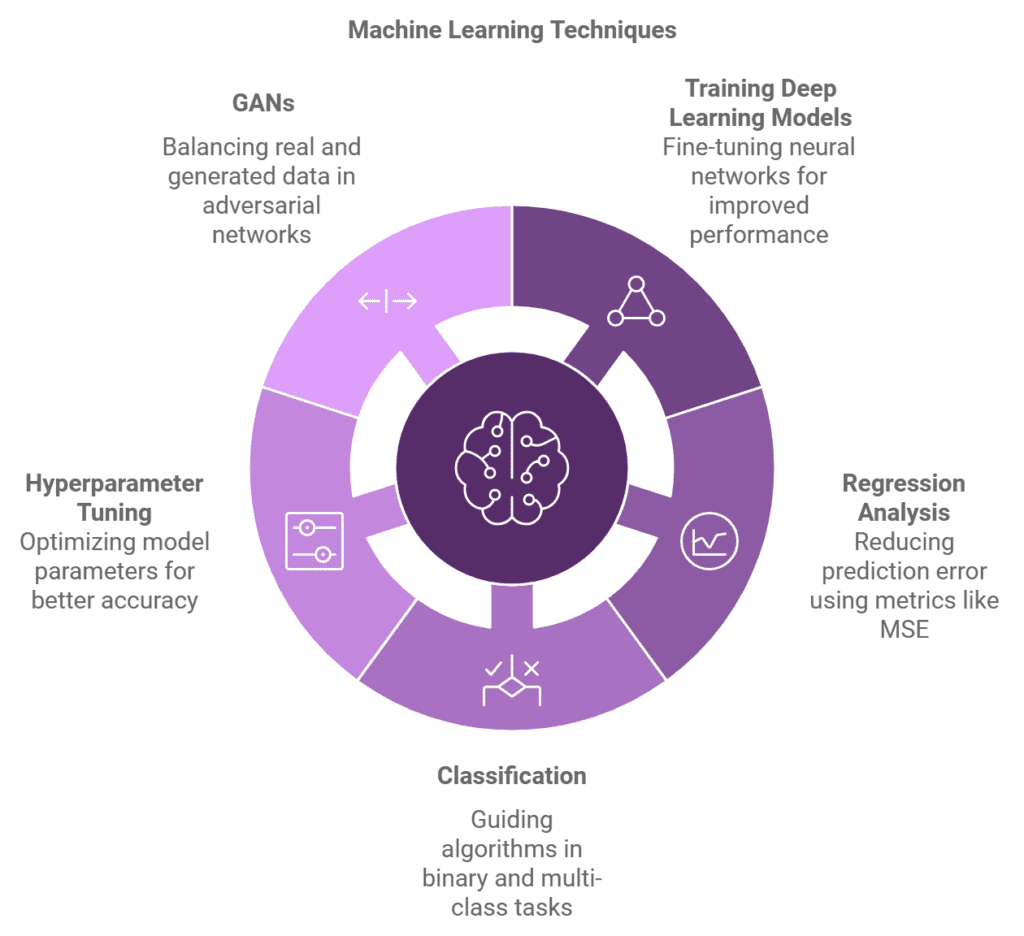

Common Uses and Applications

- Training Deep Learning Models: Neural networks are trained and fine-tuned by minimizing a loss function.

- Regression Analysis: Reduces prediction error using metrics like MSE.

- Classification: Guides algorithms in binary/multi-class prediction tasks.

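- Hyperparameter Tuning: Loss on a held-out validation set is used to compare hyperparameter settings such as the learning rate or model size.

- GANs: In generative adversarial networks, an adversarial loss trains a discriminator to tell real data from generated data while the generator learns to fool it.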
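For the GAN entry, a widely used formulation builds both losses from binary cross-entropy on the discriminator's output probabilities. This is a sketch of that common choice with the "non-saturating" generator loss. Other GAN losses exist, such as Wasserstein losses. The inputs here are assumed discriminator probabilities, not outputs of a real model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Cross-entropy with real samples labeled 1 and generated samples labeled 0.
    d_real, d_fake: discriminator probabilities that each sample is real."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when generated samples look real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that confidently separates real from fake has a low loss,
# which leaves the generator with a high loss (and a strong training signal).
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
print(generator_loss([0.1, 0.05]))
```

The two losses pull in opposite directions. This is the "balance" between real and generated data that training tries to reach.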

Advantages of Loss Functions

- Performance Assessment: Offers a quantifiable way to measure and improve accuracy.

- Optimization Guidance: Helps refine models by minimizing errors.

- Versatility: Tailored loss metrics for different use cases.

- Automation: Automates parameter adjustment through error feedback.

Drawbacks and Limitations

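- Overfitting Risk: Driving the training loss too low can make a model fit noise in the training data rather than patterns that generalize.

- Choice Sensitivity: Performance depends on the selected loss function; for example, MSE reacts far more strongly to outliers than Mean Absolute Error (MAE).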

- Computational Intensity: Complex loss functions can be resource-heavy.
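Choice sensitivity can be seen with the simplest possible model: a single constant prediction. Minimizing MSE pulls that constant to the mean of the data, while minimizing mean absolute error (MAE) pulls it to the median. A single outlier therefore affects the two very differently. The data below is hypothetical, and the grid search is a deliberately crude stand-in for a real optimizer.

```python
data = [1.0, 2.0, 3.0, 4.0, 100.0]  # hypothetical targets with one outlier

def mse_const(c):
    return sum((y - c) ** 2 for y in data) / len(data)

def mae_const(c):
    return sum(abs(y - c) for y in data) / len(data)

# Grid search over constant predictions 0.0, 0.1, ..., 100.0
candidates = [i / 10 for i in range(0, 1001)]
best_mse = min(candidates, key=mse_const)
best_mae = min(candidates, key=mae_const)
print(best_mse, best_mae)  # 22.0 3.0
```

The MSE-optimal prediction (22.0, the mean) is dragged toward the outlier. The MAE-optimal one (3.0, the median) is not.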

Real-Life Example

Google uses Cross-Entropy Loss to optimize its image recognition models. By reducing classification errors, the company improves accuracy in tasks like detecting objects and recognizing faces.

Comparison with Related Technologies

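Unlike error rates (the fraction of wrong predictions), loss functions provide a continuous measure of error magnitude. Because most loss functions are also differentiable, they enable finer, gradient-based optimization during training.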
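A small comparison makes the difference concrete. The two hypothetical sets of predicted probabilities below classify every example correctly, so their error rate is identical. Cross-entropy still ranks the confident model as better, and that gives training a signal to keep improving.

```python
import math

labels = [1, 1, 0, 0]
# Hypothetical predicted probabilities of class 1 from two models
barely_right = [0.55, 0.6, 0.45, 0.4]
confidently_right = [0.95, 0.9, 0.05, 0.1]

def accuracy(y, p):
    """Fraction of examples on the correct side of the 0.5 threshold."""
    return sum((pi >= 0.5) == (yi == 1) for yi, pi in zip(y, p)) / len(y)

def cross_entropy(y, p):
    """Average negative log-probability assigned to the true class."""
    return -sum(math.log(pi) if yi == 1 else math.log(1 - pi)
                for yi, pi in zip(y, p)) / len(y)

for name, p in [("barely_right", barely_right), ("confidently_right", confidently_right)]:
    print(name, accuracy(labels, p), round(cross_entropy(labels, p), 3))
```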

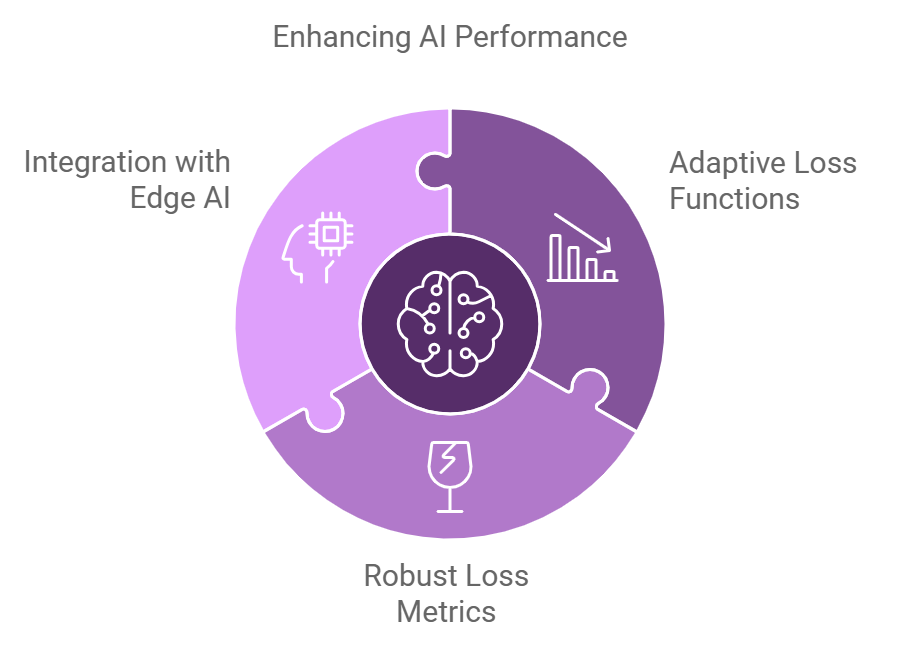

Future Trends

- Adaptive Loss Functions: Automatically adjust to evolving data.

- Robust Loss Metrics: Handle noise and outliers more effectively.

- Integration with Edge AI: Enhance performance in low-resource environments.
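An established example of the robustness that "Robust Loss Metrics" aims for is the Huber loss. It is quadratic for small residuals and linear for large ones, so an outlier's influence grows only linearly. The threshold `delta=1.0` is an assumed default for illustration.

```python
def huber(residual, delta=1.0):
    """Quadratic within +/- delta, linear beyond it."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)

def half_squared(residual):
    """Squared error on the same scale as Huber's quadratic region."""
    return 0.5 * residual * residual

for r in [0.5, -0.2, 10.0]:  # the last residual plays the role of an outlier
    print(r, half_squared(r), huber(r))
```

For the small residuals the two losses agree. For the outlier, the squared loss reaches 50.0 while Huber stays at 9.5.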

Best Practices

- Choose a loss function suited to the task (e.g., regression vs. classification).

- Monitor both training and validation loss; a validation loss that rises while training loss keeps falling is a classic sign of overfitting.

- Experiment with multiple functions to find the best fit for your dataset.
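One common way to act on monitored loss is early stopping. Validation loss is recorded after each epoch, and training stops once it has not improved for a set number of epochs. The function name, the `patience` parameter and the loss values below are all hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch with the lowest validation loss, stopping the scan
    once the loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Hypothetical validation losses: they bottom out at epoch 2, then rise
print(early_stopping_epoch([0.95, 0.70, 0.58, 0.61, 0.66, 0.72]))  # 2
```

In a real training run, the model weights saved at the returned epoch would be the ones kept.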

Case Study

A financial firm improved its credit risk model using MSE to minimize errors. This resulted in a 20% boost in predictive accuracy, helping the firm make better lending decisions.

Key Related Terms

- Gradient Descent: Minimizes the loss function by repeatedly updating parameters in the direction of its negative gradient.

- Regularization: Prevents overfitting by penalizing complex models.
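Regularization usually works by adding a penalty term to the loss itself. This sketch uses an L2 penalty, $\lambda \sum_j w_j^2$, on a hypothetical linear model. The penalty strength `lam=0.1` is an assumed value. Both weight vectors fit the data perfectly, so their MSE is zero, but the regularized loss prefers the one with smaller weights.

```python
def mse(weights, xs, ys):
    """Data loss for a linear model without bias: prediction = w . x."""
    preds = [sum(w * xi for w, xi in zip(weights, x)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

def l2_regularized_loss(weights, xs, ys, lam=0.1):
    """Data loss plus an L2 penalty that grows with the size of the weights."""
    penalty = lam * sum(w * w for w in weights)
    return mse(weights, xs, ys) + penalty

# Hypothetical data with two identical features, so many weight vectors fit
xs = [[1.0, 1.0], [2.0, 2.0]]
ys = [2.0, 4.0]
print(mse([1.0, 1.0], xs, ys), mse([3.0, -1.0], xs, ys))  # 0.0 0.0
print(l2_regularized_loss([1.0, 1.0], xs, ys))   # smaller penalty
print(l2_regularized_loss([3.0, -1.0], xs, ys))  # larger penalty
```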

Step-by-Step Implementation

- Define Task: Identify the problem type (regression/classification).

- Select Loss Function: Choose based on the task.

- Integrate into Model: Add to the training pipeline.

- Monitor Loss: Evaluate progress during training.

- Refine Model: Adjust parameters to minimize loss.
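The five steps can be traced in a minimal sketch of a binary classification task. The sketch uses logistic regression trained with binary cross-entropy. The data, learning rate and epoch count are hypothetical choices for a toy problem, not recommendations.

```python
import math

# 1. Define task: binary classification on hypothetical 1-D data
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 0, 1, 1, 1]

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

# 2. Select loss function: binary cross-entropy suits 0/1 labels
def bce(w, b):
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

# 3. Integrate into model: the loss drives the training loop
def fit(lr=0.5, epochs=200):
    w, b = 0.0, 0.0
    losses = []
    for _ in range(epochs):
        # Gradient of mean BCE: mean((p - y) * x) for w, mean(p - y) for b
        diffs = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        # 5. Refine model: adjust parameters to reduce the loss
        w -= lr * sum(d * x for d, x in zip(diffs, xs)) / len(xs)
        b -= lr * sum(diffs) / len(xs)
        # 4. Monitor loss after each update
        losses.append(bce(w, b))
    return w, b, losses

w, b, losses = fit()
print(f"loss after first epoch {losses[0]:.3f}, after last epoch {losses[-1]:.3f}")
```

Swapping the data and the loss function (for example, MSE for a regression task) changes steps 1 and 2. The rest of the loop keeps the same shape.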

Frequently Asked Questions

Q: What is a loss function?

A: It quantifies the difference between predicted and actual values to guide model optimization.

Q: What are common types?

- Regression: Mean Squared Error (MSE)

- Classification: Cross-Entropy Loss

Q: How does it affect training?

The gradient of the loss determines how the weights change at each training step, so the choice of loss shapes what the model learns to predict.

Q: Can I use multiple loss functions?

Yes, especially in multi-task learning, where per-task losses are commonly combined into a single weighted sum.
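A minimal sketch of combining losses is shown below. It assumes one hypothetical model with two output heads: a regression head for price trained with MSE, and a classification head for "will sell" trained with cross-entropy. The task names and weights are illustrative. In practice the weights are hyperparameters to tune.

```python
import math

def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def bce(y_true, p_pred):
    return -sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(y_true, p_pred)) / len(y_true)

def combined_loss(losses, weights):
    """Weighted sum of per-task losses; this single scalar is what gets minimized."""
    return sum(weights[task] * value for task, value in losses.items())

# Hypothetical outputs of one two-headed model on a batch of two examples
losses = {
    "price": mse([10.0, 12.0], [11.0, 12.0]),  # regression head
    "will_sell": bce([1, 0], [0.8, 0.3]),       # classification head
}
total = combined_loss(losses, {"price": 0.5, "will_sell": 1.0})
print(losses, total)
```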

Q: How do I choose the right one?

Match the loss function to the problem type and data characteristics.