What is AI Model Monitoring?

AI Model Monitoring refers to the continuous observation and evaluation of machine learning models to ensure they perform as intended. This process helps detect issues like performance degradation, data drift, or bias, enabling proactive adjustments and updates to maintain model accuracy and reliability.

How does AI Model Monitoring Operate Effectively?

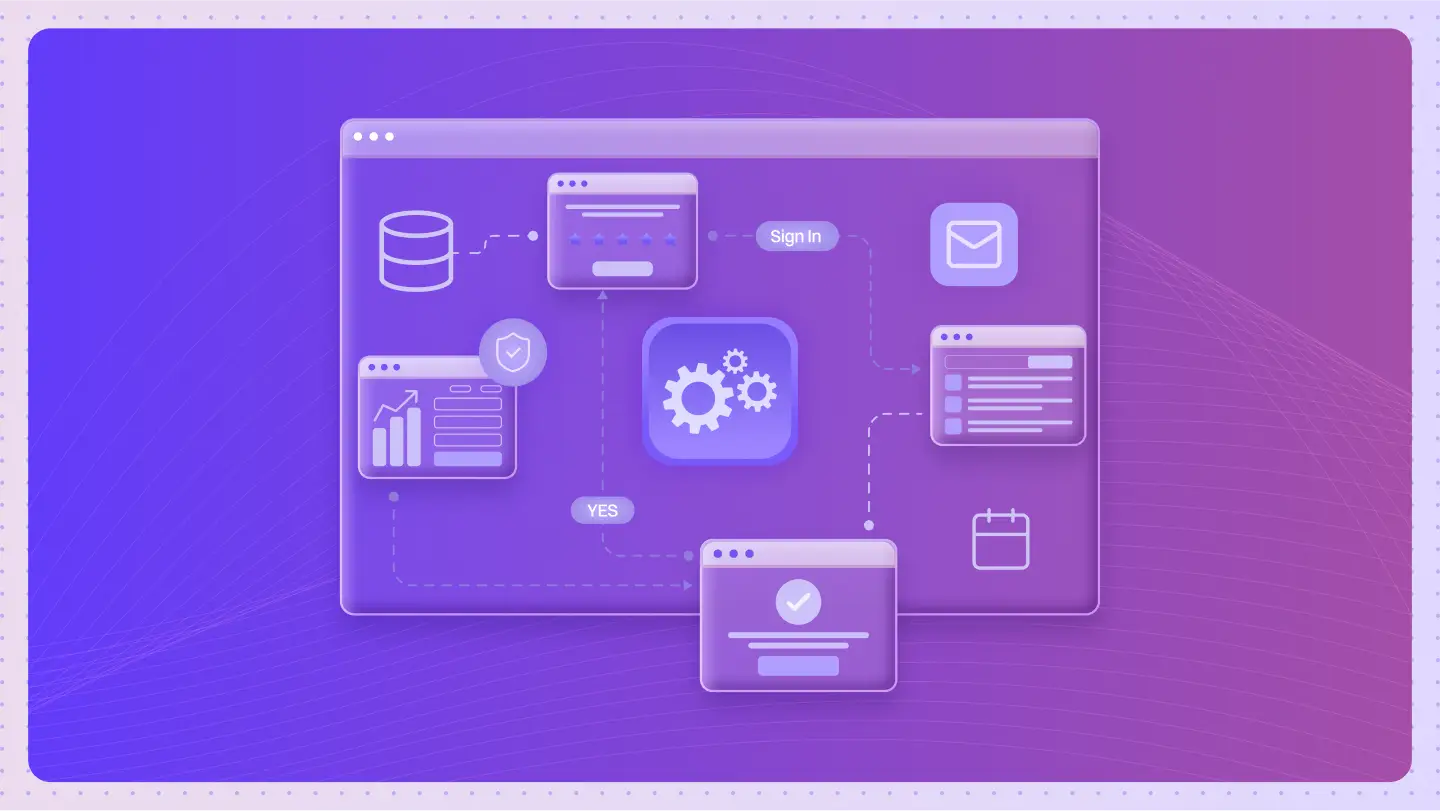

AI Model Monitoring functions through a structured and automated approach designed to maintain model performance over time. Key operational components include:

- Performance Metrics: Regular assessment of accuracy, precision, recall, F1 score, and other performance metrics to monitor model effectiveness.

- Data Drift Detection: Ongoing analysis of changes in data distributions that may affect model predictions.

- Real-time Monitoring Tools: Tools such as MLflow (metric logging and experiment tracking), Prometheus (metrics collection and alerting), and Grafana (dashboards) track and visualize performance metrics as they change.

- Alerts and Notifications: Automated systems trigger alerts when performance falls below predefined thresholds, allowing for quick intervention.

- Feedback Loops: Incorporating user feedback to fine-tune models based on real-world interactions.

- Retraining Strategies: Clear policies for when to retrain models based on performance metrics and data changes.

Together, these components help keep machine learning systems reliable and adaptable over the long term.
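The performance-metric and alerting components above can be sketched in a few lines of plain Python. This is a minimal illustration for a binary classifier, not a reference implementation: the names `Metrics`, `compute_metrics`, and `check_thresholds`, and the threshold values in the usage note, are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def compute_metrics(y_true, y_pred):
    """Compute binary classification metrics from 0/1 labels and predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    total = tp + tn + fp + fn
    # Guard every ratio against an empty denominator.
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return Metrics(accuracy, precision, recall, f1)


def check_thresholds(metrics, thresholds):
    """Return the names of metrics that fall below their minimum value."""
    return [name for name, minimum in thresholds.items()
            if getattr(metrics, name) < minimum]
```

For example, calling `check_thresholds(compute_metrics(labels, predictions), {"accuracy": 0.8, "recall": 0.7})` returns the list of metrics that need attention, which an alerting system could then forward to the team.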

Common Uses and Applications of AI Model Monitoring

- Performance Evaluation: Continuously monitoring model performance to ensure optimal functioning.

- Anomaly Detection: Identifying unexpected changes in model behavior for quick resolution.

- Bias Mitigation: Tracking model outputs for potential biases and ensuring ethical AI practices.

- Drift Detection: Monitoring changes in input data to detect and address data drift.

- Resource Optimization: Ensuring efficient use of computational resources through monitoring and scaling adjustments.

- Regulatory Compliance: Ensuring adherence to industry standards by documenting monitoring practices.

- Improving User Experience: Enhancing customer satisfaction by maintaining accurate and responsive AI systems.
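Drift detection in particular lends itself to a short example. One commonly used measure is the Population Stability Index (PSI), which compares how a numeric feature is distributed in a reference sample (for instance, the training data) and in recent production data. The sketch below is one possible approach, not the only one; the function name, the choice of ten equal-width bins, and the small floor for empty bins are all assumptions.

```python
import math


def population_stability_index(reference, current, bins=10):
    """PSI between a reference sample and a current sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if every reference value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range values into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))
```

A PSI of zero means the two binned distributions match. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the right cutoff depends on the feature and the application.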

Advantages of AI Model Monitoring

- Improved Performance: Early detection of performance issues allows for optimization and tuning.

- Enhanced Reliability: Continuous tracking ensures consistent model outputs over time.

- Proactive Issue Resolution: Identifies and addresses potential problems before they affect users.

- Data Drift Detection: Detects shifts in input data, helping maintain model relevance.

- Regulatory Compliance: Provides documented evidence that helps demonstrate AI models meet industry and legal standards.

- Informed Decision-Making: Offers insights into model behavior, aiding strategic and operational decisions.

- Cost Efficiency: Reduces costs by catching model failures early, before they become expensive to fix.

Drawbacks or Limitations Associated with AI Model Monitoring

- Resource Intensity: Continuous monitoring can be computationally expensive and resource-demanding.

- Complexity: Setting up and maintaining monitoring systems requires specialized knowledge and infrastructure.

- Data Drift: Monitoring detects drift but does not fix it; models still degrade as data changes and require frequent retraining or updates.

Real-life Examples of AI Model Monitoring

- Fraud Detection in Finance: A financial services company continuously monitors fraud detection models, allowing for rapid detection and adaptation to new fraud patterns. This ensures models remain accurate in identifying suspicious transactions.

- Healthcare Predictive Models: AI Model Monitoring is used to track the effectiveness of patient risk assessment models, improving predictive accuracy and resource allocation.

How does AI Model Monitoring Compare to Similar Technologies?

AI Model Monitoring differs from traditional model evaluation, which checks a model periodically, by tracking performance continuously and in real time. Issues surface sooner, and teams can adapt before reliability suffers.
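To make the contrast concrete, continuous tracking can be as simple as updating a metric every time a labelled prediction arrives, rather than waiting for a scheduled evaluation run. The sketch below keeps accuracy over a sliding window of recent predictions; the class name `RollingAccuracy` and the default window size are assumptions made for illustration.

```python
from collections import deque


class RollingAccuracy:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window=100):
        # A bounded deque discards the oldest outcome once the window is full.
        self._outcomes = deque(maxlen=window)

    def update(self, y_true, y_pred):
        """Record one prediction and return the updated rolling accuracy."""
        self._outcomes.append(1 if y_true == y_pred else 0)
        return self.value

    @property
    def value(self):
        """Current rolling accuracy, or None before any prediction is recorded."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)
```

Because the window only holds recent outcomes, a sudden drop in quality shows up within a few predictions instead of being averaged away by older results.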

Future Trends in AI Model Monitoring

- Advanced Analytics: Incorporating more sophisticated algorithms for better anomaly detection.

- Cloud Integration: Utilizing cloud platforms for scalability and remote monitoring.

- User-Friendly Dashboards: Enhancing the visibility of performance metrics with intuitive dashboards.

Best Practices for Effective AI Model Monitoring

- Define Clear Metrics: Ensure performance metrics align with business objectives.

- Automated Alerts: Implement alerts for critical performance drops.

- Regular Reviews: Schedule routine evaluations to assess model health.

- Cross-functional Collaboration: Engage data scientists, engineers, and business stakeholders for well-rounded insights.
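As an illustration of the automated-alerts practice, the sketch below raises an alert only after a metric stays below its minimum for several consecutive checks, which is one way to avoid paging the team over a single noisy reading. The `AlertRule` fields, the `should_alert` helper, and the default patience of three checks are assumptions for this example; the warning is written with Python's standard `logging` module as a stand-in for a real notification channel.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("model_monitoring")


@dataclass
class AlertRule:
    metric: str        # name of the metric, e.g. "f1"
    minimum: float     # lowest acceptable value
    patience: int = 3  # consecutive breaches required before alerting


def should_alert(rule, history):
    """Return True if the last `rule.patience` values are all below the minimum."""
    recent = history[-rule.patience:]
    breached = (len(recent) == rule.patience
                and all(value < rule.minimum for value in recent))
    if breached:
        logger.warning("%s below %.2f for %d consecutive checks",
                       rule.metric, rule.minimum, rule.patience)
    return breached
```

Here `history` is assumed to be a list of metric values ordered from oldest to newest, such as the output of each scheduled review.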

Step-by-step Instructions for Implementing AI Model Monitoring

- Identify Key Metrics: Choose metrics like accuracy, precision, recall, and F1 score based on model goals.

- Select Monitoring Tools: Use platforms such as MLflow for logging metrics, Prometheus for collecting them, and Grafana for real-time dashboards.

- Set up Alerts: Configure alert systems to notify teams of performance drops or anomalies.

- Schedule Model Reviews: Periodically review model performance to make necessary adjustments.

- Implement Feedback Loops: Use feedback from users or stakeholders to refine the model and the criteria used to evaluate it.

- Establish Retraining Policies: Define conditions for retraining models to ensure long-term effectiveness.
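The last step, a retraining policy, can be written down as explicit conditions so that the decision to retrain is repeatable rather than ad hoc. The sketch below is one hypothetical shape for such a policy: the `RetrainingPolicy` fields, their default values, and the `retraining_reasons` helper are all assumptions chosen for illustration and should be tuned to the model and business context.

```python
from dataclasses import dataclass


@dataclass
class RetrainingPolicy:
    min_f1: float = 0.80                # retrain if F1 drops below this
    max_drift_score: float = 0.25       # retrain if the drift score exceeds this
    max_days_since_training: int = 90   # retrain once the model is older than this


def retraining_reasons(policy, f1, drift_score, days_since_training):
    """Return the list of policy conditions that currently call for retraining."""
    reasons = []
    if f1 < policy.min_f1:
        reasons.append("performance")
    if drift_score > policy.max_drift_score:
        reasons.append("drift")
    if days_since_training > policy.max_days_since_training:
        reasons.append("age")
    return reasons
```

An empty list means no condition is met and the current model can stay in place; a non-empty list names which conditions triggered, which is useful to record alongside the retraining run.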