What is Explainable AI?

Explainable AI (XAI) refers to AI systems that provide clear insights into their decision-making processes. This transparency is crucial for building trust and understanding in AI applications, ensuring users can interpret and rely on AI outcomes.

How does Explainable AI Operate for Better Insights?

Explainable AI (XAI) works by exposing how an AI system arrives at its decisions. Its role can be broken down into several key aspects:

- Transparency: XAI models are designed to be interpretable, allowing users to understand how decisions are made.

- Trust: Explaining predictions lets users check whether a decision rests on sound reasoning, which builds justified trust in the AI system.

- Insight Generation: Applying XAI techniques such as LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations) helps derive meaningful insights from complex models, which can guide future decision-making.

- Regulatory Compliance: XAI assists organizations in adhering to regulations that require explainability in AI, fostering responsible AI usage.

- User Engagement: By involving stakeholders in the AI development process through explainability, organizations can enhance user experience and acceptance.

In summary, Explainable AI functions by making AI systems more transparent, interpretable, and trustworthy, ultimately leading to better insights and understanding of AI behavior.
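To make the idea behind a technique like LIME more concrete, here is a minimal sketch of a local surrogate explanation in plain Python. It is not the `lime` library and does not reproduce its API. The `black_box` function, the sampling scale, and the kernel width are all hypothetical choices made for illustration. The sketch perturbs one input, weights the perturbed samples by their closeness to it, and fits a weighted linear model whose coefficients act as the local explanation.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: a nonlinear score over two features."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 0.5 * x[1] ** 2)))


def solve_linear_system(a, b):
    """Solve a small dense system a @ w = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w


def local_surrogate(model, point, n_samples=500, scale=0.3, kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around `point`.

    Returns [intercept, coef_0, coef_1, ...]. Features are centered on `point`,
    so the intercept approximates the model output at `point` itself.
    """
    rng = random.Random(seed)
    d = len(point)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [p + o for p, o in zip(point, offset)]
        # Samples closer to the explained point get a larger weight.
        weight = math.exp(-sum(o * o for o in offset) / kernel_width ** 2)
        row = [1.0] + offset
        y = model(sample)
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    return solve_linear_system(xtx, xty)


coefs = local_surrogate(black_box, [0.0, 1.0])
print("intercept:", coefs[0])
print("local effect of feature 0:", coefs[1])
print("local effect of feature 1:", coefs[2])
```

Around the chosen point, the surrogate reports a positive effect for feature 0 and a smaller negative effect for feature 1. The coefficients describe the model only near that point, which is the main design trade-off of local explanations.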

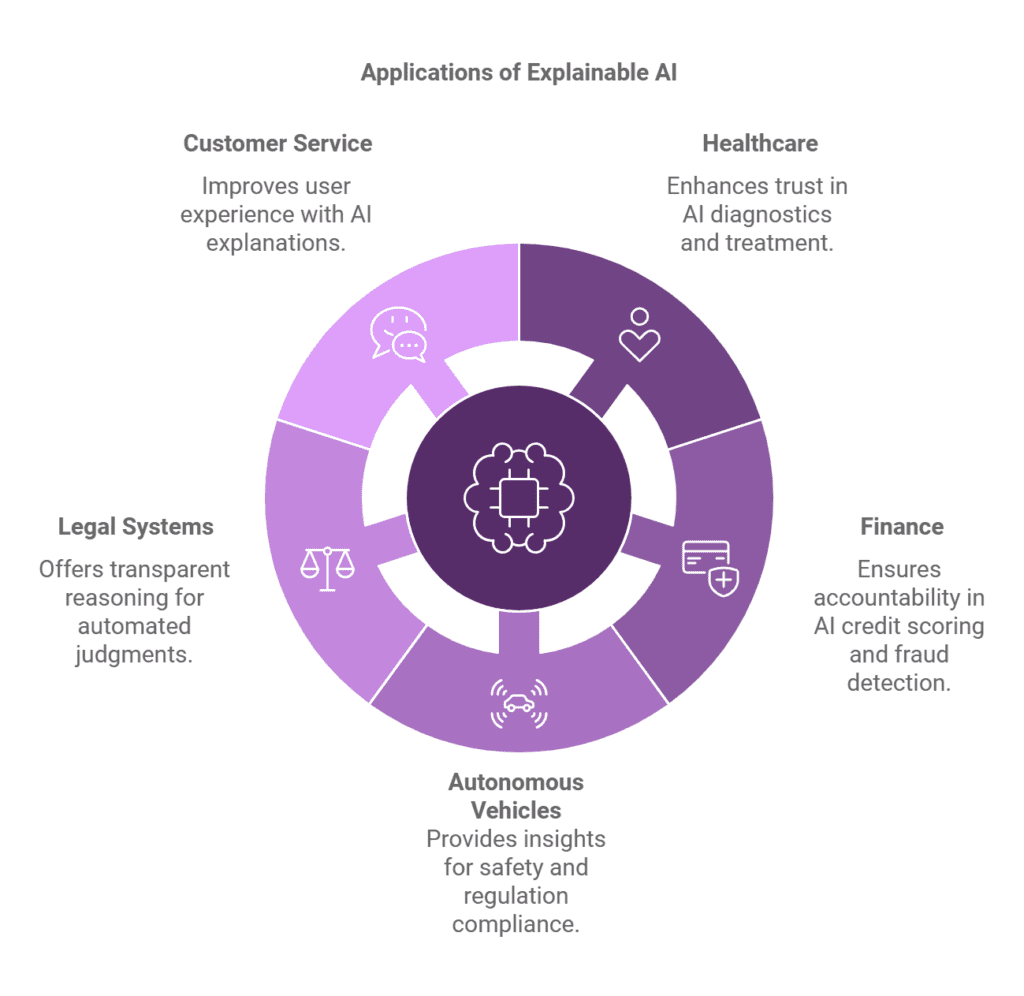

Common Uses and Applications of Explainable AI

Explainable AI (XAI) is applied wherever AI-driven decisions need to be understood, justified, or audited. Its main applications include:

- Healthcare: Enhances trust in AI-driven diagnostics and treatment recommendations by making model decisions clear to healthcare professionals and patients.

- Finance: Assists compliance officers in understanding AI-based credit scoring and fraud detection, ensuring accountability and reducing biases.

- Autonomous Vehicles: Provides insights into decision-making processes, ensuring safety and compliance with regulations.

- Legal Systems: Supports legal professionals by offering transparent reasoning behind AI-generated outcomes, increasing trust in automated judgments.

- Customer Service: Improves user experience by allowing businesses to explain AI-driven recommendations and decisions to clients, enhancing satisfaction.

Implementing explainable AI fosters better insights, understanding, and interpretability in AI systems, leading to more ethical and reliable technology.

Advantages of Explainable AI

Explainable AI (XAI) offers several benefits that improve the usability and trustworthiness of AI systems. Key advantages include:

- Transparency: Promotes understanding of AI decision-making processes, which builds trust among users.

- Accountability: Facilitates the identification of errors or biases in AI outputs, so that responsibility for AI-driven decisions can be assigned and problems corrected.

- Regulatory Compliance: Aids organizations in meeting compliance standards by providing clear explanations of AI behavior.

- User Engagement: Enhances user experience by allowing stakeholders to comprehend the rationale behind AI decisions.

- Improved Insights: Enables data scientists and AI developers to refine models based on interpretable outcomes, leading to better performance.

- Risk Mitigation: Reduces the potential for harmful consequences by allowing for a thorough analysis of AI actions.

- Broader Adoption: Encourages wider acceptance of AI technologies across various sectors due to increased trust and understanding.
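As one illustration of how interpretable outcomes can help developers refine a model, the sketch below computes permutation feature importance in plain Python. The `model` function and the synthetic data are hypothetical stand-ins for a trained model and a validation set. The idea is to shuffle one feature column at a time and measure how much the prediction error grows; a feature whose shuffling changes nothing is one the model does not rely on.

```python
import random


def model(row):
    """Hypothetical trained model: strong use of feature 0, weak use of 1, none of 2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(f, rows, targets):
    return sum((f(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(f, rows, targets, seed=0):
    """Return the increase in error caused by shuffling each feature column."""
    rng = random.Random(seed)
    base = mean_squared_error(f, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild the dataset with only column j shuffled.
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(f, shuffled, targets) - base)
    return importances


# Synthetic validation data (an assumption for this sketch).
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [model(r) for r in rows]

importances = permutation_importance(model, rows, targets)
for j, score in enumerate(importances):
    print(f"feature {j}: error increase {score:.3f}")
```

A developer reading this output could consider dropping feature 2, or investigate why a feature expected to matter shows little importance.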

Drawbacks or Limitations of Explainable AI

While Explainable AI offers many benefits, it also has limitations such as:

- Increased complexity in model design.

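- Potential trade-offs between performance and interpretability: simpler, more interpretable models can be less accurate on complex tasks.

- Difficulty in explaining certain black-box models, where post-hoc explanations only approximate the actual behavior of the model.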

Real-Life Examples of Explainable AI in Action

Financial institutions use Explainable AI to assess credit risk. By providing a clear rationale for each lending decision, they can demonstrate compliance with regulations and build trust with customers. This shows how transparency supports both customer relationships and regulatory adherence.
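A rationale for a lending decision could be produced along the following lines. This is a minimal sketch, assuming a toy logistic scoring model; the weights, the reference applicant, and the feature names are hypothetical and not taken from any real lender. Because the model is linear in log-odds, each feature's contribution relative to the reference applicant can be read off directly and the most negative ones reported as reasons.

```python
import math

# Hypothetical weights of a toy logistic credit model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0
# Hypothetical reference applicant used as the comparison point.
REFERENCE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 0.0}


def log_odds(applicant):
    return BIAS + sum(WEIGHTS[k] * applicant[k] for k in WEIGHTS)


def approval_probability(applicant):
    return 1.0 / (1.0 + math.exp(-log_odds(applicant)))


def contributions(applicant):
    """Per-feature change in log-odds relative to the reference applicant."""
    return {k: WEIGHTS[k] * (applicant[k] - REFERENCE[k]) for k in WEIGHTS}


def reason_codes(applicant, top_n=2):
    """Names of the features that lowered the score the most, worst first."""
    negative = sorted((v, k) for k, v in contributions(applicant).items() if v < 0)
    return [k for _, k in negative[:top_n]]


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "late_payments": 2.0}
print("approval probability:", round(approval_probability(applicant), 3))
print("main reasons for a lower score:", reason_codes(applicant))
```

The contributions add up exactly to the difference in log-odds between the applicant and the reference, so the stated reasons account for the whole gap rather than an approximation of it.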

How does Explainable AI Compare to Similar Technologies?

Compared to traditional AI models, Explainable AI differs in its focus on transparency and interpretability. While traditional models might prioritize accuracy, Explainable AI aims to provide insights into decision-making processes, making it more suitable for regulated industries.

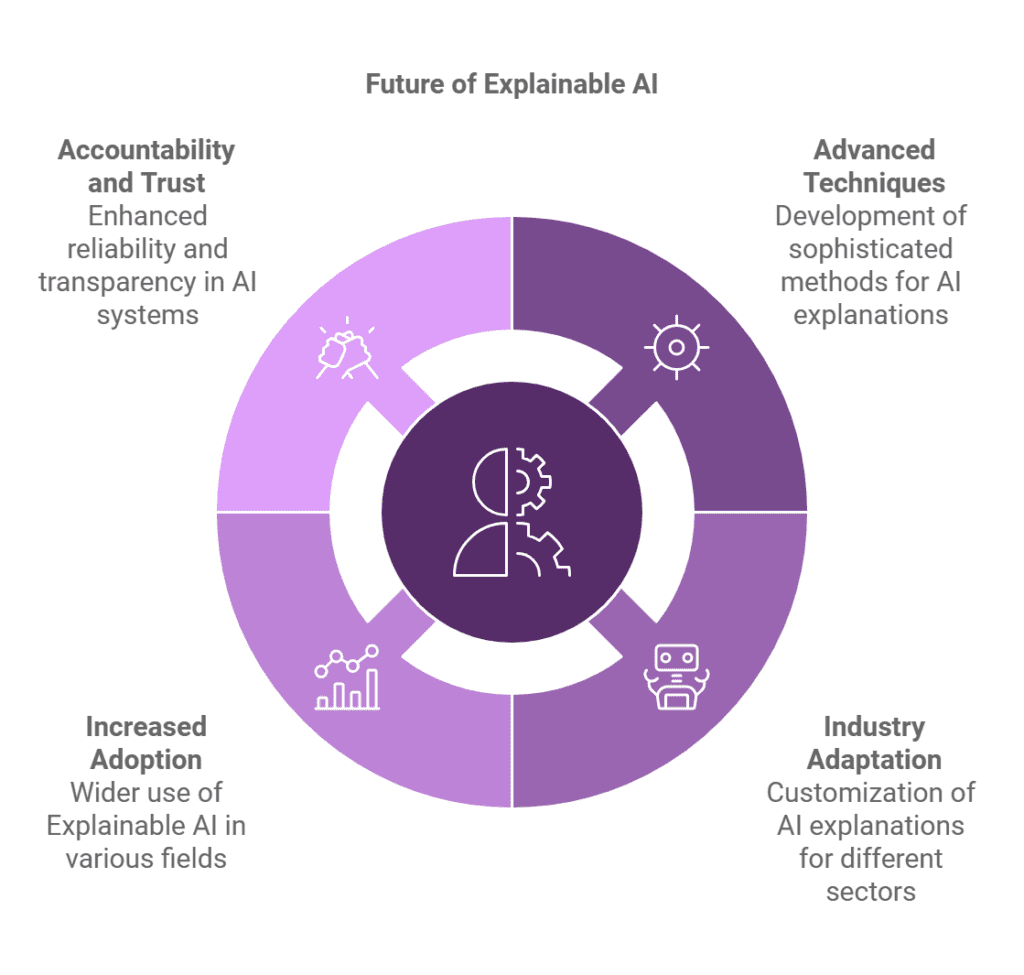

Future Trends for Explainable AI

In the future, Explainable AI is expected to evolve by incorporating more advanced techniques for generating explanations and adapting to various industry needs. These changes could lead to increased adoption in sectors like healthcare and finance, promoting accountability and trust.

Best Practices for Using Explainable AI Effectively

To use Explainable AI effectively, it is recommended to:

- Choose the right model based on the need for interpretability.

- Implement user-friendly visualization tools.

- Regularly update explanations based on model changes.

Following these guidelines helps stakeholders understand and trust AI decisions.

Case Studies Demonstrating Successful Implementation of Explainable AI

One case study involves a healthcare provider using Explainable AI to predict patient outcomes. They integrated model explanations to clarify treatment recommendations, which resulted in improved patient trust and satisfaction. The implementation of Explainable AI led to better decision-making and enhanced patient care.

Related Terms to Understand along with Explainable AI

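Related terms include interpretability and transparency. Interpretability is the degree to which a person can understand why a model produced a given output, while transparency is openness about how a model is built and how it operates. Both are central to Explainable AI because they emphasize clarity in AI systems and the need for models that users and stakeholders can understand.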

Step-by-Step Instructions for Implementing Explainable AI

Explainable AI refers to methods and techniques in AI that make the results understandable to humans. Here’s how to implement it:

- Choose Interpretable Models: Use models that are inherently interpretable, such as decision trees or linear models.

- Apply Post-Hoc Techniques: For more complex models, apply post-hoc explanation methods like LIME or SHAP to provide explanations for model predictions.

- User-Friendly Visualizations: Implement visual tools to make AI outcomes understandable for non-experts.

- Regular Updates: Continuously update the explanations as the models evolve so that they keep reflecting how the current model actually behaves.
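The post-hoc step above can be illustrated with Shapley values, the attribution concept that SHAP builds on. The sketch below is not the `shap` library; it computes exact Shapley values by enumerating every feature ordering, which is only practical for a handful of features. The `model` function and the all-zero baseline are hypothetical choices made for illustration.

```python
from itertools import permutations


def model(x):
    """Hypothetical black-box model with an interaction between features 1 and 2."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley attribution of f(x) - f(baseline) to each feature.

    For every ordering of the features, switch them one by one from the
    baseline value to the actual value and record each marginal change.
    The Shapley value of a feature is its average marginal change.
    """
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = f(current)
        for i in order:
            current[i] = x[i]
            updated = f(current)
            totals[i] += updated - previous
            previous = updated
    return [t / len(orderings) for t in totals]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("f(x) - f(baseline):", model(x) - model(baseline))
```

The attributions sum to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. Libraries such as SHAP rely on approximations because exact enumeration grows factorially with the number of features.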

Frequently Asked Questions

How does explainable AI support trust and accountability?

Explainable AI provides transparency by explaining AI decisions, which helps users understand and trust the AI outcomes. This is crucial for compliance with regulations and ensuring ethical AI usage.

Who benefits from Explainable AI?

Various stakeholders benefit from Explainable AI:

- Data Scientists: Gain better insight into model behavior, which helps them improve model performance.

- Compliance Officers: Ensure adherence to legal standards by having clear reasoning for AI decisions.

- End Users: Experience more trust and understanding when interacting with AI systems.